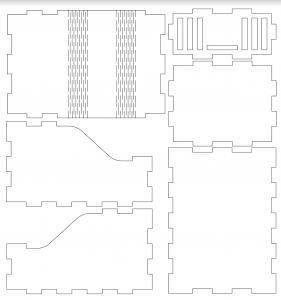

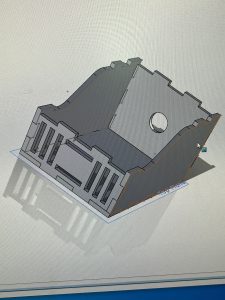

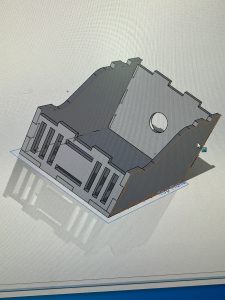

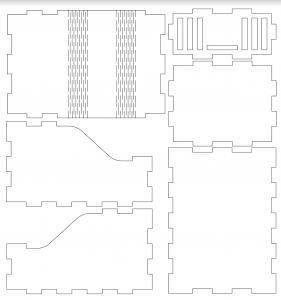

This week I worked on the design and assembly of the robot chassis and hardware. I made multiple adjustments to the CAD model to better accommodate our parts. First, the top piece of the chassis was too thick and inflexible to mold into the desired form factor, so I adjusted the front, back, and side plates to accommodate a thinner top panel. Additionally, I measured our motors, added supports to hold them, and added holes in the side panels to fix the axle. When testing these supports, we found that the motors were not secure enough, so I drafted a new CAD design that prevents the motors from moving in any direction during operation. I will laser cut and test this design as early as possible so that we can proceed with calibrating the motors in the robot. I am currently behind on the sensor system assembly because one of our sensors has not arrived yet, but I will start working with our current sensors next week and help with interfacing them.

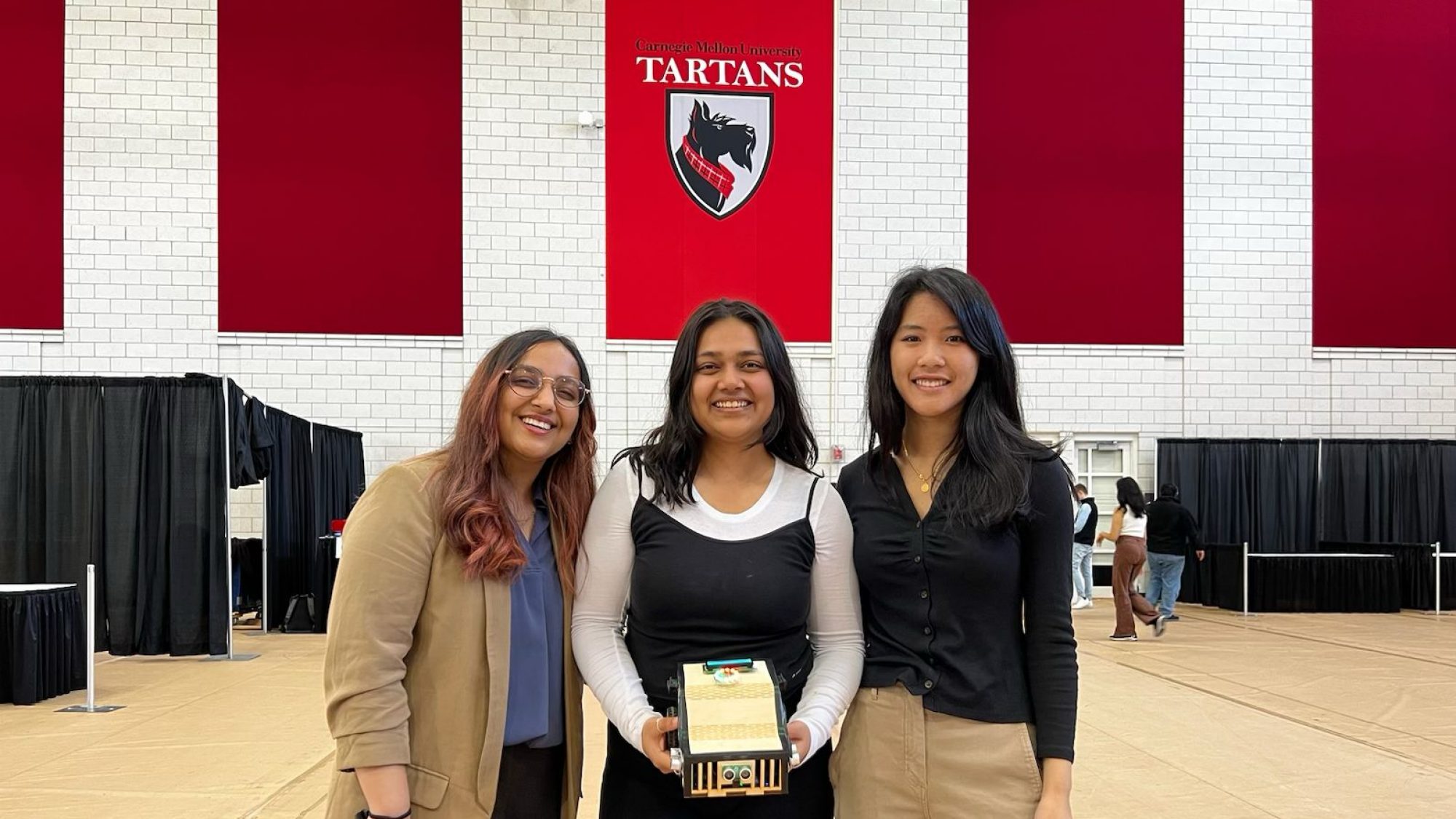

Team Status Report for 2/25

This week we worked on finishing and laser cutting the robot design, assembling the robot, getting started with motor control, and interfacing the ESP8266 and connecting it to the cloud. We also worked on the design review presentation and got started on the design report. We are currently focusing our efforts on the random exploration approach and will set up the motor control code accordingly.

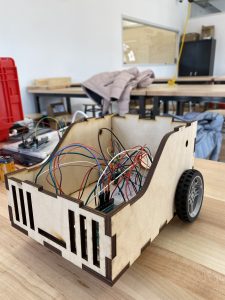

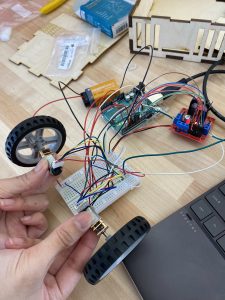

We had to make some modifications to the robot chassis, including holes for the wheels and a structure to lift the motors to the correct height for the wheels to touch the ground. Securing the motors proved challenging because of the force the wheels exert on them, so we are adding a structure that fits snugly over each motor to keep it from moving while the wheels are turning. We all met to connect the motors to the Arduino and to see how the wired components fit into the robot chassis. This was not technically part of our task assignments, but we felt it was necessary to understand how everything will look once our different systems are connected.

We are currently behind on the sensor system assembly, as one of our parts (the Grove Multichannel Gas Sensor) has not come in yet. We will start collecting data once this part arrives, and in the meantime we will set up Azure templates for sending data from the BME280 and ENS160 sensors to the cloud.

Eshita’s Status Report for 2/25

This week, I worked on setting up the Azure IoT Hub instance with its configurations and adding the ESP8266 to the devices list so that it can communicate with the cloud. I faced a number of issues in setting this up, as the ESP8266 Wi-Fi module we ordered does not have much documentation or listed steps for connecting to Azure. I used a modified Azure SDK for the NodeMCU version of the chip through the Arduino IDE, but there are additional requirements, like flashing the firmware, since we’re relaying it through the Arduino Uno. Flashing the firmware is very OS-dependent, so I am thinking about how we’re going to integrate all of this down the line. I fell behind schedule this week and was not able to work with Caroline on the sensor system assembly, but we will begin this immediately; one sensor still has not arrived. Connecting this hardware to the cloud was harder than I had imagined, since my previous experience was in building software-only solutions on the cloud. Not being able to contribute as planned this week has given me some anxiety about playing catch-up, but I will make completing this work my priority.

Aditti’s Status Report for 2/25

This week I worked on assembling the robot and on motor control. Caroline and I laser cut all the pieces of the robot chassis, which took a few iterations. The first iteration of the top piece was cut from 6 mm wood, which proved too thick to achieve the bending effect we were going for. We recut the top piece from 3 mm wood and adjusted the other pieces accordingly. We also realized our ball caster is too big, causing our robot to tilt backward, so we’ll have to get a new one of the appropriate size. I worked on interfacing the L298N with the DC motors and controlling them through the Arduino. I initially struggled to get the encoders to read correctly using hardware interrupts but eventually figured out the timing and update rules. I also implemented PID position control for one motor and will work on extending this to the robot position next week. We still need to test the current code with the motors mounted on the robot to ensure that we can achieve straight-line motion, which I plan to do next week. Additionally, I prepared for the design review presentation and planned out connections and interfaces for the design report.
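As an illustration of the PID position control described above, here is a minimal sketch in Python rather than the Arduino code in our repository. The gains, the 255 output limit (standing in for an 8-bit PWM range), and the toy motor model in `simulate` are all assumptions chosen for the example, not measured values from our motors.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook PID controller with a clamped output."""
    kp: float
    ki: float
    kd: float
    out_limit: float = 255.0  # assumed PWM magnitude limit
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the range the motor driver can accept.
        return max(-self.out_limit, min(self.out_limit, out))


def simulate(target_ticks=1000.0, steps=2000, dt=0.005):
    """Drive a toy motor (speed proportional to PWM) toward a tick target."""
    pid = PID(kp=0.8, ki=0.0, kd=0.02)  # hypothetical gains
    position = 0.0
    for _ in range(steps):
        pwm = pid.update(target_ticks, position, dt)
        position += 4.0 * pwm * dt  # hypothetical ticks per PWM unit per second
        # On the robot, position would come from the encoder interrupt count.
    return position
```

On the real robot the `measured` value would be the encoder tick count, and the gains would need to be tuned on the mounted motors.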

Next week I will continue to work on motor control and odometry and plan to get the logic working for random exploration on the field. My progress is currently on track with the schedule. I will also be working on the design report over the next few days.

The updated test code and CAD files can be found in the GitHub repository: https://github.com/aditti-ramsisaria/ece-capstone

Caroline’s Status Report for 2/18

This week, I primarily worked on the robot design and CAD model. I worked with Aditti to scope out the dimensions of the robot and the layout of the internal components. Then, I modeled the parts in SolidWorks and created a layout for laser cutting the pieces. I also inspected the parts that arrived for our robot this week and made additional measurements to ensure that our hardware assembly goes smoothly. We now have most of the key parts needed to assemble our robot, and are just waiting on one of the gas sensors and a shield for the Arduino. We are on track to laser cut the pieces and have the main robot chassis assembled by next week. Next week, I will start testing the parts that arrived. In particular, it is important that we verify the functionality of the motors and their compatibility with the rest of our systems.

This week, I did not have to apply any skills learned from ECE courses as I was mostly focused on robot construction. I learned CAD modeling from previous internships and the introductory mechanical engineering course.

Eshita’s Status Report for 2/18

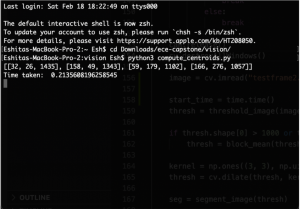

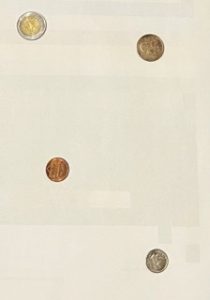

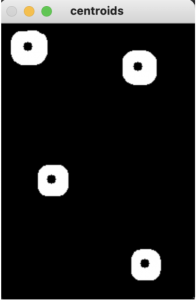

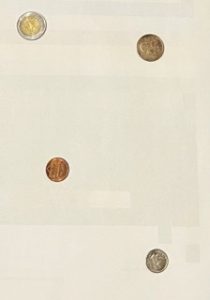

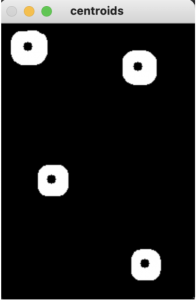

This week I worked on preparing our chosen cloud platform, Azure IoT Hub, and on starting the software integration of Aditti’s wavefront segmentation computer vision program with the USB camera. The camera integration proved difficult: at closer distances and with smaller arenas (I used letter-size paper as the “arena”), the camera’s shadow would be interpreted as an additional object. I therefore had to use my phone’s light as an artificial light source to make sure objects were illuminated correctly. I suspect we will need further testing to make sure the camera is stable and can capture frames without its own shadow appearing, given its overhead position. I have attached a few pictures illustrating my testing of the camera feed. We have created a GitHub repository for our code and CAD files so far.

For the Azure application, there are currently two steps I must perform. The first is to link an Arduino with the Wi-Fi module, and the second is to link this Wi-Fi module to the cloud. I have found the following resources to help me investigate both: https://blog.avotrix.com/azure-iot-hub-with-esp8266/ for connecting the ESP8266 to the cloud and https://www.instructables.com/Get-Started-With-ESP8266-Using-AT-Commands-Via-Ard/ for controlling the ESP8266 from the Arduino. Next week, after we have all the robot CAD files printed out, Caroline and I will start the next step: sensor array assembly and cloud data collection.

The courses that helped me with this week’s work are 10-301 (Introduction to Machine Learning) for computer vision segmentation and 18-220 (Electronic Devices and Analog Circuits) for understanding basic commands and time delays on the Arduino. We spent some additional time researching multitasking on the Arduino for parallel processes. I have no formal coursework in cloud computing, but I am certified as a Machine Learning Engineer and Cloud Architect for Google Cloud Platform, which has helped me immensely in investigating implementation on the cloud this week.

Aditti’s Status Report for 2/18

This week I focused on finalizing the robot CAD. I worked with Caroline to develop an effective layout for our parts on the robot and modeled the base plate in SolidWorks. I also researched controller strategies like pure pursuit and PID control for robot motion and finalized some algorithms: down-sampling and wavefront segmentation for vision, A* with visibility graphs for global path planning, and Runge-Kutta and PID control for motion. Some of the courses that cover these topics include 18-370 (Fundamentals of Controls), 16-311 (Intro to Robotics), 16-385 (Computer Vision), and 18-794 (Pattern Recognition Theory).
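To make the Runge-Kutta motion update concrete, here is a rough Python sketch of one odometry step for a differential-drive robot. It assumes the second-order (midpoint) form, in which the heading halfway through the step is used to project the travelled distance; the function name, arguments, and units are hypothetical, and our final implementation may differ.

```python
import math


def rk2_odometry_step(x, y, theta, d_left, d_right, wheel_base):
    """Advance the pose by one step given the distance each wheel travelled.

    d_left and d_right are wheel travel distances for this step (same units
    as x, y, and wheel_base); theta is the heading in radians.
    """
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    # Midpoint rule: evaluate the heading halfway through the step.
    mid_heading = theta + d_theta / 2.0
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)
    theta += d_theta
    # Wrap the heading into (-pi, pi].
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return x, y, theta
```

With equal wheel distances the robot moves straight along its heading; with equal and opposite distances it turns in place without changing `x` or `y`.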

I also started looking into alternatives to multi-threading on the Arduino, as we discovered that the robot will need to sense and move at the same time to make good use of time. So far, I have found some approaches that use millis()-based timing instead of delay(), along with OOP, to build a state machine on the Arduino. Additionally, I worked on preparing the slides for the design review and on preparing to present next week. We plan on laser-cutting the robot chassis tomorrow (Sunday 02/19) and assembling the robot as the parts come in. Next week I plan to start testing and calibrating the motors so that the robot can move along a fixed path. This will involve implementing the PID controller and odometry as well. Since we will be testing the alternative approach of random motion before path planning, I’ve decided to prioritize odometry over path planning for the moment.
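The idea behind millis()-based timing can be sketched as follows. This is a Python simulation of the pattern, not Arduino code: the loop counter stands in for the value millis() would return, and the class name, task periods, and task names are made up for the example. Each task checks how much time has elapsed since it last ran instead of blocking in delay(), so sensing and motor updates can interleave in a single loop.

```python
class PeriodicTask:
    """Runs an action whenever at least period_ms has elapsed."""

    def __init__(self, period_ms, action):
        self.period_ms = period_ms
        self.action = action
        self.last_run_ms = 0

    def poll(self, now_ms):
        # Non-blocking check, analogous to comparing against millis().
        if now_ms - self.last_run_ms >= self.period_ms:
            self.last_run_ms = now_ms
            self.action(now_ms)
            return True
        return False


def run_loop(tasks, duration_ms, tick_ms=1):
    """Simulated main loop: poll every task on every pass."""
    for now_ms in range(0, duration_ms + 1, tick_ms):
        for task in tasks:
            task.poll(now_ms)


log = []
tasks = [
    PeriodicTask(100, lambda t: log.append(("read_sensors", t))),  # hypothetical period
    PeriodicTask(20, lambda t: log.append(("update_motors", t))),  # hypothetical period
]
run_loop(tasks, duration_ms=200)
```

Because no task ever waits, the slower sensor read does not hold up the faster motor update.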

Team Status Report for 2/18

This week we got started on several aspects of our robot, including the CAD design and the Wavefront Segmentation algorithm for our path planning. We also ordered and received all the parts for our robot, and focused on how the different parts will integrate. Additionally, we researched how to connect the Arduino and Wi-Fi module to Azure, which is now our chosen cloud platform for ScentBot. Towards the end of the week, we compiled this information into our Design Review slides.

We have attached a photo of our completed CAD design.

With the parts we have ordered, we anticipate a few challenges, which we also discussed with our advisors. These are achieving good motor control and getting the robot to follow a straight path, since we are assembling the robot from custom-built parts. We are also considering an alternate path planning approach because our project depends heavily on sensor sensitivity.

If the sensors are sensitive enough to detect an object from farther than the 0.5 m radial distance, we will change our test setup to use a single scented object. This will be placed in a scent diffuser/spray to create a radial distribution of scent for our robot to follow. The robot will “randomly” explore the map until it detects a scent, and will then move in the direction of increasing probability. The robot will receive a travel distance and angle to follow and will reorient itself to a different angular orientation after covering this set distance. An image of our alternate testing approach is shown below.

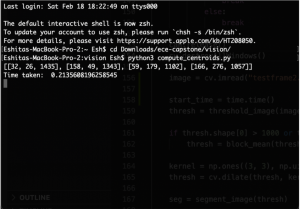
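The random exploration loop described above could look roughly like the Python sketch below. Everything specific here is an assumption for illustration: the 2 m square arena, the step-length range, the detection threshold, and the `read_scent` callback standing in for the gas sensor. It only covers the search phase up to the first detection, not the gradient-following phase.

```python
import math
import random


def next_move(rng, max_step_m=0.3):
    """Pick a random travel distance (m) and heading (rad)."""
    return rng.uniform(0.05, max_step_m), rng.uniform(-math.pi, math.pi)


def explore(read_scent, threshold, arena_m=2.0, max_moves=500, seed=0):
    """Random-walk until read_scent(x, y) reaches threshold or moves run out.

    Returns (found, path), where path is the list of visited (x, y) points.
    """
    rng = random.Random(seed)
    x = y = arena_m / 2.0  # start in the middle of the (hypothetical) arena
    path = [(x, y)]
    for _ in range(max_moves):
        if read_scent(x, y) >= threshold:
            return True, path
        distance, heading = next_move(rng)
        # Clamp to the arena so the robot never leaves the field.
        x = min(arena_m, max(0.0, x + distance * math.cos(heading)))
        y = min(arena_m, max(0.0, y + distance * math.sin(heading)))
        path.append((x, y))
    return read_scent(x, y) >= threshold, path
```

A synthetic field such as `lambda x, y: math.exp(-((x - 0.3) ** 2 + (y - 0.3) ** 2) / 0.1)` can stand in for the diffuser when trying this out.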

The Wavefront Segmentation algorithm processes an image of a letter-size sheet of paper in 0.22 s on average and can detect objects on a white background. It thresholds the image for faster computation, then calculates and prints the centroid location of each object. One challenge we immediately faced was keeping shadows out of the images captured by the overhead camera.
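For readers unfamiliar with the technique, here is a simplified Python sketch of thresholding followed by wavefront (breadth-first) region growing and centroid computation. It is not the code in our repository: the threshold value, the 4-connected neighbourhood, and the plain list-of-lists grayscale image are assumptions made to keep the example self-contained.

```python
from collections import deque


def segment(image, threshold=128):
    """Return the (row, col) centroid of each dark object on a light background.

    image is a list of rows of grayscale values (0 to 255). Pixels below
    threshold count as object pixels.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] >= threshold or labels[r][c]:
                continue  # background, or already part of an object
            label = len(centroids) + 1
            labels[r][c] = label
            queue = deque([(r, c)])
            sum_r = sum_c = count = 0
            # Expand the wavefront outward from the seed pixel.
            while queue:
                cr, cc = queue.popleft()
                sum_r += cr
                sum_c += cc
                count += 1
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not labels[nr][nc] and image[nr][nc] < threshold):
                        labels[nr][nc] = label
                        queue.append((nr, nc))
            centroids.append((sum_r / count, sum_c / count))
    return centroids
```

A shadow that falls below the threshold would be grown into its own region in exactly the same way, which is why lighting matters so much for the overhead camera.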

To arrive at a robot design that fits our use-case requirements, we drew on research into differential-drive robots, PID control, wavefront segmentation, Runge-Kutta localization, A* graph search, visibility graphs, state machines on the Arduino, fluid dynamics and distributions, and chemical compositions and gas sensor types.

Caroline’s Weekly Status Report for 2/11

This week, I practiced and delivered the proposal presentation for my team. In addition to the presentation, I helped research and organize different parts for our robot so that we can order components as soon as possible and begin testing and assembly. We had to shift a few items on our schedule such as robot assembly because they required specific parts, but we will catch up as soon as possible. Next week, I will help work on the CAD modeling for the robot chassis and also start setting up the Arduino codebase for path planning.

Eshita’s Status Report for 2/11

This week I focused on researching cloud implementations and alternatives for our sensor data collection and for hosting our classification algorithm. I also worked on the proposal presentation with Aditti and Caroline; we met several times to go over various design details. There are several alternatives to consider. We could collect the training data directly from the Arduino serial monitor and, once our model is implemented, send telemetry data for classification as payloads to Azure. We could also send the same payloads both for collecting the training data and for the actual classification. The machine learning model for classification would be imported into a Jupyter/Python instance. I also found a project that uses Python notebooks and libraries along with AWS IoT to read data from sensors, and I am spending more time on trade studies between the two platforms. I am more drawn towards AWS because of my prior experience with it, but my research shows advantages for both AWS and Azure. Both are economical solutions that can send many messages from various IoT devices to their dashboards. The main tradeoff I currently envision is the difference in ML capabilities between Azure and AWS. While AWS is less friendly to beginners and more costly, Azure’s ML capabilities might be harder to implement with its IoT Hub. My goal for week 5 is to create an instance with an Arduino board I have lying around and see if I can send some basic data about an LED being on or off to Azure, on schedule with the Gantt chart presented during our proposal.
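As a rough idea of what a shared payload could look like, the Python sketch below builds one JSON message that works for both training-data collection and classification. The field names (`deviceId`, `readings`, `label`) and the example device name are hypothetical, and no cloud SDK is called here; sending the string would be handled by whichever platform we choose.

```python
import json


def build_payload(device_id, readings, label=None):
    """Serialize one set of sensor readings as a JSON telemetry message.

    label is included only when collecting training data; classification
    requests leave it out.
    """
    message = {"deviceId": device_id, "readings": dict(readings)}
    if label is not None:
        message["label"] = label
    return json.dumps(message, sort_keys=True)


# Hypothetical reading names and values, for illustration only.
training_msg = build_payload("scentbot-01", {"temperature_c": 22.5}, label="alcohol")
classify_msg = build_payload("scentbot-01", {"temperature_c": 22.5})
```

Using one message shape for both cases means the device-side code does not need to change when we switch from collecting data to classifying it.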