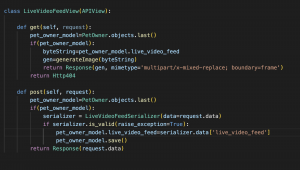

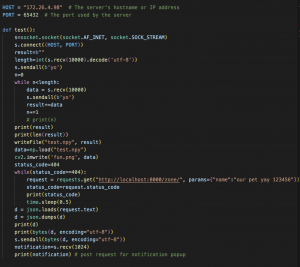

At the beginning of the week, we tested the system for the final presentation. The test most relevant to the web application measured how quickly the user is notified after a pet enters a forbidden zone. We learned that this latency is limited by the polling rate, i.e., the rate at which the frontend sends GET requests to the backend to check whether a notification should be displayed to the user. We also updated the block diagram and prepared a demo of the complete solution for the final presentation.

In terms of technical implementation, I worked on the live video feed. My initial iteration displayed an image of the room once per second: the CV component sent a byte array to the backend, which converted it into an image, and the frontend polled the backend every second using the useInterval React hook and displayed the latest image. Although this was easier to implement and the live video feed could be considered “functioning”, we wanted to display more frames per second. This led us to Django's StreamingHttpResponse object, which streams the response body from an iterator: the backend can keep yielding the latest byte array, and the frontend displays the image for each one. With this approach we were able to show more frames per second of the room, but the images do not always load quickly enough, which can cause flickering. We hope to reduce the flickering so that users can view the live video feed smoothly.
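One common way to push many frames through a single streamed response is the MJPEG-style `multipart/x-mixed-replace` format, where each frame is sent as one part of a never-ending multipart body. The sketch below is plain Python so it runs without Django, and it assumes the CV side sends complete JPEG bytes. The names `LatestFrame`, `frames_from`, `mjpeg_stream`, the `frame` boundary and the 10 fps default are hypothetical illustrations, not the project's actual code.

```python
import threading
import time
from typing import Iterable, Iterator, Optional

# Hypothetical boundary token; it must match the one advertised in the Content-Type.
BOUNDARY = "frame"
CONTENT_TYPE = f"multipart/x-mixed-replace; boundary={BOUNDARY}"


class LatestFrame:
    """Hypothetical thread-safe holder for the most recent JPEG bytes from the CV side."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._jpeg: Optional[bytes] = None

    def update(self, jpeg: bytes) -> None:
        # Called whenever the CV component posts a new byte array.
        with self._lock:
            self._jpeg = jpeg

    def get(self) -> Optional[bytes]:
        with self._lock:
            return self._jpeg


def frames_from(
    holder: LatestFrame, fps: float = 10.0, limit: Optional[int] = None
) -> Iterator[bytes]:
    """Yield the latest frame roughly `fps` times per second.

    Runs forever when `limit` is None (the normal streaming case); frames are
    skipped until the holder has received its first byte array.
    """
    sent = 0
    while limit is None or sent < limit:
        jpeg = holder.get()
        if jpeg is not None:
            yield jpeg
            sent += 1
        time.sleep(1.0 / fps)


def mjpeg_stream(frames: Iterable[bytes]) -> Iterator[bytes]:
    """Wrap each JPEG frame as one part of a multipart/x-mixed-replace body."""
    for jpeg in frames:
        header = (
            f"--{BOUNDARY}\r\n"
            "Content-Type: image/jpeg\r\n"
            f"Content-Length: {len(jpeg)}\r\n\r\n"
        ).encode("ascii")
        yield header + jpeg + b"\r\n"
```

In a Django view, the generator could be returned as `StreamingHttpResponse(mjpeg_stream(frames_from(holder)), content_type=CONTENT_TYPE)`, and the frontend could point a plain `<img>` element at that view's URL instead of polling. The browser then swaps in each part as it arrives rather than re-requesting the image, which may reduce the flickering. Because the generator runs for as long as a viewer stays connected, each open stream occupies a server worker under a synchronous WSGI deployment.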

As of right now, I am behind schedule. Before integration and testing on Tuesday, I will finish the live video feed and the authentication process, make the website user-friendly and intuitive, and deploy the frontend.

Next week, I hope to finish the individual web application tasks mentioned above. We also plan to do integration and testing before the final poster and report.

In addition to the system tests, I have written unit tests for each part of the web application, such as choosing forbidden zones, displaying a heat map of the pet activity logs, and displaying a notification to the user when a pet enters a forbidden zone, so that system integration goes as smoothly as possible.