What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk is the radar's limitation without the DCA1000EVM, which is needed to quickly process and stream the raw radar data in real time. Without it, we need a workaround in how the data is stored and sent, such as recording the data to a file and then streaming the file afterwards. This increases the total time from data collection, through wireless transmission to the base station computer, to classification by the neural network. To meet the time constraint of 3 seconds, we may need to send lower-frame-rate data, which reduces the quality of the data and may reduce the F1 score of the neural network.
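The trade-off between the 3-second constraint and the frame rate can be estimated with a simple latency budget. The sketch below is illustrative only: the function name, the split of the budget into recording, transfer, and inference time, and all numeric values in the usage example are assumptions, not measurements from our system.

```python
def max_frame_rate(budget_s, record_s, inference_s,
                   bytes_per_frame, link_bytes_per_s):
    """Estimate the highest frame rate (frames/s) that still fits the budget.

    Assumes the record-then-stream workaround: the radar records for
    `record_s` seconds, the file is then sent over a link with a sustained
    throughput of `link_bytes_per_s`, and the neural network needs
    `inference_s` seconds to classify. All inputs are hypothetical.
    """
    # Time left for the wireless transfer once recording and inference are paid for.
    transfer_s = budget_s - record_s - inference_s
    if transfer_s <= 0:
        return 0.0  # no time left to send anything

    # Number of frames that can be sent in the remaining time.
    max_frames = transfer_s * link_bytes_per_s / bytes_per_frame

    # Those frames were captured during the recording window.
    return max_frames / record_s


if __name__ == "__main__":
    # Placeholder values for illustration, not measured on our hardware.
    fps = max_frame_rate(budget_s=3.0, record_s=1.0, inference_s=0.5,
                         bytes_per_frame=100_000, link_bytes_per_s=1_000_000)
    print(f"Maximum frame rate within budget: {fps:.1f} frames/s")
```

Once the real file size per frame and wireless throughput are measured, substituting them into this estimate would show how far the frame rate has to drop, if at all.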

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

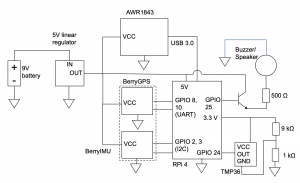

Changes were made to the circuit after assessing the available parts. Most of them concern communication between components and do not affect cost. The communication between the radar and the Raspberry Pi was changed from SPI to USB 3 since the radar evaluation module has a USB port. The communication between the GPS and the Raspberry Pi was changed from I2C to UART because, in the GPS/IMU module we bought, the GPS has a separate communication interface from the IMU, which still communicates over I2C. Since no buck converters were available at TechSpark and there is no estimate of when they will be restocked, a linear regulator, which is less expensive, was used to step the 9 V battery voltage down to 5 V. This decreases battery life because a linear regulator is less efficient than a buck converter. Instead of connecting the temperature sensor output to a transistor gate, the output voltage was simply added to 0.33 V for simplicity, since the range of expected output voltages (0.3-1.8 V) falls within the voltages accepted by the Raspberry Pi's GPIO pins (0-3.3 V).
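The battery-life cost of the linear regulator can be estimated from the 9 V and 5 V figures above. An ideal linear regulator passes the load current straight through and dissipates the voltage difference as heat, so its efficiency is at most the ratio of output to input voltage. The sketch below is a rough estimate only: the 500 mA load and the 90% buck converter efficiency are assumed placeholder values, and quiescent current and battery voltage sag are ignored.

```python
def linear_efficiency(v_in, v_out):
    """Upper bound on linear regulator efficiency (quiescent current ignored)."""
    return v_out / v_in


def linear_battery_current_ma(load_ma):
    """A linear regulator draws roughly the load current from the battery."""
    return load_ma


def buck_battery_current_ma(load_ma, v_in, v_out, efficiency):
    """Battery current for a buck converter with the given efficiency (0-1)."""
    # Input power = output power / efficiency, so I_in = I_out * V_out / (V_in * eff).
    return load_ma * v_out / (v_in * efficiency)


if __name__ == "__main__":
    V_IN, V_OUT = 9.0, 5.0   # from the design: 9 V battery, 5 V rail
    LOAD_MA = 500.0          # assumed load current, not measured
    BUCK_EFF = 0.90          # assumed buck converter efficiency

    eff = linear_efficiency(V_IN, V_OUT)
    i_lin = linear_battery_current_ma(LOAD_MA)
    i_buck = buck_battery_current_ma(LOAD_MA, V_IN, V_OUT, BUCK_EFF)

    print(f"Linear regulator efficiency: {eff:.1%}")
    print(f"Battery current, linear: {i_lin:.0f} mA")
    print(f"Battery current, buck:   {i_buck:.0f} mA")
    # Runtime scales inversely with battery current for a fixed capacity.
    print(f"Runtime with linear regulator relative to buck: {i_buck / i_lin:.0%}")
```

Under these assumptions, the linear regulator wastes a little under half of the battery's energy as heat, so the measured load current should be checked against the regulator's thermal limits as well as the battery capacity.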

Provide an updated schedule if changes have occurred.

One week was taken off the circuit schedule and replaced with work on preprocessing the radar data for input to the neural network.

How have you adjusted your team work assignments to fill in gaps related to either new design challenges or team shortfalls?

We are communicating internationally with the authors of the Smart Robot drone radar dataset to clarify preprocessing steps such as cubelet extraction of the radar data, which adds time. In the meantime, we are building other parts of the project, such as the circuit, earlier than planned, so that the overall work is rearranged instead of delayed.