This was a better week for the team. We were able to tackle many of the open problems with our capstone.

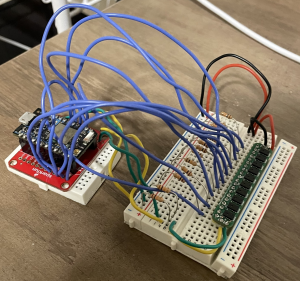

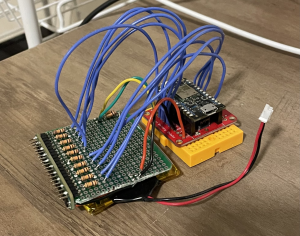

Our biggest concern was the Jetson. After reading online tutorials on using the Edimax adapter, we were finally able to connect the Jetson to WiFi. Next week, we’ll focus on connecting the sensors to the Jetson and the Jetson to the Unity web application so that we can reach our MVP and start formally testing it.

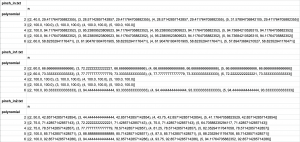

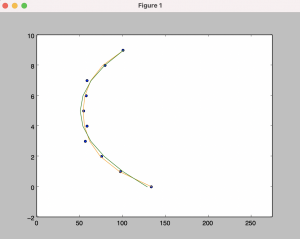

Since we now have some time, we went back to our gesture algorithm to improve its accuracy. We explored different machine learning models and decided to test whether Support Vector Machines (SVMs) would be effective. On the data we had already collected, the model performs well (95% accuracy on cross-validation). However, we know that this data does not cover the full range of gesture locations and speeds. We collected more data and plan to train our model on it over the next week. Training takes a lot of computational power, so we will try training on either a Jetson or a Shark machine. AWS was also suggested to us, and we might look into that as well.
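For readers curious what an SVM cross-validation check looks like, here is a minimal sketch using scikit-learn. It is not our actual training code: the synthetic data, the feature count, the RBF kernel, and the helper names `make_synthetic_gestures` and `cross_validated_accuracy` are all assumptions made for illustration.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def make_synthetic_gestures(n_per_class=60, n_features=8, n_classes=3, seed=0):
    """Hypothetical stand-in for real sensor data: one Gaussian blob per gesture."""
    rng = np.random.default_rng(seed)
    X_parts, y_parts = [], []
    for label in range(n_classes):
        center = rng.normal(scale=3.0, size=n_features)
        X_parts.append(center + rng.normal(size=(n_per_class, n_features)))
        y_parts.append(np.full(n_per_class, label))
    return np.vstack(X_parts), np.concatenate(y_parts)


def cross_validated_accuracy(X, y, folds=5):
    """Return the per-fold accuracy of a scaled RBF-kernel SVM."""
    # Scaling happens inside the pipeline so each fold is scaled using
    # statistics from its own training split only.
    model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0, gamma="scale"))
    return cross_val_score(model, X, y, cv=folds, scoring="accuracy")


if __name__ == "__main__":
    X, y = make_synthetic_gestures()
    scores = cross_validated_accuracy(X, y)
    print(f"mean accuracy: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

A high score on a sketch like this only says the model separates the data it was given. If the folds all come from the same recording conditions, the score will not reflect new gesture locations or speeds, which is why we want the additional data.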

Our biggest risk now is latency. We want to make sure that everything stays fast with the addition of the Jetson. We have a Raspberry Pi as a backup, but we’re hoping that the Jetson works, so we haven’t made any changes to our implementation. We also went ahead and ordered the band to mount the sensors on. We plan on collecting more data and training on it next week, once we find an effective way to train the model.
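One simple way to keep an eye on latency is to time a single pipeline step repeatedly and look at the median and tail values. The sketch below assumes the step can be wrapped in a zero-argument Python function. The names `measure_latency` and `summarize` are hypothetical helpers, not part of our codebase, and the `time.sleep` call only stands in for real work.

```python
import math
import statistics
import time


def measure_latency(step, n_runs=50):
    """Call `step` n_runs times and return each call's duration in milliseconds."""
    samples = []
    for _ in range(n_runs):
        start = time.perf_counter()
        step()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples):
    """Return median, nearest-rank 95th percentile, and max of the samples."""
    ordered = sorted(samples)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[p95_index],
        "max_ms": ordered[-1],
    }


if __name__ == "__main__":
    # Placeholder workload; replace with the real sensor-to-app step.
    stats = summarize(measure_latency(lambda: time.sleep(0.002), n_runs=20))
    print(stats)
```

Looking at the 95th percentile and the maximum alongside the median helps catch occasional slow calls that an average would hide.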

We should have a working(ish) demo ready for the interim demo on Monday. We seem to be on track, but we will need to further improve our detection algorithms and our overall approach to have a final, usable product.