This week was heavily design-focused. I was responsible for giving the Design Review presentation, so I spent most of last weekend researching and putting the presentation together. Doing it was very helpful because it showed me which parts of the project are better planned than others. For example, the “middleware” part of the project, i.e. the Jetson and the communication layer, is where we have the most open questions about the specs and how (or if) it will fit into the final scope of our project. By contrast, we have the most information on the sensors, which we have already ordered. I now have a clearer understanding of the direction of our capstone and which areas I need to explore more.

Speaking of areas I need to learn more about, I continued tinkering with the Jetson this week. It’s still behaving inconsistently, and I suspect it has something to do with how I flashed the image onto the SD card. As mentioned before, this is the weakest part of our project, so I need to spend more time on it next week. My goal before Spring Break is to get it set up, collect data from the sensors, and send that data to the web application. It’s a lot, but if I make this my sole focus, it’ll put the project as a whole in a good place when we come back.

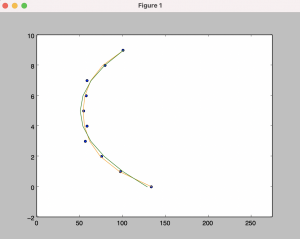

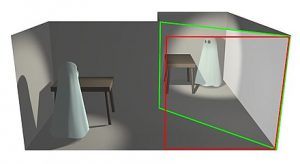

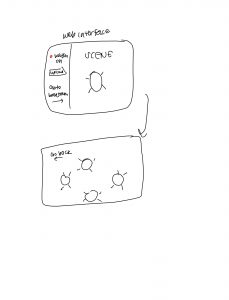

We ordered individual sensors to test while we wait for the PCB to be manufactured. We began testing them and decided to create a visual interface for the readings. My idea was that the yellow dots represent the sensors and the blue dot represents the object being detected.
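As a rough sketch of how a blue dot could be placed relative to a yellow one, the snippet below turns one reading into screen coordinates. It assumes each sensor reports a distance in centimeters and has a fixed position and facing direction on the page; the function name `objectPosition`, the `facingDeg` field, and the `PIXELS_PER_CM` scale are all made up for illustration and are not taken from our actual code.

```javascript
// Hypothetical drawing scale: how many screen pixels represent one centimeter.
const PIXELS_PER_CM = 2;

// sensor: { x, y, facingDeg } where (x, y) is the yellow dot in pixels and
// facingDeg is the direction the sensor points (0 = right, 90 = down the screen).
// distanceCm: the assumed distance reading from that sensor.
// Returns the pixel position where the blue "object" dot would be drawn.
function objectPosition(sensor, distanceCm) {
  const angle = (sensor.facingDeg * Math.PI) / 180;
  const offset = distanceCm * PIXELS_PER_CM;
  return {
    x: sensor.x + Math.cos(angle) * offset,
    y: sensor.y + Math.sin(angle) * offset,
  };
}

// Example: a sensor at (100, 50) pointing right sees something 10 cm away.
const example = objectPosition({ x: 100, y: 50, facingDeg: 0 }, 10);
console.log(example.x, example.y);
```

Keeping the geometry in one small function means the scale or the sensor layout can change without touching the code that draws the dots.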

I originally wanted to build this web application in Django, so I told Edward to write the MQTT communication in Python. However, the CSS of the “objects” needs to change with every new reading, and with a server-rendered page it would only update on a refresh. We therefore decided to move the web application entirely to the client side. I asked Edward to port the code to JavaScript, and we were then able to get an almost instantaneous rendering of the sensor data. A demo is linked below.
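To illustrate the client-side approach, here is a minimal sketch of turning an incoming message into a CSS update without any page refresh. The JSON payload shape (`sensorId`, `distanceCm`), the function names, and the pixel scale are assumptions for the example, not Edward's actual code. The MQTT wiring is left as a comment because it depends on the broker and client library in use.

```javascript
// Assumed payload shape (hypothetical): {"sensorId": "s1", "distanceCm": 42}
// Returns a clean reading object, or null if the payload is malformed.
function parseReading(payload) {
  let data;
  try {
    data = JSON.parse(String(payload));
  } catch (err) {
    return null; // not valid JSON
  }
  if (data === null || typeof data !== "object") return null;
  if (typeof data.sensorId !== "string") return null;
  if (!Number.isFinite(data.distanceCm)) return null;
  return { sensorId: data.sensorId, distanceCm: data.distanceCm };
}

// Converts a reading into the CSS offset for that sensor's blue dot.
// pixelsPerCm is an assumed drawing scale.
function dotStyle(reading, pixelsPerCm = 2) {
  return { left: `${Math.round(reading.distanceCm * pixelsPerCm)}px` };
}

// In the browser, the message callback of an MQTT client library would call
// these two functions and then apply the result to the matching element, e.g.
//   element.style.left = dotStyle(reading).left;
// The element lookup and the topic name are left out because they are
// specific to the page and the broker.
const reading = parseReading('{"sensorId": "s1", "distanceCm": 42}');
console.log(reading, dotStyle(reading));
```

Because the style is updated directly in the page as each message arrives, the dot moves as soon as the reading changes, which is the behavior a server-rendered page could not give us.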

https://drive.google.com/file/d/1w0-5nBngHThfDe-A_Iem-NNt1k3NcuCe/view?usp=sharing

Next week, I will focus on the Jetson and on finishing the design review paper. If I have time after these two, I will fine-tune our visualization so that it can be used as a dashboard to measure the latency and accuracy of the sensors. However, this may have to wait until Spring Break or after.

As mentioned before, the Jetson is the weakest part and the greatest risk. Although we have a contingency plan if the Jetson doesn’t work, I don’t see a reason why we have to remove it at the moment. I will reach out to my teammates and the professors on Monday so that we can get over this hump. We’re still pretty much on schedule, since we are getting a lot of work done before the actual sensors get shipped.