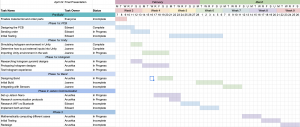

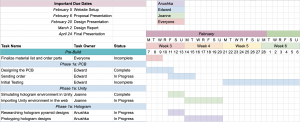

This week, we attached the board and the sensors to a wristband we bought. We collected new data with the board on our arm and retrained our number-of-fingers detection model on it. The sensors need to be elevated and slanted upward so that they don’t mistake the skin on the arm for a finger.

We are having trouble detecting swipes and pinches reliably, so we have been discussing removing pinches entirely from our implementation. Our SVM model, which classifies the number of fingers, gets confused between swipes and pinches, and this causes a lot of problems for gesture detection. We created a demo of a “swipes-only” system, and that seems to work much better, since it only has to distinguish between “none” and “one finger.” Anushka and Edward (mostly Anushka) have been trying out various new techniques to detect fingers and/or gestures, but we are having trouble finding an optimal one. Early next week, we will discuss our future plans for finger detection.
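To illustrate why the “swipes-only” case is simpler, here is a minimal sketch of how a swipe could be read off a short window of frames once each frame has been labeled “none” (0) or “one finger” (1). It is not our actual code: the `Frame` layout, the `detect_swipe` helper, the per-frame finger position, and the `min_travel` threshold are all assumptions made for the example.

```python
from typing import List, Optional, Tuple

# Hypothetical frame format: (finger_count, position).
# finger_count is the classifier label (0 = none, 1 = one finger);
# position is an assumed finger location along the sensor array,
# or None when no finger is seen.
Frame = Tuple[int, Optional[float]]


def detect_swipe(frames: List[Frame], min_travel: float = 3.0) -> Optional[str]:
    """Return "left", "right", or None for one window of frames."""
    # Keep only positions from frames labeled as "one finger".
    positions = [pos for count, pos in frames if count == 1 and pos is not None]
    if len(positions) < 2:
        return None  # not enough finger frames to measure movement

    # Net movement between the first and last finger position.
    travel = positions[-1] - positions[0]
    if abs(travel) < min_travel:
        return None  # finger barely moved; treat as no gesture

    return "right" if travel > 0 else "left"


window = [(0, None), (1, 1.0), (1, 3.0), (1, 5.5), (0, None)]
print(detect_swipe(window))
```

With only two labels to tell apart, the gesture logic reduces to tracking one position over time, which is part of why this version feels more reliable than one that also has to separate pinches.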

Anushka and Joanne have cut new acrylic sheets for the hologram and will work on creating an enclosure this week.

We are a bit behind schedule this week as we are working on refining our “swipes-only” system. We may create two modes for our system: one that uses the swipes-only model so that we are guaranteed smooth performance, and one that supports both swipes and pinches, which isn’t as smooth but offers more functionality. We are also considering changing the gesture for zooming while still using our two-finger model, but we have to decide by early next week whether and how to implement this.
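The two-mode idea could look roughly like the sketch below, where the selected mode decides which gestures the system is allowed to act on. The names `Mode`, `ENABLED_GESTURES`, and `accept_gesture` are hypothetical, and we have not settled on this design.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    SWIPES_ONLY = "swipes_only"                # smoother, fewer features
    SWIPES_AND_PINCHES = "swipes_and_pinches"  # less smooth, adds zoom


# Gestures each mode is allowed to act on (assumed gesture names).
ENABLED_GESTURES = {
    Mode.SWIPES_ONLY: {"swipe_left", "swipe_right"},
    Mode.SWIPES_AND_PINCHES: {"swipe_left", "swipe_right", "pinch_in", "pinch_out"},
}


def accept_gesture(mode: Mode, gesture: str) -> Optional[str]:
    """Pass the gesture through if the mode supports it, otherwise drop it."""
    return gesture if gesture in ENABLED_GESTURES[mode] else None


print(accept_gesture(Mode.SWIPES_ONLY, "pinch_in"))         # dropped in this mode
print(accept_gesture(Mode.SWIPES_AND_PINCHES, "pinch_in"))  # passed through
```

In practice, the swipes-only mode would also load the simpler “none” versus “one finger” model rather than just filtering gestures after the fact.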

We’ve decided to scrap the Jetson, as the latency of communication between the sensors and the Jetson was much higher than the latency between the sensors and our laptops. We were looking forward to using the Jetson for machine learning, but the network issues we’ve been having, along with the fact that we are able to train the models on our own laptops, led us to this conclusion. We have learned a lot from using it, and we hope that in the future we can find the cause of the issue.