This week was final presentation week, so as a group we worked a lot on polishing the slides and testing our prototype. In the latter half of the week we focused on testing latency, performance, and accuracy. Early in the week, Edward and I debugged our Unity/gesture code to produce better model translations. Previously, a bug caused irregular spikes in the model’s movement in response to gestures. We went through our whole codebase and found the cause: we were not resetting the appropriate values after one gesture completed, which caused problems with the data calculations. Our integrated model now responds fairly well to gestures! Since then we have been focusing on testing and the hologram portion. I added new features to the web app, including switching between models (the user can now choose among three) and incorporating our earlier sensor data visualization into the current web app. This week I will finish the web app UI and help finalize other portions of the project, such as the display/hologram construction and the final report and poster.
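The stale-state bug described above can be illustrated with a minimal Python sketch (our real code lives in Unity scripts; `SwipeTracker`, `update`, and `end_gesture` are hypothetical names). If the last finger position is not cleared when a gesture ends, the first movement of the next gesture is measured from the previous gesture's final point, which shows up as a sudden spike.

```python
class SwipeTracker:
    """Computes per-sample movement for one gesture at a time.

    Hypothetical sketch: last_x / last_y stand in for whatever
    per-gesture state the real Unity script keeps.
    """

    def __init__(self):
        self.last_x = None
        self.last_y = None

    def update(self, x, y):
        """Return the (dx, dy) movement since the previous point of this gesture."""
        if self.last_x is None:
            # First point of a new gesture: no previous point, so no movement.
            dx, dy = 0.0, 0.0
        else:
            dx, dy = x - self.last_x, y - self.last_y
        self.last_x, self.last_y = x, y
        return dx, dy

    def end_gesture(self):
        """Clear per-gesture state so the next gesture does not start from a stale point."""
        self.last_x = None
        self.last_y = None
```

Calling `end_gesture()` whenever the gesture classifier reports that a gesture has finished is one way to guarantee each gesture starts from a clean state; without it, the jump from the old gesture's last point to the new gesture's first point is counted as movement.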

Joanne’s Status Report for 4/23/2022

This was a busy week: we are wrapping up our project, and some COVID cases in our group delayed our work schedule.

Edward and I acquired black acrylic sheets to encase our hologram pyramid and the display. We tried gluing the cut-out plexiglass pieces for the hologram, but the glue we used from Tech Spark did not apply as cleanly as we hoped, nor did it hold. We then designed a new laser-cut layout for the hologram with puzzle-like interlocking edges, so each side can connect to the other sides of the pyramid without glue or tape, and we have drafted this new version. Because of COVID-related issues in our group, we are still working out a schedule to go into Tech Spark and cut it.

We are still working on our model gestures. Since our gesture algorithm did not work out and we are now relying on our finger detection algorithm for gestures, we moved noise filtering and data queueing to the Unity side. The model works well on test data for “good” swipe and zoom gestures. During live use, however, noise causes a lot of erratic model movement that we are trying to resolve: swipe gestures look acceptable live, but pinches behave unpredictably. Fixing this has been my primary goal this week. To filter out noise, I tried averaging the points that come in for a given gesture and using that average to derive a movement factor for the gesture. This noticeably reduced noise in swipes but, for reasons we have not yet identified, not in pinches.
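One simple way to combine data queueing with noise filtering is a fixed-size window of recent points whose mean is used in place of the raw reading. The sketch below is in Python rather than C#, and `SmoothingQueue` and its default window of 5 are hypothetical, not our Unity class or a tuned value.

```python
from collections import deque


class SmoothingQueue:
    """Fixed-size queue of recent finger positions with a moving-average readout.

    Hypothetical sketch: the window size is an assumed tuning parameter.
    """

    def __init__(self, window=5):
        self.points = deque(maxlen=window)  # oldest points drop off automatically

    def push(self, x, y):
        """Add a raw point and return the mean position of the current window."""
        self.points.append((x, y))
        n = len(self.points)
        mean_x = sum(p[0] for p in self.points) / n
        mean_y = sum(p[1] for p in self.points) / n
        return mean_x, mean_y

    def clear(self):
        """Drop buffered points, e.g. when a gesture ends."""
        self.points.clear()
```

A larger window smooths more but adds latency, since the smoothed position lags the finger by about half the window length.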

I have been finalizing the web app UI and added visual components to improve the user interface, such as a visualization of where your finger is on the trackpad and battery information. As a group, we discussed collecting data for testing and have been working on our final presentation.

Joanne’s Status Report for 4/16/2022

This week we worked together a lot on testing our integrated product and fixing bugs in each of our individual parts. We mounted our board onto the wristband we bought and have been testing our algorithm on new data reflecting user input taken from the arm. This data contains a lot of noise, which makes the model move erratically. I am working on smoothing out the noise on my end, while Edward and Anushka filter the data on their end as well.

I have created a new rotation algorithm that makes the model rotate much more smoothly. On ideal (noise-free) data, the model rotates at a smooth, consistent rate relative to how fast the user swiped. Previously, I had only a rough rotation algorithm that moved the model based on the finger distances given to me. Now I also take into account the timestamps of the data so I can approximate the user's swipe speed. This change applied only to rotation while it was limited to the x-axis.
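A minimal sketch of the timestamp idea, assuming each sample is a `(timestamp_seconds, position)` pair: estimate the swipe speed from the first and last samples, then turn it into a constant angular velocity that is applied every rendered frame. The function names and the `degrees_per_unit` constant are hypothetical.

```python
def swipe_speed(samples):
    """Average speed (position units per second) over a swipe.

    samples: list of (timestamp_seconds, position) tuples in time order.
    """
    if len(samples) < 2:
        return 0.0
    (t_first, p_first), (t_last, p_last) = samples[0], samples[-1]
    duration = t_last - t_first
    if duration <= 0:
        return 0.0
    return (p_last - p_first) / duration


def rotate_over_frames(speed, degrees_per_unit, frame_dt, n_frames):
    """Angle after each frame when rotating at a rate proportional to swipe speed."""
    angular_velocity = speed * degrees_per_unit  # degrees per second
    angle = 0.0
    angles = []
    for _ in range(n_frames):
        angle += angular_velocity * frame_dt
        angles.append(angle)
    return angles
```

Because the angle advances by `angular_velocity * frame_dt` each frame, the rotation rate depends on how fast the finger moved rather than on how many sensor packets happen to arrive.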

Due to problems with gesture detection on the sensor side, we are currently planning to drop pinches, since our ML model confuses pinching and swiping motions. Instead, we are thinking of supporting rotation about all axes to add functionality, and I have already added this. However, finger movements do not map intuitively onto the model's rotation: it rotates in the right direction but not by the expected angles. I am working on making the user's swipe produce the 3D rotation we would expect to see. As a group, we have also been discussing what other functionality we can add to what we have now in the time remaining. Some ideas are detecting taps or creating a new zoom scheme that does not involve the traditional pinching motion.
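One common way to map a 2D swipe to a 3D rotation (a trackball-style mapping, not necessarily what our Unity code does) is to rotate about the in-screen axis perpendicular to the swipe, by an angle proportional to the swipe length. This sketch assumes a right-handed frame with x right, y up, and z toward the viewer; Unity's left-handed axes would flip some signs. `degrees_per_unit` is a hypothetical tuning constant, and `rotate_vector` applies Rodrigues' rotation formula only to check the result.

```python
import math


def swipe_to_axis_angle(dx, dy, degrees_per_unit=1.0):
    """Map a swipe (dx to the right, dy upward) to a unit axis and an angle in degrees.

    The axis lies in the screen plane, perpendicular to the swipe, so the
    front of the model moves in the direction of the finger.
    """
    length = math.hypot(dx, dy)
    if length == 0:
        return (0.0, 0.0, 0.0), 0.0
    axis = (-dy / length, dx / length, 0.0)
    return axis, length * degrees_per_unit


def rotate_vector(v, axis, angle_deg):
    """Rotate vector v about a unit axis using Rodrigues' rotation formula."""
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    kx, ky, kz = axis
    vx, vy, vz = v
    # Cross product k x v.
    cx, cy, cz = ky * vz - kz * vy, kz * vx - kx * vz, kx * vy - ky * vx
    dot = kx * vx + ky * vy + kz * vz
    return (
        vx * c + cx * s + kx * dot * (1 - c),
        vy * c + cy * s + ky * dot * (1 - c),
        vz * c + cz * s + kz * dot * (1 - c),
    )
```

Rotating the front of the model, `(0, 0, 1)`, by the result of a rightward swipe moves it toward `+x`, and an upward swipe moves it toward `+y`, so the visible surface follows the finger.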

I am also working on new ways to eliminate the unexpected rotation spikes caused by noise. After graphing the data points, I decided to try averaging the data points in each swipe and using that average as the reference finger location, to reduce the effect of noise in the data. I will test this implementation this week.
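A minimal Python sketch of the averaging idea, assuming a swipe arrives as a list of `(x, y)` samples. The mean of all samples serves as the reference finger location, and comparing the mean of the first half with the mean of the second half gives a displacement in which a single noisy sample shifts the result only by its error divided by the number of samples in its half. Both function names are hypothetical.

```python
from statistics import fmean


def swipe_reference_point(points):
    """Average all (x, y) samples of one swipe into a single reference location."""
    if not points:
        raise ValueError("a swipe needs at least one point")
    xs, ys = zip(*points)
    return fmean(xs), fmean(ys)


def swipe_displacement(points):
    """Displacement from the mean of the first half to the mean of the second half."""
    if len(points) < 2:
        return 0.0, 0.0
    mid = len(points) // 2
    x0, y0 = swipe_reference_point(points[:mid])
    x1, y1 = swipe_reference_point(points[mid:])
    return x1 - x0, y1 - y0
```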

Anushka and I also recut the hologram from plexiglass to fit the dimensions of the display we are going to use for the presentation. We plan to build the light-blocking encasing this week.

Joanne’s Status Report for 4/10/2022

This week was demo week, so we focused on integrating the parts of our project. We did more testing on how the live sensor data translates to our model transformations (rotate and zoom). We performed as many gestures as we could from different angles and observed whether each one triggered the correct transformation on the model. We found a slight lag between user input and the model transformations, caused by delays in sending data over the spotty Wi-Fi in the lab. Professor Tamal mentioned that he could help us with the connectivity issue, so we plan to talk to him about it this week.

Beyond demo work, I also started receiving more information from the hardware so the web app can display things such as battery life and on/off status. When this information arrives from the hardware via MQTT, the web app updates itself. This week I also plan to create a visual layout showing where the finger is relative to our sensor layout.
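A sketch of the parsing step on the receiving side, assuming the hardware publishes a small JSON object such as `{"battery": 87, "on": true}`; the field names and encoding are hypothetical, since the actual message format is not described here. An MQTT client's message callback would pass the raw payload bytes to `parse_status`, and the web app would render the returned label.

```python
import json


def parse_status(payload: bytes) -> dict:
    """Decode a hypothetical status message such as b'{"battery": 87, "on": true}'."""
    data = json.loads(payload.decode("utf-8"))
    battery = max(0, min(100, int(data.get("battery", 0))))  # clamp to 0-100 %
    is_on = bool(data.get("on", False))
    return {"battery": battery, "on": is_on}


def status_label(status: dict) -> str:
    """Text the web app could show for the device status."""
    state = "on" if status["on"] else "off"
    return f"Battery: {status['battery']}% ({state})"
```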

I came up with a new algorithm to translate the data into smoother transformations, but I have not been able to implement it yet because of Carnival week. I hope this approach will make the model move more smoothly even in the presence of noise, and at a rate more consistent with how fast the user is swiping or pinching.

I believe we are on track. This week, as a group, we also plan to mount our hardware onto a wristband so that we can see what data taken from an actual arm looks like.

Joanne’s Status Report for 4/2/2022

This week I focused on drafting the web application, adding the UI and other features we need for the project. I added a login/register feature for users and am in the middle of creating a preview of the 3D model.

I refined the code for the 3D model rotation. Previously, noise in the data caused the model to spike in the wrong direction. I filtered out this noise by ignoring the data points that caused those spikes, which made the model rotate more smoothly. I also tested the zoom-in feature using live data from the sensors. Currently, zoom-in proceeds at a constant pace; I am working on a fix so that it matches the speed of the user's input gesture. This week I will focus on refining these algorithms so the transformations stay smooth even when there is a lot of noise.
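A minimal sketch of ignoring the points that cause spikes, assuming samples arrive as `(x, y)` pairs: a sample is dropped when it lies farther than a threshold from the last accepted sample. The function name and `max_jump` are hypothetical; the threshold would need tuning on recorded data.

```python
import math


def reject_spikes(points, max_jump):
    """Drop samples that jump more than max_jump from the last accepted sample."""
    accepted = []
    for x, y in points:
        if accepted:
            px, py = accepted[-1]
            if math.hypot(x - px, y - py) > max_jump:
                continue  # treat as noise; keep the previous point as the reference
        accepted.append((x, y))
    return accepted
```

The trade-off is that a genuinely fast movement can also be discarded, and if the very first sample is itself a spike, later good samples may all be rejected; resetting the reference at the start of each gesture limits the damage.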

I also started helping with the gesture detection part of the project. We held team meetings to discuss how to improve the algorithm's finger detection accuracy, concluded that we should use ML, and are now testing the new algorithm. We are also in the middle of integrating all of our components.

Joanne’s Status Report for 3/26/2022

This week I continued refining the Unity/web application portion of the project. By last week, I had tested the Rotate function with fake data over the MQTT protocol. At the beginning of this week, I talked with my group members, and they decided that, for now, rotation gestures will be limited to rotation about the x-axis (up). I modified the code to account for this change, and the model rotated well about the x-axis in tests with my dummy data.

I wanted to see how the Rotate function would react to real-life data, so we integrated the live sensor readings and the basic gesture recognition algorithm with my Unity web application. Currently, I run a Python script from my local terminal that publishes to the public MQTT broker. A JavaScript script in the Django web application reads the published data values and sends them to Unity, where they are parsed and used to rotate the model. I also created another parsing function in the JavaScript script so that it calls the appropriate function (i.e. Rotate or Zoom In/Out) and sends the data in the format expected by the Unity parsing function I wrote.
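The dispatch step can be sketched in Python (our bridge is JavaScript), assuming each message is a comma-separated string `gesture,x,y`. The routing table and the method names `ZoomIn` and `ZoomOut` are hypothetical; in a Unity WebGL build, the resulting method name and argument string would typically be handed to the Unity instance's `SendMessage` call.

```python
# Hypothetical routing table: gesture label -> Unity method name.
ROUTES = {
    "swipe": "Rotate",
    "pinch_in": "ZoomIn",
    "pinch_out": "ZoomOut",
}


def route_message(message: str):
    """Turn 'gesture,x,y' into (unity_method, argument_string), or None to ignore it."""
    parts = message.strip().split(",")
    if len(parts) != 3:
        return None  # malformed message
    gesture, x_text, y_text = parts
    method = ROUTES.get(gesture.strip().lower())
    if method is None:
        return None  # unclassified gestures are ignored
    try:
        x, y = float(x_text), float(y_text)
    except ValueError:
        return None  # non-numeric coordinates
    # Re-serialize as one string, the form a Unity-side parser would receive.
    return method, f"{x},{y}"
```

Returning `None` for unknown labels mirrors how the live test ignored gestures that were not classified as swipes.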

After testing with live data, the model rotated well about the x-axis. Since the gesture algorithm is not complete yet, we tested by ignoring all gesture data not classified as a swipe. A demo of the working prototype is at the Google Drive link below, and it will also be on our main team status report. We are also still investigating a problem during the transition between two swipe gestures: the model dips slightly away from the correct direction, then immediately moves in the right direction. We are testing whether the problem lies in the data being sent over or in how I calculate rotation values. We will also work on making the rotation smoother this week.

https://drive.google.com/file/d/1h3q5Os-ycagcWNNDkVVVS57qLM31H1Ls/view?usp=sharing

Lastly, I finished a basic zoom function, so the model can now zoom in and out when the Django web application calls the corresponding Unity function. Right now it zooms in or out by a constant factor on every call; I am still figuring out how to make the zoom follow how fast the user is pinching in and out. Refining these two functions is what I will be working on this week. Since we are still working out zoom-out gesture detection, we have not been able to test the zoom functionality with live data, but that is another goal for this week.
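One way to make zoom follow pinch speed, sketched in Python under the assumption that each update reports the distance between the two fingers (`spread`) at the start and end of a short interval: the per-call scale factor grows with the rate of change of the spread and is clamped so a noisy reading cannot produce a huge jump. `zoom_factor`, `sensitivity`, and `max_step` are hypothetical.

```python
def zoom_factor(start_spread, end_spread, duration_s, sensitivity=0.1, max_step=0.5):
    """Scale factor for one pinch update; faster pinches give larger steps.

    A result above 1 zooms in (fingers moving apart), below 1 zooms out.
    Keeping max_step below 1 guarantees the factor stays positive.
    """
    if duration_s <= 0:
        return 1.0
    speed = (end_spread - start_spread) / duration_s  # spread units per second
    step = max(-max_step, min(max_step, sensitivity * speed))
    return 1.0 + step
```

With this rule the same spread change performed twice as fast yields twice the step, up to the clamp, instead of the constant factor used now.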

Joanne’s Status Report for 3/19/2022

So far, I have worked more on the Unity/web application side of the project. I wanted to test whether calling Unity functions would work with streaming data, to mimic the data that will be streamed from the Jetson Nano to the web application. I decided to use the MQTT protocol to stream data from a Python script, with Eclipse Mosquitto as the MQTT broker. The data is sent from the Python script to the Django web application as a string. For now, the script sends dummy data containing the gesture name and the x and y coordinates for testing.
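A sketch of a dummy-data generator like the one described, assuming the string format `gesture,x,y`; the function name and its parameters are hypothetical. It produces evenly spaced points along a straight swipe and can optionally add uniform jitter, with a seed so noisy test runs are repeatable. Each string would then be published to the broker by the MQTT client.

```python
import random


def dummy_swipe_messages(start, end, steps, gesture="swipe", noise=0.0, seed=None):
    """Build 'gesture,x,y' strings for a straight-line swipe from start to end."""
    rng = random.Random(seed)  # seeded so noisy runs can be reproduced
    (x0, y0), (x1, y1) = start, end
    messages = []
    for i in range(steps):
        t = i / (steps - 1) if steps > 1 else 0.0  # fraction of the way along the swipe
        x = x0 + (x1 - x0) * t + rng.uniform(-noise, noise)
        y = y0 + (y1 - y0) * t + rng.uniform(-noise, noise)
        messages.append(f"{gesture},{x:.3f},{y:.3f}")
    return messages
```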

I wrote a script in the Django application that calls a Unity function I wrote, “Rotate”, every time a new data string is published to the broker. When Rotate is called, it parses the data that was just sent. When I first tested with dummy data, I ran into issues with the model getting stuck after a certain amount of rotation. I fixed that by removing a rotation limit I had written incorrectly. The model now rotates in the right direction (up-left, down-right, up-right, down-left) based on the data. I am still working on the algorithm that translates the streamed data into more precise rotation angles.
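The "stuck" symptom is what a hard clamp on the accumulated angle produces: once the limit is reached, further input in that direction is discarded. This Python sketch contrasts a hypothetical clamped update (an assumed reconstruction of this kind of bug, not the original code) with an update that accumulates freely and wraps into [0, 360).

```python
def clamped_update(angle, delta, limit=90.0):
    """Buggy pattern: at the limit, further rotation in that direction is lost."""
    return max(-limit, min(limit, angle + delta))


def wrapped_update(angle, delta):
    """Accumulate freely and wrap into [0, 360) so the value stays bounded."""
    return (angle + delta) % 360.0


def apply_deltas(update, deltas, start=0.0):
    """Apply a sequence of rotation deltas using the given update rule."""
    angle = start
    for d in deltas:
        angle = update(angle, d)
    return angle
```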

At the same time, I am looking into how to perform zoom operations with another set of dummy data. I have scripted zooming for a single view of the game object; next I need to figure out how to access all four cameras (needed to show all four perspectives of the 3D model) and zoom all four of them. This week I also plan to work out how to relate the streamed data to how fast the zoom occurs.
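Zooming all four views consistently amounts to applying one factor to every camera. This Python sketch assumes orthographic cameras, where Unity's `Camera.orthographicSize` sets the visible half-height (perspective cameras would adjust `Camera.fieldOfView` instead); `ViewCamera`, `zoom_all`, and the size limits are hypothetical stand-ins, not Unity API.

```python
from dataclasses import dataclass


@dataclass
class ViewCamera:
    """Stand-in for one of the four Unity cameras (hypothetical Python model)."""
    name: str
    ortho_size: float  # smaller size means the model appears larger


def zoom_all(cameras, factor, min_size=0.5, max_size=20.0):
    """Apply one zoom factor to every camera so the four views stay identical.

    factor > 1 zooms in; sizes are clamped to hypothetical limits.
    """
    for cam in cameras:
        cam.ortho_size = max(min_size, min(max_size, cam.ortho_size / factor))
```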

This week, I hope to finish basic working versions of the zoom and rotate functions driven by streamed dummy data, so we can start integrating later in the week.

Joanne’s Status Report for 2/26/2022

This week I worked with my group on the more design-related portions of our project. We finished the design review slides and started thinking about the design review paper. We also received some sensors this week and have been testing them.

I continued working on the Unity/web application portion of the project. Last week I worked solely in Unity to see whether I could take in dummy data and make changes to the model (i.e. rotating, moving). These changes would reflect the gesture the user makes on the trackpad portion of our project. We decided on the following flow for our project: the Jetson Nano sends data to our web application, which then communicates via JavaScript with the Unity application embedded inside it.

I created the Django web application that will host the web portion of our project, then embedded last week's Unity application in it. Next, I wanted to figure out how to send serialized data from the web application to Unity, so that once sensor data reaches the web app, we can pass it on to Unity to reflect the changes. I researched how Unity communicates with a web application (specifically Django). So far, pressing a button representing up, down, left, or right calls the corresponding Unity function I wrote to move the 3D model in that direction. This shows we can send basic serialized data from an HTML page to the Unity application.

This week, I realized that the way I implemented rotation last week relies on certain Unity functions that track mouse deltas for you. The problem is that these functions only take input when the user drags the mouse and compute the mouse delta themselves, whereas we need to drive rotations from data sets (x, y coordinates from the sensors). I started looking into how to replicate the effect of these functions using just the x, y positions from dummy data (which will later be the sensors' x, y coordinates).
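Replicating a mouse delta from raw positions amounts to differencing consecutive samples. A minimal Python sketch, assuming samples arrive as `(x, y)` tuples; the function names, the `degrees_per_unit` constant, and the sign convention (horizontal motion turns the model about the vertical axis, vertical motion about the horizontal axis with its sign negated) are hypothetical choices.

```python
def deltas(points):
    """Per-sample (dx, dy) between consecutive (x, y) positions, like a mouse delta."""
    return [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(points, points[1:])]


def rotation_from_deltas(points, degrees_per_unit=1.0):
    """(rotation about the vertical axis, rotation about the horizontal axis) per step."""
    return [(dx * degrees_per_unit, -dy * degrees_per_unit) for dx, dy in deltas(points)]
```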

For the upcoming week, I plan to write a script that sends dummy data over Wi-Fi to the web application and applies transformations to the 3D model. I am not yet certain, but I am considering using the MQTT protocol, as Edward and Anushka did for sending data from the sensors to their visualization application. I will also look further into rotation algorithms this week.

Joanne’s Status Report for 2/19/2022

This week I worked more on the Unity portion of our project, mimicking the transformations that would be applied to the 3D model after a user makes a gesture (i.e. zooming in/out, swiping). I first wrote a script that moves the 3D object along its x, y, and z axes when the user presses specific keys. This was to make sure that the object responds to key presses and that the projected image also translates well. Next, I got the swipe motion, which maps to rotation of the object, working: the script currently takes the x, y coordinates of the mouse cursor and rotates the 3D object accordingly. I plan to work on the last gesture (zooming in/out) this week. I have also been discussing with Anushka (who is working on the Jetson Nano part) how the data should be serialized (i.e. what data should be sent, and in which format) when sent from the Jetson to the web application.

I worked on portions of the design slides and discussed our future design plans with the group.

Joanne’s Status Report for 2/12/2022

This past week I worked on the proposal slides with my team and presented them during the proposal review. We received feedback from our classmates and TAs; one piece of feedback was to think about how we would implement the hologram visual portion of our project.

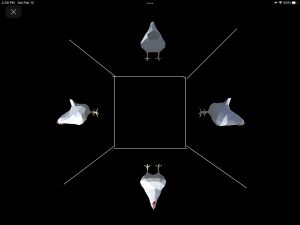

Thus, for the latter part of the project, I worked in Unity to create the layout of the hologram. To project a hologram onto our plexiglass pyramid, we need to place four perspectives of a 3D model around the base of the pyramid. My main responsibility was to use Unity to create the four-perspective view of an arbitrary 3D model and then export it to the web using WebGL. The image shows the Unity scene while it is running; I drew in the white lines to illustrate how the pyramid would be placed relative to this view.
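The four-view layout can be sketched as four cameras spaced 90 degrees apart on a circle around the model, each turned to face it. This Python sketch assumes Unity-style axes (y up, a yaw of 0 looking along +z, a yaw of 90 looking along +x) and a model at the origin; the function name, view names, and distance are hypothetical. Each camera's image would then be placed on the matching side of the screen, under the corresponding face of the pyramid.

```python
import math


def pyramid_camera_rigs(distance, height=0.0):
    """Return (name, (x, y, z), yaw_degrees) for four cameras facing the origin.

    Cameras sit 90 degrees apart on a circle of radius `distance`.
    """
    rigs = []
    for i, name in enumerate(("front", "right", "back", "left")):
        angle = math.radians(90 * i)
        x = distance * math.sin(angle)
        z = -distance * math.cos(angle)
        # Direction toward the origin is (-x, -z); yaw = atan2(dir_x, dir_z).
        yaw = math.degrees(math.atan2(-x, -z)) % 360.0
        # Rounding removes floating-point residue such as 6e-16 from sin/cos.
        rigs.append((name, (round(x, 6), height, round(z, 6)), round(yaw, 6)))
    return rigs
```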

I exported the Unity scene to a web browser so we could place the pyramid on top of my iPad, which now displays the Unity scene with the four views of a model. Edward and I helped Anushka laser-cut the plexiglass pyramid she designed. An image of a basic hologram model that we completed is on our team status report page for this week.