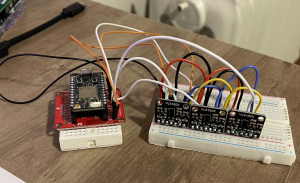

This week, I continued working on the finger and gesture detection and further integrated the wearable with the Webapp. Our data is extremely noisy, so I have been working on smoothing out the noise.

Originally, the Photon was unable to send a timestamp marking when it had collected all 10 sensor values, because the Photon's RTC does not have millisecond accuracy. However, I found a way to approximate the time of each sensor reading by summing the elapsed time in milliseconds. This made the finger detection data sent to the Webapp appear smoother.
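As a rough illustration of the timestamp workaround, the Python sketch below anchors a sequence of readings to a coarse clock value and adds the elapsed milliseconds between readings as a running sum. It is a minimal sketch, not the code running on the Photon: the function name `reconstruct_timestamps` and the per-sample `elapsed_ms` deltas (for example, differences of a millisecond counter) are assumptions for illustration.

```python
from typing import List


def reconstruct_timestamps(base_time_ms: int, elapsed_ms: List[int]) -> List[int]:
    """Approximate when each sensor reading was collected.

    base_time_ms: coarse wall-clock anchor (e.g. an RTC reading in seconds * 1000)
    elapsed_ms:   hypothetical per-sample deltas, i.e. milliseconds elapsed
                  since the previous reading (the first delta is usually 0)
    """
    timestamps = []
    t = base_time_ms
    for delta in elapsed_ms:
        t += delta  # running sum of elapsed time gives sub-second resolution
        timestamps.append(t)
    return timestamps


print(reconstruct_timestamps(1000, [0, 12, 11, 13]))
# [1000, 1012, 1023, 1036]
```

Because errors in the deltas accumulate, re-anchoring to the RTC every so often would keep the approximation from drifting.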

I am not super confident in our SVM implementation of finger detection, so I want to remove pinches entirely in order to reduce noise. Two fingers that are close together are often confused with one finger, and vice versa. As a result, many swipes get classified as pinches, which throws everything off. So, I worked on an implementation without pinches, and it seemed to work much better. As a group, we are deciding whether this is the direction to go. If we continue with only detecting swipes, we will need full 3D rotation rather than rotation about the y-axis only.
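To make "full 3D rotation" concrete, here is a hypothetical Python sketch of how swipe displacements could drive rotation about two axes instead of one. It assumes horizontal swipes (`dx`) map to rotation about the y-axis and vertical swipes (`dy`) map to rotation about the x-axis, with an invented `gain` constant in radians per unit of displacement; none of these names come from our actual Webapp code.

```python
import math
from typing import List

Matrix = List[List[float]]

IDENTITY: Matrix = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rot_x(angle: float) -> Matrix:
    """Rotation matrix about the x-axis (angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(angle: float) -> Matrix:
    """Rotation matrix about the y-axis (angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def apply_swipe(orientation: Matrix, dx: float, dy: float, gain: float = 0.01) -> Matrix:
    """Return a new orientation after one swipe (hypothetical mapping).

    dx, dy: swipe displacement in arbitrary sensor units (assumed)
    gain:   radians of rotation per unit of displacement (assumed tuning constant)
    """
    # dx alone reproduces y-axis-only rotation; dy adds the second axis.
    step = matmul(rot_x(dy * gain), rot_y(dx * gain))
    # Pre-multiply so the step rotates about the fixed (world) axes.
    return matmul(step, orientation)


orientation = apply_swipe(IDENTITY, dx=30.0, dy=-45.0)
```

Pre-multiplying by the step rotates about the fixed world axes, so a vertical swipe tilts the object the same way on screen no matter how it has already been spun; post-multiplying instead would rotate about the object's own axes.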

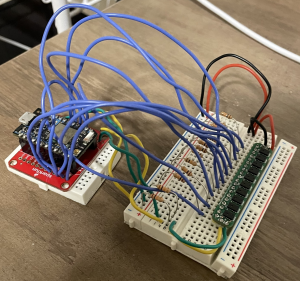

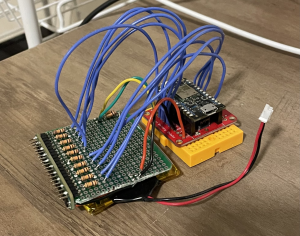

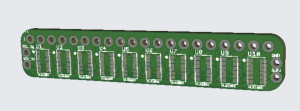

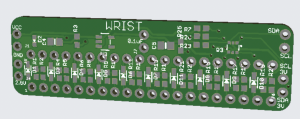

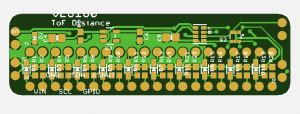

This week, we also attached the board to a wristband we ordered and have been working off of that. The sensors need to be elevated slightly, and I am not sure how annoying that will be for a user. This elevation also means users can't just swipe casually on their skin; they need to deliberately place their finger so that the sensors can catch it.

This coming week, I will work on filtering and reducing noise in our swipes-only implementation, which I think we may stick with for the final project. This will be a tough effort.
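One simple option for steadying shaky finger locations is a sliding-window median filter, sketched below in Python. This is an assumption about what might help, not the algorithm we have settled on: the names `MedianSmoother` and `smooth` and the default window size of 5 are hypothetical, and the filter treats x and y independently.

```python
from collections import deque
from statistics import median
from typing import Deque, Iterable, List, Tuple

Point = Tuple[float, float]


class MedianSmoother:
    """Sliding-window median filter for 2D finger positions."""

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        # deque(maxlen=...) silently drops the oldest sample when full.
        self.xs: Deque[float] = deque(maxlen=window)
        self.ys: Deque[float] = deque(maxlen=window)

    def update(self, point: Point) -> Point:
        x, y = point
        self.xs.append(x)
        self.ys.append(y)
        # Coordinate-wise median of the last `window` samples: a single
        # spurious reading cannot pull the output far.
        return (median(self.xs), median(self.ys))


def smooth(points: Iterable[Point], window: int = 5) -> List[Point]:
    """Run a fresh MedianSmoother over a sequence of positions."""
    f = MedianSmoother(window)
    return [f.update(p) for p in points]


readings = [(10.0, 0.0), (11.0, 0.0), (50.0, 0.0), (12.0, 0.0), (13.0, 0.0)]
print(smooth(readings, window=3))
# [(10.0, 0.0), (10.5, 0.0), (11.0, 0.0), (12.0, 0.0), (13.0, 0.0)]
```

A median suppresses isolated spikes (the single bad reading of 50 never reaches the output), but it delays real movement by roughly half a window, so the window size trades steadiness against responsiveness.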

I am a bit behind schedule as the project demo approaches. Detected finger locations are a bit shaky, and I'm not sure I can find a way to clean them up. I will work with Joanne on algorithms to reduce noise, but I'm not too confident we can make a really good one. Removing pinches limits the functionality of our device, and I'm not sure it's even “impressive” with just swipes. To make up for this, we will need to either explore more gestures or broaden our use case.