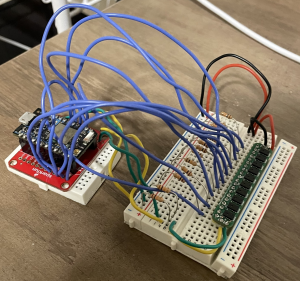

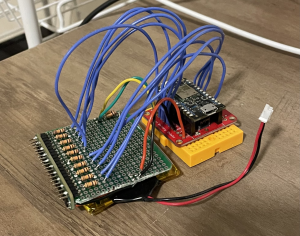

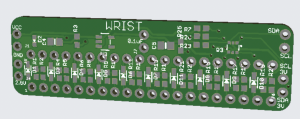

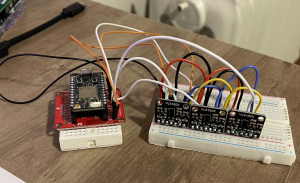

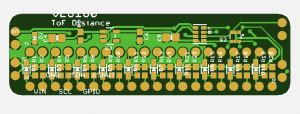

This week, I worked on gesture recognition algorithms with Anushka and helped integrate everything with Joanne.

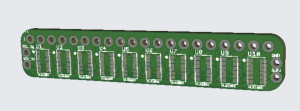

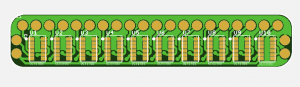

Our algorithm fits a curve to the readings from the 10 sensors and finds the local minima and maxima of that curve. If the curve has a local maximum, we classify the data as a pinch. However, I am not satisfied with its accuracy. I think we need to look at how the data changes over time rather than at a single snapshot, but I had trouble figuring out how to do that. Next week, I will work on this more and possibly explore other techniques.
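A minimal sketch of the curve-fitting check described above, assuming the 10 sensors are evenly spaced and indexed 0 to 9, and assuming a degree-4 polynomial as the curve family. The write-up does not say which curve or degree we use, so both are placeholders, and `local_extrema` and `is_pinch` are hypothetical names. Critical points come from the roots of the first derivative, and the sign of the second derivative separates maxima from minima.

```python
import numpy as np


def local_extrema(readings, degree=4):
    """Fit a polynomial to one frame of sensor readings; return (maxima, minima).

    Assumptions (not from the original write-up): sensors are evenly spaced
    at positions 0..n-1, and a degree-4 polynomial is an adequate fit.
    """
    y = np.asarray(readings, dtype=float)
    x = np.arange(len(y), dtype=float)
    poly = np.polynomial.Polynomial.fit(x, y, degree)
    d1 = poly.deriv(1)  # slope of the fitted curve
    d2 = poly.deriv(2)  # curvature, used for the second-derivative test

    maxima, minima = [], []
    for r in d1.roots():
        # Keep only real critical points that lie within the sensor range.
        if abs(r.imag) > 1e-9 or not (x[0] <= r.real <= x[-1]):
            continue
        curvature = d2(r.real)
        if curvature < 0:
            maxima.append(float(r.real))
        elif curvature > 0:
            minima.append(float(r.real))
    return maxima, minima


def is_pinch(readings, degree=4):
    """Classify a frame as a pinch if the fitted curve has a local maximum."""
    maxima, _ = local_extrema(readings, degree)
    return len(maxima) > 0
```

Restricting roots to the sensor range matters because a polynomial fit often has critical points outside the span of the data, and those say nothing about the hand. Because this check looks at a single frame, it cannot capture how the signal evolves over time.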

With Joanne, I also wrote a script to test what we have so far. In real time, we can collect data from the wearable, process it, and send it to our webapp. The webapp displays a 3D model that rotates as we swipe. This demo works fairly well, but there are still some kinks to work out. Pinches are ignored in this demo, but swipe detection honestly works pretty well.
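The collect, process, and send flow can be sketched as a small loop. This is a hypothetical outline, not our actual script: the frame source, the `classify` function, the `send` transport to the webapp, and the gesture labels `swipe_left` and `swipe_right` are all assumptions. Like the current demo, it drops pinches and forwards only swipes.

```python
import json
from typing import Callable, Iterable, Optional


def run_pipeline(frames: Iterable[list],
                 classify: Callable[[list], Optional[str]],
                 send: Callable[[str], None]) -> int:
    """Classify each sensor frame and forward swipes to the webapp.

    All names here are hypothetical; `send` stands in for whatever
    transport carries messages to the webapp. Returns the number of
    messages sent.
    """
    sent = 0
    for frame in frames:
        gesture = classify(frame)
        # Mirror the current demo: only swipes rotate the model; pinches are ignored.
        if gesture not in ("swipe_left", "swipe_right"):
            continue
        send(json.dumps({"gesture": gesture}))
        sent += 1
    return sent
```

Passing `classify` and `send` in as functions keeps the loop independent of the gesture algorithm, so a better pinch detector could be swapped in later without touching the webapp side.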

I am on track this week, but I am worried that we will not get a good algorithm in place and will eventually fall behind. Further integration will also take some time. Next week, I will work on our deliverable, which will probably be a refined version of our swipe-to-rotate demo. The major issue for us going forward is differentiating between a swipe and a pinch.