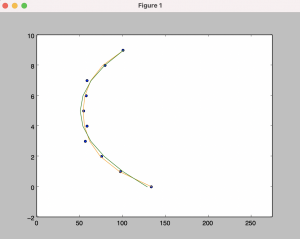

This week, I mainly focused on testing different hologram pyramid designs. We currently have three options:

- Face angles of 53°-53°-127°-127°, with dimensions sized for an iPad.

- Face angles of 53°-53°-127°-127°, with dimensions sized for a monitor.

- Face angles of 60°-60°-120°-120°, with dimensions sized for an iPad.

So far, we have tried all three, and the last one works best, but only in total darkness. Before demo day, we plan to test a few new angles and then pick the one with the strongest visual effect. I've also been working on the final sewing of the device onto the wristband. We adjusted the height of the sensors because the user's hand was interfering with the input. I have also been working on the poster for our final demo.

This is the final official status report of the semester. All the parts are sufficiently complete, though we know there are always areas for improvement. We plan to make minor changes during the week before demo day, but otherwise, we have a completed product! My main concern is how the demo will go overall. We have tested our product among ourselves, and we aim to test it with several participants before demo day and the final paper. We are excited to show everyone our progress and what we've learned!