This week, I worked on several aspects of the project. First, I finalized my method for post-processing the acceleration data. With help from our TA, Janet Li, I developed a way to detect the direction of movement from my accelerometer recordings on the Y and Z axes. A direction (up, down, left, or right) is detected when the acceleration on an axis rises above the positive threshold or falls below the negative threshold that I set for that axis. Once a direction is known, the algorithm recognizes that the sensor is returning to rest when the deceleration pushes the reading past the opposite threshold. Since the readings at rest stay in the range of 0.3 to 0.5, I set the positive and negative thresholds to 2 and -2. I chose these values after multiple trials, keeping the margin wide because a person’s hand naturally shakes and can produce readings anywhere between -2 and 2 that should not register as a movement. Direction detection is critical because it triggers the conversion of acceleration into velocity. To perform this conversion, I used the trapezoidal rule for numerical integration. Because I sample acceleration every 10 milliseconds, each trapezoid spans one 10 ms subinterval. The trapezoidal rule is given by the following equation:

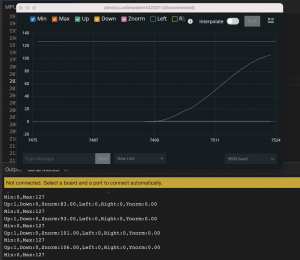

Here, the f(x) values are the acceleration samples taken every 10 milliseconds, and Δx = (b - a)/N is the width of each subinterval, which is 10 ms in my case. Because a new term joins the sum each time a sample arrives, I compute the integral incrementally, adding one trapezoid per sample, and update the instantaneous velocity while the user is making a movement. After the conversion, I map the velocity range onto an output range of 0 to 127, which is the range of MIDI data values. Tomorrow, I plan to meet Harry in the lab to work on establishing a connection between the Micro and the Due, and the processed data will be very useful for that implementation. The picture below is a screenshot of the MIDI data produced using the accelerometer/gyroscope sensor.

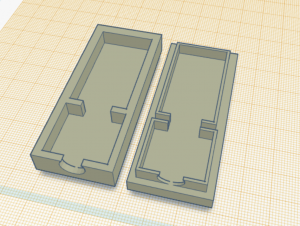
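To make the processing steps above concrete, here is a minimal Python sketch of threshold-based direction detection, running trapezoidal integration, and scaling to the MIDI range. It is an illustration under stated assumptions, not the code I actually run: the names `AxisTracker`, `to_midi`, and `direction_label`, the axis-to-direction labels, the exact return-to-rest rule, and the full-scale velocity `V_MAX` are all hypothetical. Only the ±2 thresholds, the 10 ms sampling period, and the 0 to 127 output range come from the work described above.

```python
"""Sketch: threshold-based direction detection, running trapezoidal
integration of acceleration into velocity, and scaling to MIDI 0-127.

Assumptions (hypothetical, not from the report): all names below, the
axis-to-direction labels, the return-to-rest rule details, and V_MAX.
"""

THRESHOLD = 2.0   # +/- threshold used in the report
DT = 0.010        # 10 ms sampling period, in seconds
V_MAX = 1.0       # hypothetical velocity that maps to MIDI 127

# Hypothetical labels; the real orientation depends on sensor mounting.
DIRECTION_NAMES = {
    ("z", 1): "up", ("z", -1): "down",
    ("y", 1): "right", ("y", -1): "left",
}


def direction_label(axis, direction):
    """Name a detected direction for an axis ('y' or 'z')."""
    return DIRECTION_NAMES.get((axis, direction), "rest")


class AxisTracker:
    """Tracks one accelerometer axis (e.g. Y or Z)."""

    def __init__(self, threshold=THRESHOLD, dt=DT):
        self.threshold = threshold
        self.dt = dt
        self.direction = 0         # +1 or -1 while moving, 0 at rest
        self.decelerating = False  # True once the opposite threshold is crossed
        self.velocity = 0.0
        self.prev = 0.0            # previous acceleration sample

    def update(self, accel):
        """Feed one sample; return the current velocity estimate."""
        if self.direction == 0:
            if abs(accel) <= self.threshold:
                # Still at rest: remember the sample, report no motion.
                self.prev = accel
                return 0.0
            # Threshold crossed: a movement in this direction has started.
            self.direction = 1 if accel > 0 else -1
            self.velocity = 0.0
        elif accel * self.direction < -self.threshold:
            # Reading past the opposite threshold: the hand is decelerating.
            self.decelerating = True
        elif self.decelerating and abs(accel) <= self.threshold:
            # Deceleration finished: back to rest, reset the integral.
            self.direction = 0
            self.decelerating = False
            self.velocity = 0.0
            self.prev = accel
            return 0.0

        # Trapezoidal step over one sampling interval:
        # v_k = v_{k-1} + dt * (a_{k-1} + a_k) / 2
        self.velocity += self.dt * (self.prev + accel) / 2.0
        self.prev = accel
        return self.velocity


def to_midi(velocity, v_max=V_MAX):
    """Linearly scale |velocity| onto the MIDI range 0-127, clamped."""
    value = round(abs(velocity) / v_max * 127)
    return max(0, min(127, value))


if __name__ == "__main__":
    tracker = AxisTracker()
    # Made-up samples: rest, a push, the braking phase, rest again.
    for a in [0.4, 0.4, 3.0, 3.0, -3.0, 0.4]:
        v = tracker.update(a)
        print(f"accel={a:+.1f}  velocity={v:.3f}  midi={to_midi(v)}")
```

Resetting the velocity each time the tracker returns to rest keeps integration error from carrying over between movements. The resting offset of 0.3 to 0.5 is still integrated during a movement, though, so subtracting a measured bias before integrating may reduce drift.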

I also worked on the glove design this week. Our team created CAD files for ArUco markers, and TechSpark helped us 3D print them. We also printed a casing for the Micro. The markers printed correctly, but the Micro did not fit in the casing, so I am redesigning it and will request another print next week. The picture below is the mockup design of the case.

We attached the ArUco markers to the glove with epoxy and tested the glove with the Jetson. The picture below is a photo of the glove with the markers attached.

Alongside the 3D printing, I also mounted the webcam on the helmet. Fortunately, the webcam has a screw hole on its bottom, so I hand-drilled a quarter-inch hole in the front of the helmet and used a nut to hold the camera in place.

Despite all this work, I am slightly behind schedule. I had planned to test the pressure sensor this week, but designing the glove and helmet took longer than I expected. I am confident in the data I have obtained from the pressure sensor so far, so I expect to run the tests on it next week, along with the testing protocol for the accelerometer/gyroscope sensor. I now feel confident that my components are ready to be tested for validation. If serial communication and data transfer between the Micro and the Due succeed tomorrow, I hope to show the two subsystems producing sound at the Interim Demo next Tuesday.