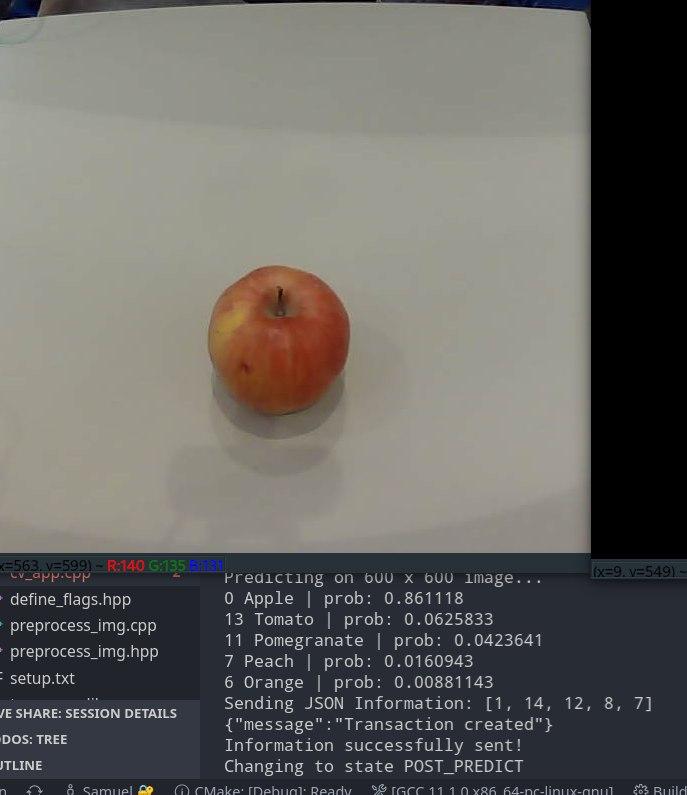

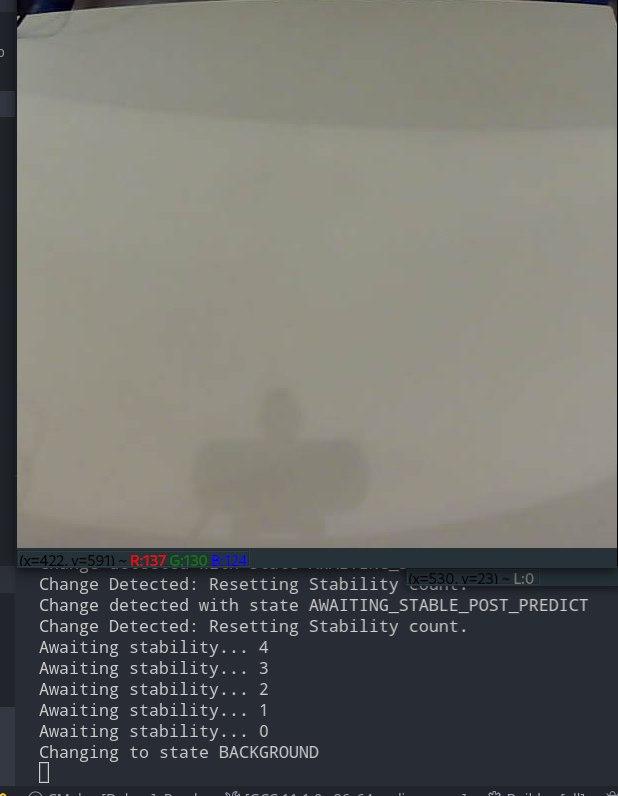

Last week, we used the saws and equipment from Carnival to cut our wood into usable pieces. This week, we finally assembled those pieces into a folding platform that supports our computer vision algorithm. The platform gives the camera a plain, light-colored background that is easy to mask out and makes changes easier to detect. For example, when someone walks by, the camera won't register a significant change because it can't see their feet; it only picks up small changes in lighting. The platform also makes the apparent size of produce more consistent: users will know to hold each item at a consistent height (next to the platform), which makes classification and segmentation much easier.
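To illustrate why a plain, light background helps, here is a minimal Python sketch. It assumes grayscale frames stored as lists of rows of 0-255 pixel values. This is not Samuel's actual pipeline: the function names and the thresholds (`background_min`, `diff_threshold`, `min_fraction`) are hypothetical. Pixels darker than the bright platform count as foreground. A frame only counts as "changed" when enough pixels differ strongly from a reference frame, so a small, uniform lighting shift is ignored.

```python
from typing import List

# Hypothetical representation: a grayscale frame as rows of 0-255 ints.
Frame = List[List[int]]


def foreground_mask(frame: Frame, background_min: int = 200) -> List[List[bool]]:
    """Mark pixels darker than background_min as foreground (True).

    Assumes the platform appears as a bright, nearly uniform background,
    so anything noticeably darker is treated as an object in front of it.
    """
    return [[pixel < background_min for pixel in row] for row in frame]


def changed_fraction(reference: Frame, frame: Frame, diff_threshold: int = 40) -> float:
    """Fraction of pixels whose brightness differs from the reference
    frame by more than diff_threshold (small lighting shifts fall below it)."""
    total = 0
    changed = 0
    for ref_row, row in zip(reference, frame):
        for ref_px, px in zip(ref_row, row):
            total += 1
            if abs(px - ref_px) > diff_threshold:
                changed += 1
    return changed / total if total else 0.0


def item_present(reference: Frame, frame: Frame,
                 min_fraction: float = 0.05, diff_threshold: int = 40) -> bool:
    """Hypothetical decision rule: report a change only when a meaningful
    share of the frame differs strongly from the empty-platform reference."""
    return changed_fraction(reference, frame, diff_threshold) >= min_fraction
```

On a 4x4 reference frame of brightness 230, a uniform dip to 215 gives a changed fraction of 0.0. A dark 2x2 object gives 0.25 and is reported as present.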

The folding design is a small convenience: when scanning is not in use, the platform folds away so the fridge setup takes up less floor space.

This week, I finished building the folding platform for the camera using the pieces we cut last week. We found assorted wood screws in TechSpark to attach the folding hinges. The screws were slightly too long, so I covered the protruding tips with hot glue so that nothing could catch on them.

We also bought white spray paint for the platform to make detection even easier. However, when Samuel ran the computer vision pipeline, it worked fine on the light-colored wood alone. I may still paint the platform next week for aesthetic purposes.

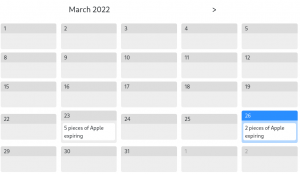

On the front end side, I have begun work on a nutrition information system in place of the recipe recommendation system. The recipe recommendation system was proving very difficult to integrate and test, since these timers are for very long periods of time and required extra frontend and backend integration. Instead, when clicking on items in the interface, it will display nutritional information about the item. For an apple, this will pop up:

I'm still integrating this feature and will work to finish it next week. Overall, we're in very good shape. The main remaining task for me is getting the front end up and running on the tablet next week.

The back-end API also received a significant amount of work. We added logic for items that are put back into the fridge, so the item count and expiry dates stay correct even when the same item is removed and later returned. This works by storing the fridge's current mode. New items are only added to the tally in “add” mode, i.e., when the user is loading new groceries from the store. Any item placed in the fridge in “track” mode is treated as a replacement for a previously removed item.
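Below is a minimal Python sketch of the add/track bookkeeping described above. It is not our actual API. The class and method names are hypothetical, and several behaviors are assumptions:

- shelf lives come from a per-item table;
- a removal takes the soonest-expiring copy;
- a track-mode placement restores the expiry of the most recently removed copy;
- a track-mode placement with no matching removal is ignored.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, List


class FridgeInventory:
    """Hypothetical sketch of add/track mode inventory bookkeeping."""

    def __init__(self, shelf_life_days: Dict[str, int]):
        # Assumption: a fixed shelf life (in days) per item type.
        self.shelf_life_days = shelf_life_days
        self.mode = "track"
        # Expiry dates of copies currently in the fridge, per item.
        self.in_fridge: Dict[str, List[date]] = defaultdict(list)
        # Expiry dates of copies taken out and not yet returned.
        self.removed: Dict[str, List[date]] = defaultdict(list)

    def set_mode(self, mode: str) -> None:
        if mode not in ("add", "track"):
            raise ValueError(f"unknown mode: {mode}")
        self.mode = mode

    def place(self, item: str, today: date) -> None:
        if self.mode == "add":
            # New groceries: start a fresh expiry countdown.
            expiry = today + timedelta(days=self.shelf_life_days[item])
        elif self.removed[item]:
            # Track mode: treat as a returned item and restore its expiry.
            expiry = self.removed[item].pop()
        else:
            # Assumption: ignore track-mode placements with no prior removal.
            return
        self.in_fridge[item].append(expiry)
        self.in_fridge[item].sort()

    def take_out(self, item: str) -> None:
        if not self.in_fridge[item]:
            return
        # Assumption: the soonest-expiring copy is the one removed.
        expiry = self.in_fridge[item].pop(0)
        self.removed[item].append(expiry)

    def count(self, item: str) -> int:
        return len(self.in_fridge[item])

    def expiries(self, item: str) -> List[date]:
        return list(self.in_fridge[item])
```

The sketch keeps the expiry dates of removed items instead of discarding them. That is what lets a returned item keep its original expiry date rather than being counted as a fresh purchase.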