Final Video: https://youtu.be/hp0plAxwQCk

30 Apr 22 – Team Status Report

Most of this week was spent preparing for the final presentation, demo and posters, and A LOT of testing. We also managed to install the fully integrated system on an actual fridge!

Since most of the individual work has already been completed, most of our time this week was spent testing our integrated system together and ironing out bugs on the CV, front-end and back-end sides. Besides this, we also worked on the final presentation and poster. Both the presentation and the poster seem to have turned out well, and we’re really excited for the final demo and to see how the project fares overall!

Next week, we will continue to work on the final video and poster. However, testing has also revealed some improvements and features that we could add, both on the frontend side (UX design, such as button sizes/placement) and on the backend side (email notifications). That being said, we are ahead of schedule (we are now working on reach goals and extra, non-essential features), and are really excited for our final demo and video!!!

30 Apr 22 – Samuel’s Status Report

Since I had completed quantity detection last week, there was not much left to do on the CV side of the project besides testing and experimenting with model training/data collection, which I did. I also helped out with installing the system onto an actual fridge:

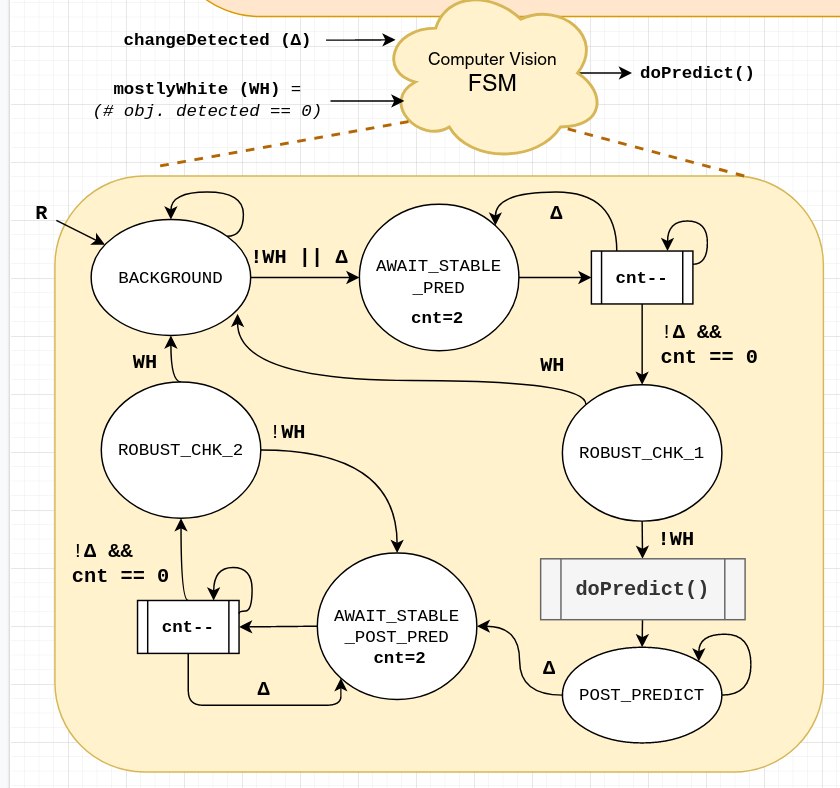

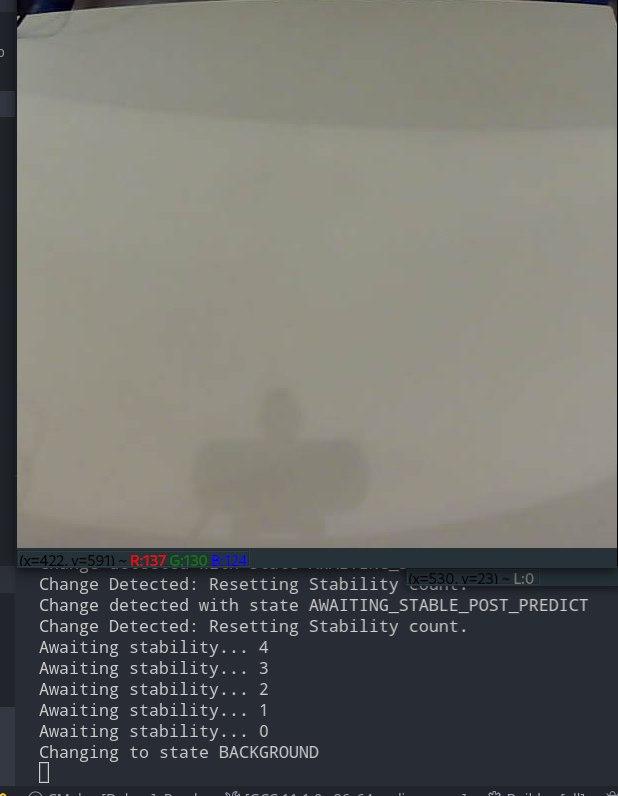

During testing, I found some edge cases and potential failure cases, and added more robustness checks. In particular, by using my white background detection, I fixed an issue where the FSM would move into the wrong state if the user removed fruit one by one from the fridge, or added more fruit once a prediction was done. The final FSM is shown in the figure below:

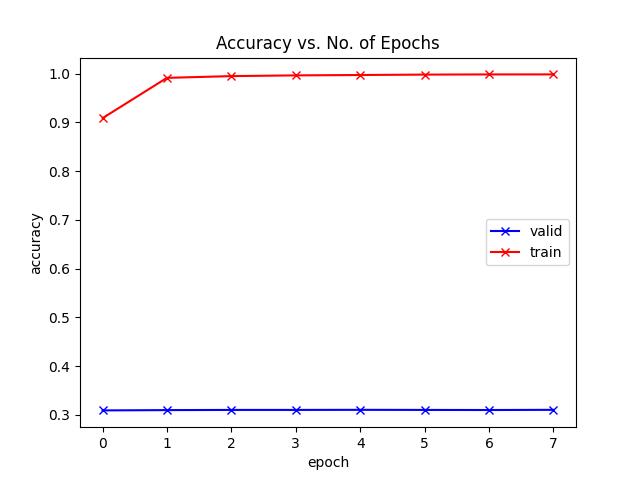

I also trained the model again with some new fake fruits, but the validation accuracy was still poor (although training accuracy was good). From the graph shown below, it seemed that the CNN was not learning the underlying features of the fruits, but was instead overfitting to the training data. Most likely, this was the result of not having enough data for the new classes, which caused confusion between fruits.

Next week, the focus will be on final testing of the integrated system (although we have already tested quite a bit, and the system seems to be fairly robust), and on preparation for the final video and demo. CV-wise, we are definitely ahead of schedule (basically done), since increasing the number of known fruit/vegetable classes was more of a reach goal anyway.

23 Apr 22 – Team Status Report

We made quite a bit of progress this week with our project, and are actually mostly prepared for a demo already 😀 We will focus our efforts on simple incremental changes + lots of testing.

We have thus far:


- Integrated the entire system with our tablet + Jetson

- Completed a benchmark accuracy and speed test

- Did extensive full-system bug-testing and fixed some bugs in our back-end code with returns and made the system more robust against incorrect quantity data (e.g. removing more fruits than there are in the fridge)

- Completed training of self-collected classes, including bell peppers, and are potentially looking at adding more classes.

- Debugged and fixed classification speed issues on the Jetson

- Spray-painted the background white to improve algorithm accuracy

- CV algorithm is now more robust against “false triggers”

- Nutritional information now available to user
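The guard against incorrect quantity data (e.g. removing more fruits than there are in the fridge) can be sketched as below. This is a minimal illustration, assuming a simple in-memory `dict` mapping item names to counts; `remove_items` is a hypothetical name, and the real back-end and its storage may look quite different.

```python
def remove_items(inventory: dict[str, int], item: str, quantity: int) -> int:
    """Remove up to `quantity` of `item` (hypothetical helper).

    Returns how many were actually removed, so the count can never go negative.
    """
    if quantity < 0:
        raise ValueError("quantity must be non-negative")
    available = inventory.get(item, 0)
    removed = min(quantity, available)  # clamp to what is actually stored
    remaining = available - removed
    if remaining:
        inventory[item] = remaining
    else:
        inventory.pop(item, None)  # drop items that reach zero
    return removed
```

Returning the number actually removed lets the UI report something sensible (e.g. "removed 2 apples") instead of storing a negative count.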

That being said, we have made some interesting findings and identified possible improvements that would make our product even more refined:

- Adding email or in-app notifications to remind the user that their food is expiring soon (backend + frontend)

- Quantity detection using simple white background thresholding and GrabCut (CV, backend interface already in place)

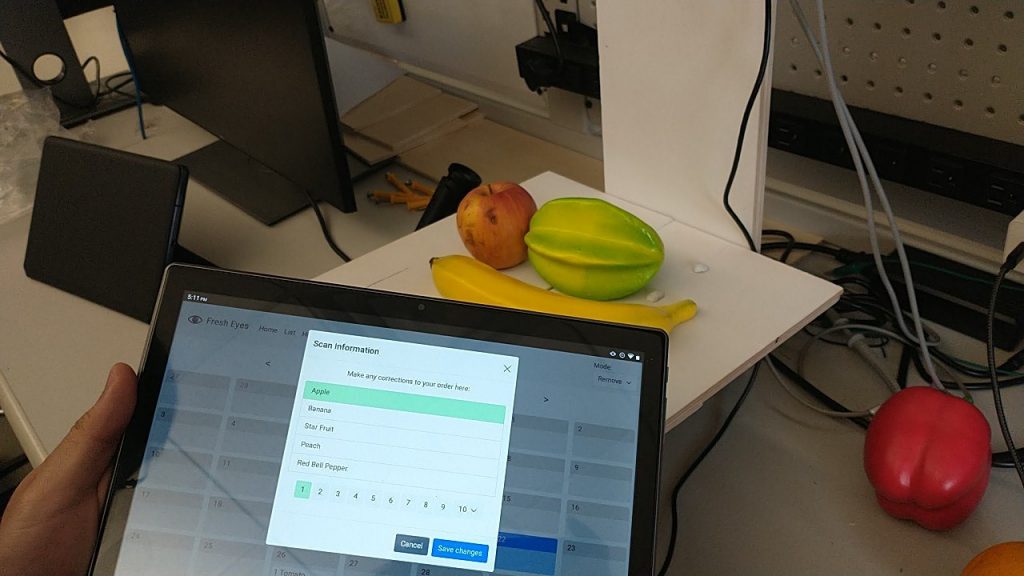

- Different fruit type selection (see Figure above) (frontend + CV)

- Smart recipe detection

- EVEN MORE TESTING TESTING TESTING.

We are currently comfortably ahead of schedule but will keep pressing on in refining our final product 🙂

23 Apr 22 – Samuel’s Status Report

This week, we focused on integration and testing, and I also made some minor improvements to the CV algorithm, and attempted to collect more data for training.

Integration with Jetson

The Jetson was surprisingly annoying and difficult to set up, and I spent at least 10 hours just trying to get my CV code to run properly on it. In particular, installing dependencies like PyTorch and OpenCV took a long time: we needed to compile many of them from source (which came with plenty of its own errors) because the Jetson is an ARM (aarch64) system, whereas most packages are precompiled for x86_64. These issues were compounded by the fact that the Jetson was slightly old (running an older version of Ubuntu, with limited RAM and memory capacity).

Even after I/we* managed to get the code up and running on the Jetson, we had significant problems with its speed. At first, I/we tried various fixes, including turning off visual displays and killing processes. Eventually, we realized that the bottleneck was … RAM!!!

What we discovered was that the Jetson took 1-2 minutes to make its first prediction, but then ran relatively quickly (~135ms) after that. For comparison, my computer runs a single prediction in ~30ms. While Alex was debugging with a display of system resources, we pinpointed the issue: there was not enough RAM when loading and using the model for the first time. The model was just too big to fit into RAM, and a major bottleneck came from having to move some of that memory into swap. Once that was complete, the algorithm ran relatively quickly. However, because part of the memory still lives in swap rather than in the faster RAM, predictions on the Jetson still ran slower than on my computer. Even so, it runs fast enough (~135ms) after this initial “booting” stage, which has now been integrated into the “loading” step of my CV code.

*while I was in charge/did most of the debugging, my teammates were also instrumental in helping me get it up and running (it is after all, Alex’s Jetson), so credit should be given where it is due 🙂

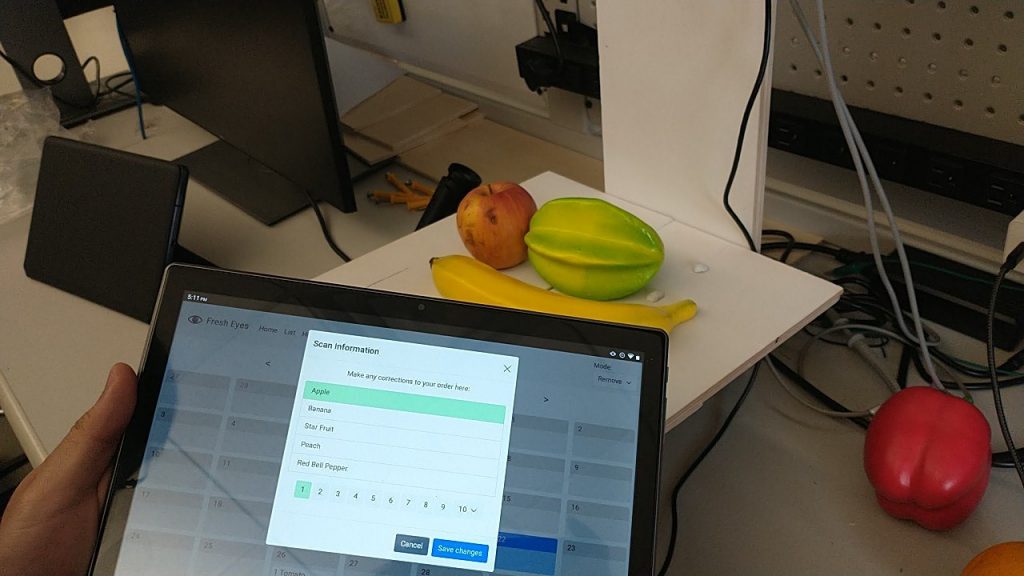
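Folding the slow first prediction into the “loading” step amounts to running one throwaway prediction right after the model is loaded. A minimal sketch of that pattern is below, assuming the model is any callable that takes an input and returns scores; `load_and_warm_up`, `load_model` and `dummy_input` are hypothetical names. With PyTorch, the dummy input would typically be a zero tensor of the real input shape, passed under `torch.no_grad()`.

```python
import time
from typing import Callable, Sequence

# Hypothetical stand-in for "a loaded model": input -> list of class scores
Predictor = Callable[[Sequence[float]], list[float]]


def load_and_warm_up(load_model: Callable[[], Predictor],
                     dummy_input: Sequence[float]) -> tuple[Predictor, float]:
    """Load the model, then run one discarded prediction.

    On a memory-constrained device the first call can be very slow (e.g. while
    memory is shuffled into swap); doing it here keeps that delay out of the
    user's first scan. Returns the model and how long the warm-up took.
    """
    model = load_model()
    start = time.perf_counter()
    model(dummy_input)  # result ignored; we only want the one-off cost paid now
    warm_up_seconds = time.perf_counter() - start
    return model, warm_up_seconds
```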

CV Training

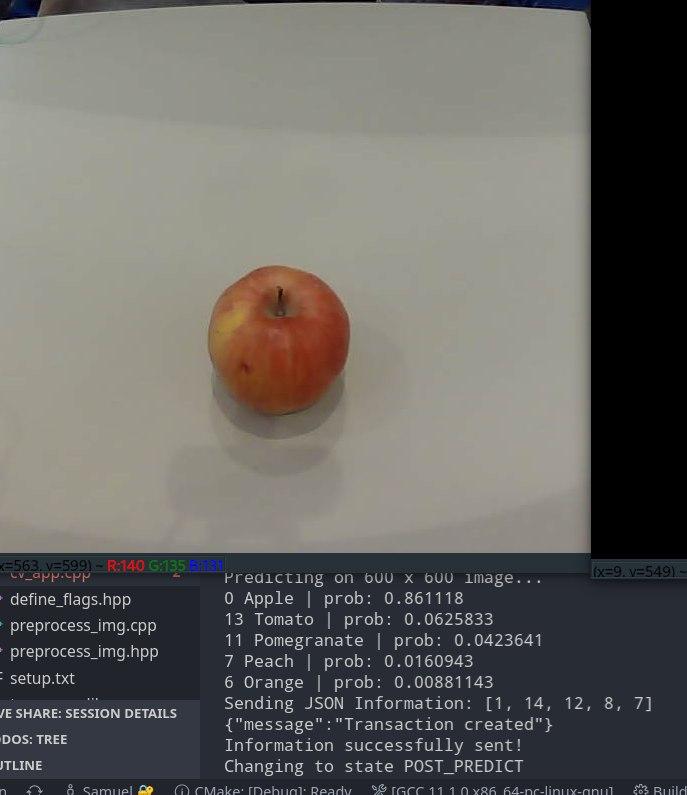

While trying to fix/install dependencies on the Jetson, I also attempted in parallel to collect more data with the new fake fruits that came in, including a new “Lemon” class. However, our model could not converge properly. I believe this was because some of the fake fruits/vegetables (like the peach and pear) were not very high-quality and looked fairly different from the ones in the original dataset (and in real life), so the model performed poorly when validated against our original test images. Next week, I aim to try training only on the fake fruits/vegetables that look realistic enough (like the apple, lemons and eggplant). That being said, the algorithm already performs very well on some of the semi-realistic fake fruit, like the starfruit and banana shown in Figure 1 below.

During testing, I was pleasantly amazed by the neural network’s ability to detect multiple fruits despite being a classifier, by assigning high probabilities to each of them. As can be seen in Figure 1 below, the fruits being captured are Apple, Banana and Starfruit, which the network outputs as the top 3 probabilities on the screen.

Figure 1: Multiple Fruits Detection
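The “top 3 probabilities” come from turning the classifier’s raw scores into a probability distribution (softmax) and ranking it. Because the probabilities must sum to 1, several fruits in view end up sharing the probability mass, and a top-k list surfaces all of them (the same idea gives the top 5 predictions sent to the backend). A minimal sketch, where the labels and scores are made up for illustration:

```python
import math


def top_k(logits: list[float], labels: list[str], k: int = 3) -> list[tuple[str, float]]:
    """Softmax the raw classifier scores and return the k most likely labels."""
    m = max(logits)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Illustrative scores only, not real network output
labels = ["Apple", "Banana", "Starfruit", "Pear"]
for name, p in top_k([2.0, 1.5, 1.0, -3.0], labels):
    print(f"{name}: {p:.2f}")
```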

Minor Improvements – White Background Detection

After spray-painting the platform with Alex, we now have a good white background to work with. Using this, I wrote simple (and efficient) code that detects whether the background is mostly white by converting the image to the HSL representation and checking how many pixels are above a certain threshold.
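A minimal sketch of that white-background check is below. It assumes the threshold is applied to the lightness channel, and both `lightness_threshold` and `min_fraction` are made-up values that would need tuning on the real platform. It uses Python’s `colorsys` on a list of RGB tuples so it runs anywhere; with OpenCV, the equivalent would be converting the frame with `cv2.cvtColor(frame, cv2.COLOR_BGR2HLS)` and thresholding channel 1 (lightness).

```python
import colorsys
from typing import Iterable


def is_mostly_white(pixels: Iterable[tuple[int, int, int]],
                    lightness_threshold: float = 0.8,
                    min_fraction: float = 0.9) -> bool:
    """Return True if at least `min_fraction` of the RGB pixels (0..255) are bright.

    Brightness is judged on the lightness (L) channel of the HLS/HSL representation.
    Both thresholds are illustrative assumptions.
    """
    total = 0
    bright = 0
    for r, g, b in pixels:
        _, lightness, _ = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
        total += 1
        if lightness > lightness_threshold:
            bright += 1
    return total > 0 and bright / total >= min_fraction
```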

Since my algorithm currently uses motion (i.e. pixel changes between frames) to switch between the states of my internal FSM (background, prediction, wait for the user to take off their fruit), this white background detection adds an important level of robustness against unforeseen changes, like an accidental hand swipe, lighting changes or extreme jerks to the camera. Otherwise, the CV system might accidentally go into a state that it is not supposed to, such as awaiting fruit removal when there is no fruit there, and confuse/frustrate the user.
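To illustrate how the white check can gate the motion-driven FSM, here is a simplified sketch. The state names and transitions are hypothetical and not a copy of the actual FSM: each frame supplies two booleans, `motion` (did the frame change?) and `white` (is the background mostly white?), and “stable” is reduced to a single motion-free frame for brevity, where a real system would require several.

```python
from enum import Enum, auto


class State(Enum):
    BACKGROUND = auto()        # empty platform, waiting for an item
    SETTLING_ITEM = auto()     # change seen, waiting for the scene to stabilise
    AWAITING_REMOVAL = auto()  # prediction made, waiting for the platform to be cleared
    SETTLING_CLEAR = auto()    # change seen after a prediction, waiting for stability


def step(state: State, motion: bool, white: bool) -> tuple[State, bool]:
    """Advance the (hypothetical) FSM by one frame; returns (next_state, run_prediction)."""
    if state is State.BACKGROUND:
        # Motion while the background still looks white (e.g. lighting flicker) is ignored
        if motion and not white:
            return State.SETTLING_ITEM, False
        return State.BACKGROUND, False
    if state is State.SETTLING_ITEM:
        if motion:
            return State.SETTLING_ITEM, False
        if white:
            # Scene settled but nothing is on the platform (e.g. a hand swipe)
            return State.BACKGROUND, False
        return State.AWAITING_REMOVAL, True
    if state is State.AWAITING_REMOVAL:
        return (State.SETTLING_CLEAR if motion else State.AWAITING_REMOVAL), False
    # State.SETTLING_CLEAR
    if motion:
        return State.SETTLING_CLEAR, False
    # Only go back to BACKGROUND once the platform is really empty; removing
    # fruit one by one (or adding more) keeps us waiting for removal
    return (State.BACKGROUND if white else State.AWAITING_REMOVAL), False
```

The key design choice is that the white check, not motion alone, decides whether the platform is empty, so a transient disturbance cannot leave the system waiting for a fruit that is not there.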

Future Work

We are currently far ahead of schedule in terms of what we originally wanted to do (robust CV algorithm, fruit + vegetable detection), but there are a few things left to do/try:

- Quantity detection: This can be done by using white background segmentation (since I already have a basic algorithm for that) + flood fill to get a rough count of the number of fruits on the platform. Right now, our algorithm is robust to multiple fruits, and there is already an API interface for quantity.

- Adding more classes/training: As mentioned above, I could try retraining the model on new classes using the fake fruits/vegetables + perhaps some actual ones from the supermarket. Sadly, my real bell peppers and apple are already inedible at this point 🙁
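The quantity idea above (segment out the white background, then flood fill) can be sketched as counting connected non-white regions in a binary mask. This is a minimal illustration, assuming the mask has already been produced by some white-background threshold; `count_objects` and `min_area` are hypothetical. Touching fruits would merge into one region, so this only gives a rough count. In OpenCV, `cv2.connectedComponentsWithStats` does the same labelling far faster.

```python
from collections import deque


def count_objects(mask: list[list[bool]], min_area: int = 1) -> int:
    """Count 4-connected foreground regions (True = non-white pixel) via flood fill.

    Regions smaller than `min_area` pixels are treated as noise and ignored.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from this seed to measure one region
            area = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                count += 1
    return count
```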

Team Status Report – 16 Apr 22

This week we spent most of our time building the actual platform, bug testing and making minor incremental improvements on the system.

Figure 1: Example of Fully-Constructed Platform

Alex: Built the platform and installed the camera onto it. Readying the Jetson for integration. Started work on nutritional information.

Sam: Helped Alex with building of platform. Tested scanning + collected data on actual platform. Working on various normalization algorithms to improve illumination robustness.

Oliver: Conducted more extensive bug testing and fixed a number of bugs in the backend. Made expiry date calculations generate more robust and user-friendly output (i.e. granularity on a daily level instead of on a microsecond level).
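Daily granularity for expiry dates can be sketched by dropping the time of day before doing any arithmetic. This is an illustration only, with hypothetical function names and a made-up shelf life; the actual backend code may differ.

```python
from datetime import date, datetime, timedelta


def expiry_date(added_at: datetime, shelf_life_days: int) -> date:
    """Compute the expiry as a calendar date, discarding hours/minutes/microseconds."""
    return (added_at + timedelta(days=shelf_life_days)).date()


def describe_expiry(expiry: date, today: date) -> str:
    """Human-friendly, day-level description of how close an item is to expiring."""
    days = (expiry - today).days
    if days < 0:
        return f"expired {-days} day(s) ago"
    if days == 0:
        return "expires today"
    return f"expires in {days} day(s)"


# e.g. a fruit scanned at 15:42:07.123456 with an assumed 7-day shelf life
e = expiry_date(datetime(2022, 4, 16, 15, 42, 7, 123456), 7)
print(e, describe_expiry(e, date(2022, 4, 20)))  # 2022-04-23 expires in 3 day(s)
```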

We are currently on schedule but need to speed up the integration process to leave more time for testing.

Next week, we will work on integrating the full system by testing it with the Jetson and installing it on a real fridge door. We are also in the midst of training on our self-collected dataset, and will try out the resulting model next week.

Samuel’s Status Report – 16 Apr 22

This week I mostly helped Alex build the platform for the camera setup, and then attempted some basic tests on the setup. I am also currently training a model on the data collected in previous weeks (and some from this week, after getting the platform up).

Figure 1: Example of Fully-Constructed Platform

Figure 2: Example of self-collected data (here, of yellow squash) taken on new platform

More notably, I have been trying to improve the robustness of the change detector, since I anticipate issues with the CV algorithm falsely detecting a change due to illumination changes from the opening of the door, a person walking around, etc.

To this end, I tried using division normalization, where we divide the image by a very blurred version of the same image as per https://stackoverflow.com/a/64357685/3141253
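The idea of division normalization is that slowly varying illumination shows up in the heavily blurred image too, so dividing by it cancels the lighting out. The sketch below uses a simple box (mean) blur on a grayscale 2D list so that it is self-contained; this is a swap-in for simplicity, since the linked answer uses OpenCV with a large blur, and `radius` and `scale` are illustrative. It also hints at the sensitivity problem described next: any region that matches its surroundings (a hand on similarly coloured wood) normalizes to the same value as the background.

```python
def box_blur(img: list[list[float]], radius: int) -> list[list[float]]:
    """Mean filter with a (2*radius+1)^2 window, clipped at the image borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            y0, y1 = max(0, y - radius), min(h, y + radius + 1)
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            total = sum(img[yy][xx] for yy in range(y0, y1) for xx in range(x0, x1))
            out[y][x] = total / ((y1 - y0) * (x1 - x0))
    return out


def divide_normalize(img: list[list[float]], radius: int = 15,
                     scale: float = 255.0) -> list[list[float]]:
    """Divide each pixel by its heavily blurred neighbourhood, scale, and clip to `scale`."""
    blurred = box_blur(img, radius)
    return [[min(scale, scale * p / b) if b > 0 else 0.0 for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]
```

A uniformly lit surface maps to the same output whether it is dim or bright, which is exactly the illumination robustness (and the loss of sensitivity) observed in practice.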

While this made the algorithm very robust against illumination changes, it also reduced the sensitivity significantly. One particularly problematic case occurred because my skin color was similar to the birch wood color, so my hand was “merged” into the background. When tested with a red apple, however, there was a better response, but not always enough to trigger changes. With this in mind, we plan to color the platform white, in the hope of creating a larger difference between natural colors, like those of skin and fruit, and the artificially white background.

Another alternative is to play around with the saturation channel of the HSV representation. Or, since the main issue is going to be falsely detecting a change that wrongly moves the FSM into a background state, we could check for a genuine extra object using a Harris corner detector.

Next week, in addition to trying the aforementioned items, I will also be helping to integrate the CV algorithm on the Jetson. We are currently on schedule but might need to speed up the integration component so we can spend more time on robustness testing.

Samuel’s Status Report – 9 Apr 22

This week we successfully demoed an integrated, working system where a user could scan a fruit, which would then be stored in a database and sent to the UI for modification and subsequent entry into the calendar.

I was very proud of the CV system’s robustness and smooth workflow, as it was able to consistently scan different fruits in different environments with reasonable accuracy.

With what is basically an MVP already, we are definitely ahead of schedule, but could add more features and improvements.

In particular, my next step is to collect more of our own data and train on it, since the original dataset had no vegetables or obscure fruits. I spent most of the post-demo time this week collecting my own dataset; in fact, I collected over 800 images each for red peppers, yellow peppers and yellow squash, under various lighting conditions, positions, occlusions, shadows, etc.

Examples of dataset images:

Next week, I will continue collecting dataset images on more vegetables like carrots, and begin work on making the hardware platform/stand for our integrated system. When the Jetson is ready, I will also begin work on that.

Team Status Report – 9 Apr 22

This week, we were able to make our preliminary demo which went quite well. We were able to show the robustness of our CV algorithm and the full integration of our system (front and back end), through the successful scanning and adding of fruit to the database, and having the added fruit show up on the UI.

There were some slight hiccups during the actual presentation, in particular when we tried to show the remove feature that was added in a rush without much testing. In the actual demo/final presentation this will not happen, and there will probably be some sort of code freeze so that we will only demo robust and tested features.

Since we basically have an MVP complete already, we are slightly ahead of schedule, and have been and are currently working on making the chassis/hardware and adding on some key features.

Summary of some progress this week

- Samuel: Collecting more data for various fruits and vegetables

- Oliver: Fixed remove feature bugs

- Alex: UI touchups, cut wood for chassis and platform

Next week,

- Samuel, Alex: Work on chassis/platform, integrate Jetson

- Oliver: Recipes and/or nutritional information, bug-test

Samuel’s Status Report – 2 Apr 22

This week was an extremely productive week, and I was able to get a fully working CV system up and running, with various features such as motion detection, stability checks and sending the top 5 predictions to the backend via the API.

FSM – Motion Detection/Stability Check

Last week, I was able to get basic background subtraction/motion detection working, which compares the current frame with the previous one to check for changes. This week, I created a simple FSM that uses this motion detection algorithm to check for changes indicating that a user wants a prediction, waits for stability, and predicts on that stable image. Then, when the user removes the fruit, the algorithm similarly detects that change, waits for stability, and returns to a “background state”.
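The frame-to-frame comparison and the “wait for stability” step can be sketched as below. This is a minimal illustration on grayscale 2D lists (in OpenCV, `cv2.absdiff` computes the per-pixel difference); `frame_changed`, `StabilityTracker` and all threshold values are hypothetical and would need tuning.

```python
def frame_changed(prev: list[list[int]], curr: list[list[int]],
                  pixel_threshold: int = 25, min_changed_fraction: float = 0.02) -> bool:
    """Report motion if enough pixels differ noticeably between consecutive frames."""
    changed = 0
    total = 0
    for prow, crow in zip(prev, curr):
        for p, c in zip(prow, crow):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= min_changed_fraction


class StabilityTracker:
    """Declare the scene stable after `frames_required` consecutive motion-free frames."""

    def __init__(self, frames_required: int = 10) -> None:
        self.frames_required = frames_required
        self.still_frames = 0

    def update(self, changed: bool) -> bool:
        # Any motion resets the counter; otherwise count one more still frame
        self.still_frames = 0 if changed else self.still_frames + 1
        return self.still_frames >= self.frames_required
```

Predicting only once the tracker reports stability is what avoids classifying a blurry, mid-motion frame.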

This FSM allows for a stable and robust detection of an item, as opposed to the initial algorithm that performed predictions on a potentially-blurry “delta image”.

Fig 1: Prediction after stability

Fig 2: Background State after stability

Fig 3: Ready for next prediction

API Integration

After Oliver got a simple API working, it was time to integrate the CV system with the backend. For this, I used the curlpp library to send an HTTP request containing a JSON array of item IDs to the server/backend after a successful prediction.
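For illustration, the same request can be sketched with Python’s standard `urllib.request` instead of curlpp (a swapped-in library, not the code actually used). The endpoint URL, the POST method and the helper names are assumptions; only “a JSON array of item IDs” comes from the description above.

```python
import json
import urllib.request

# Hypothetical endpoint; the real backend URL/route is not given here
API_URL = "http://localhost:8000/api/items"


def build_prediction_request(url: str, item_ids: list[int]) -> urllib.request.Request:
    """Build a POST request whose body is a JSON array of predicted item IDs."""
    body = json.dumps(item_ids).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_prediction(url: str, item_ids: list[int], timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code."""
    req = build_prediction_request(url, item_ids)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Separating request construction from sending makes the payload easy to check without a running server.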

Normalization

I also experimented with different normalization techniques (and re-trained the network accordingly), including ImageNet’s original normalization statistics, to improve the robustness of the algorithm against different lighting conditions. However, for some reason, the normalized models always performed worse than the non-normalized ones, so I am currently sticking to the unnormalized model, which seems surprisingly robust. It has worked amazingly well on different backgrounds, lighting conditions and environments (I’ve tested using monitors and chairs as platforms, at home and on different parts of campus, and it works well in all cases with few problems).
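For reference, ImageNet normalization scales each channel to [0, 1] and then applies per-channel mean/std (mean 0.485, 0.456, 0.406 and std 0.229, 0.224, 0.225, in RGB order); `torchvision.transforms.Normalize` does this on tensors. The sketch below shows it per pixel. One pitfall worth checking, offered as a possibility rather than a diagnosis: OpenCV frames are BGR, so the channels must be swapped, and the identical normalization must be applied at both training and inference time. `normalize_pixel` and its `bgr` flag are hypothetical.

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)  # RGB order
IMAGENET_STD = (0.229, 0.224, 0.225)   # RGB order


def normalize_pixel(pixel: tuple[int, int, int], bgr: bool = False) -> tuple[float, ...]:
    """Normalize one 0..255 pixel with ImageNet statistics.

    Set bgr=True for pixels read by OpenCV, which stores channels as B, G, R.
    """
    r, g, b = (pixel[2], pixel[1], pixel[0]) if bgr else pixel
    return tuple((v / 255.0 - m) / s
                 for v, m, s in zip((r, g, b), IMAGENET_MEAN, IMAGENET_STD))
```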

Demo + Future Work

Moreover, we were able to get our algorithm interfaced and working with the front-end and back-end in preparation for our demo next week. Now that we have a fully working and robust scanning system, we are very much on schedule, and in fact slightly ahead. My next steps are:

- Integrating with the Jetson hardware

- Collecting my own datasets

- Seeing if there are any bugs in my normalization code that affected its accuracy (because normalization should fix problems, not cause them)