This week we made a lot of progress as a group in preparation for demo day!

Each of us got our individual component working, AND we integrated them all together:

Individual Components

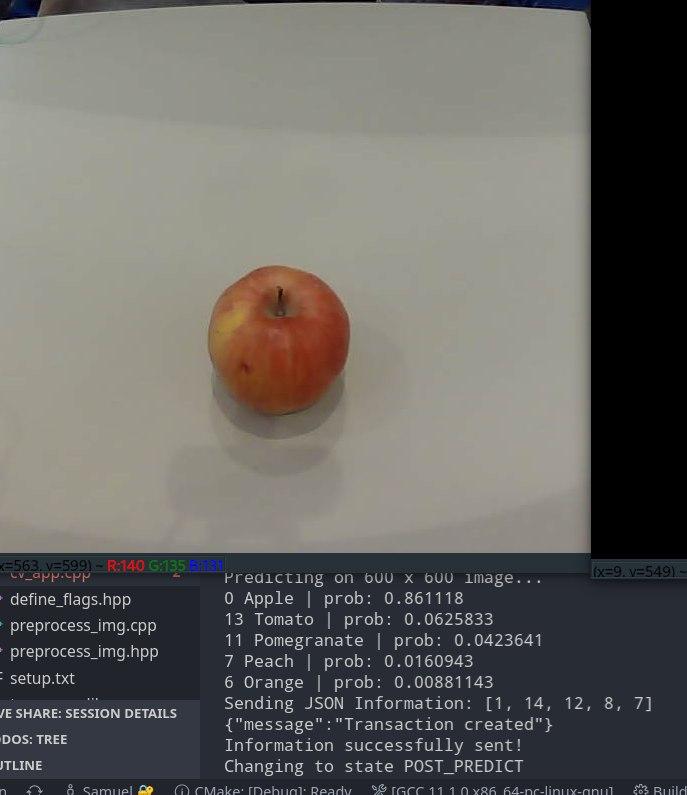

- Samuel: Completed the CV scanning system, which accurately detects when an item is scanned or removed and runs prediction once the image is stable.

- Alex: Trained the neural network (NN) and implemented websockets, which are now working and integrated with the API.

- Oliver: Made a lot of progress this week. Integrated almost all available back-end API endpoints with the front-end and the CV system, so the product now works end-to-end. Also implemented “live-streaming” of CV predictions to all front-ends.
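To illustrate what waiting for a stable image can involve, here is a minimal frame-differencing sketch. It is not necessarily how our CV system is implemented: it assumes grayscale frames arrive as flat lists of pixel intensities, and the names `StabilityDetector`, `motion_threshold` and `stable_frames` (and their default values) are hypothetical. Because a removal is also a change in the scene, the same logic fires after a fruit is taken away.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence


def mean_abs_diff(a: Sequence[int], b: Sequence[int]) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


@dataclass
class StabilityDetector:
    """Hypothetical motion/stability detector based on frame differencing.

    A frame counts as moving if it differs from the previous frame by more
    than motion_threshold on average. After motion has been seen, the
    detector waits for stable_frames consecutive still frames and then
    returns that frame as the one to run prediction on.
    """
    motion_threshold: float = 10.0   # assumed value, would need tuning
    stable_frames: int = 5           # assumed value, would need tuning
    _prev: Optional[List[int]] = field(default=None, init=False)
    _motion_seen: bool = field(default=False, init=False)
    _still_count: int = field(default=0, init=False)

    def update(self, frame: Sequence[int]) -> Optional[List[int]]:
        """Feed one frame; return it only if it is the stable frame to predict on."""
        frame = list(frame)
        prev, self._prev = self._prev, frame
        if prev is None:
            return None  # need two frames before we can compare
        if mean_abs_diff(prev, frame) > self.motion_threshold:
            # Something moved (fruit placed or removed): restart the still count.
            self._motion_seen = True
            self._still_count = 0
            return None
        if not self._motion_seen:
            return None  # scene has been still all along; nothing new to scan
        self._still_count += 1
        if self._still_count >= self.stable_frames:
            # Scene has settled after motion: trigger one prediction, then reset.
            self._motion_seen = False
            self._still_count = 0
            return frame
        return None
```

Feeding it two empty frames followed by five frames with a fruit (and `stable_frames=3`) returns a frame exactly once, on the third still frame after the fruit appears; requiring several consecutive still frames avoids predicting on a blurry image while a hand is still in view.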

Overall workflow

- The user places a fruit under the CV system's camera.

- The CV system detects movement and waits for a stable image.

- The CV system runs prediction on the image and sends the top 5 predictions to the back-end via the JSON API.

- The back-end stores the CV predictions and their relative ranks, and emits a “newTransaction” event to the client front-ends.

- The front-end receives the event from the API and prompts the user to confirm the CV’s predictions and commit the transaction to the back-end database.
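The hand-off between the CV system, the back-end and the front-end can be sketched as below. This is a minimal illustration under stated assumptions, not our actual API: the JSON field names (`predictions`, `rank`, `label`, `confidence`), the functions `top_k_payload`, `handle_predictions` and `confirm_transaction`, the in-memory `store` list, and the `emit` callback (standing in for the websocket broadcast) are all hypothetical.

```python
import json
from typing import Callable, Dict, List


def top_k_payload(scores: Dict[str, float], k: int = 5) -> str:
    """CV side: serialize the k highest-scoring classes as JSON, ranked from 1."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]
    predictions = [
        {"rank": i + 1, "label": label, "confidence": round(conf, 4)}
        for i, (label, conf) in enumerate(ranked)
    ]
    return json.dumps({"predictions": predictions})


def handle_predictions(body: str, store: List[dict],
                       emit: Callable[[str, dict], None]) -> dict:
    """Back-end side (hypothetical): store the ranked predictions as a pending
    transaction and notify connected front-ends with a newTransaction event."""
    data = json.loads(body)
    transaction = {
        "id": len(store) + 1,   # naive ID, only suitable for an in-memory sketch
        "status": "pending",    # becomes "confirmed" once the user confirms
        "predictions": sorted(data["predictions"], key=lambda p: p["rank"]),
    }
    store.append(transaction)
    emit("newTransaction", transaction)  # stand-in for a websocket broadcast
    return transaction


def confirm_transaction(store: List[dict], tx_id: int, label: str) -> dict:
    """Front-end confirmation (hypothetical): commit the label the user picked."""
    for tx in store:
        if tx["id"] == tx_id and tx["status"] == "pending":
            tx["status"] = "confirmed"
            tx["label"] = label
            return tx
    raise KeyError(f"no pending transaction with id {tx_id}")


if __name__ == "__main__":
    scores = {"apple": 0.62, "tomato": 0.2, "pear": 0.08,
              "orange": 0.05, "banana": 0.03, "kiwi": 0.02}
    store: List[dict] = []
    events: List[str] = []
    tx = handle_predictions(top_k_payload(scores), store,
                            lambda name, payload: events.append(name))
    print(events, [p["label"] for p in tx["predictions"]])
    print(confirm_transaction(store, tx["id"], "apple")["status"])
```

Keeping the transaction pending until the user confirms means a wrong top-1 prediction never reaches the database on its own; the stored ranks let the front-end offer the next-best guesses instead.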

We are happy with our current progress and product, as everyone on the team worked hard on their individual parts and we were able to integrate everything smoothly.

With a working, integrated MVP, our team is on track (in fact, slightly ahead of schedule) to deliver everything promised in earlier stages, and we may even achieve some of our stretch goals, such as a door sensor to tell whether an item is being added or removed.

The immediate tasks at hand (in order of priority) are:

- Item removal – The system currently supports only item addition and needs an interface for removal. This should be quick to complete.

- Hardware integration – The system currently runs on a laptop, but we want it to run on our Jetson, whose motherboard needs to be replaced. Once the new motherboard arrives, integration should be fairly quick.

- Chassis/Platform – The camera is currently propped up on white chairs or monitors, facing a white table or a table covered with a sheet of paper, but it eventually needs to be mounted on a proper chassis and platform. Even so, the algorithm works under these varied environmental conditions, which suggests it is quite robust!

- Data collection – The algorithm can currently detect 14 classes of fruit robustly (including apples, oranges, tomatoes, bananas, and pears), but some of these fruits are not commonly bought (e.g. pomegranate, kiwi, starfruit). We would need to collect some data of our own for vegetables such as carrots, bell peppers, broccoli, and cauliflower.

- Recipes – This was originally a stretch goal, but it is now something we may be able to do!