This week was extremely productive: I got a fully working CV system up and running, with motion detection, stability checks, and sending the top 5 predictions to the backend via the API.

FSM – Motion Detection/Stability Check

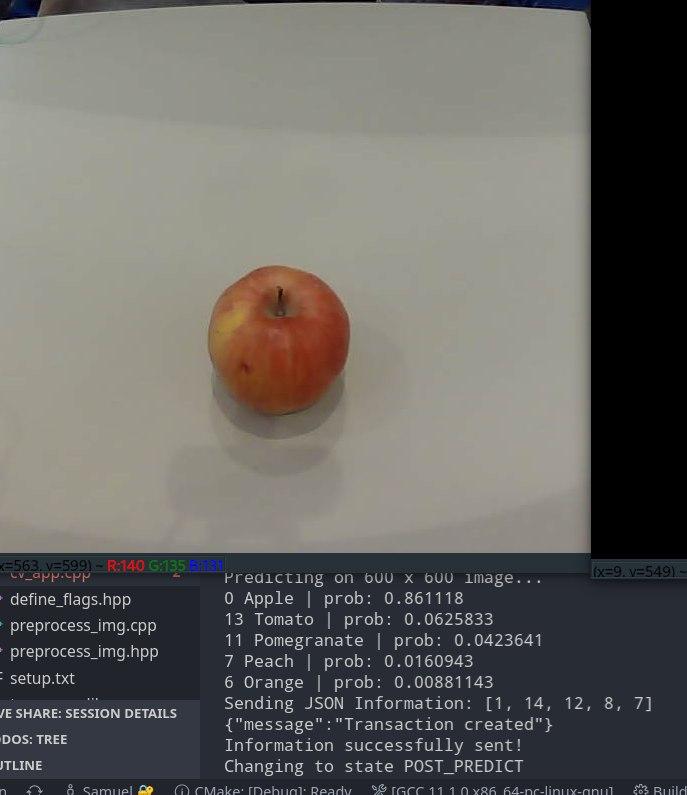

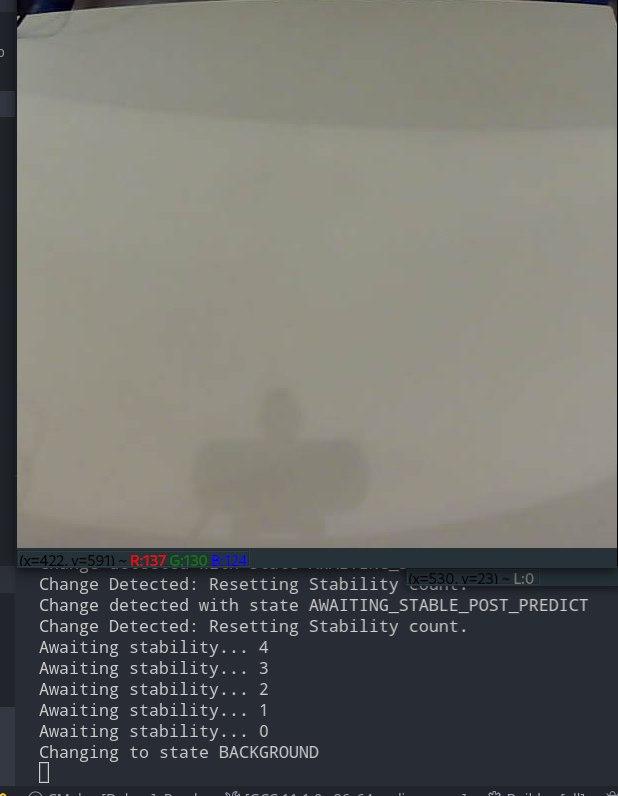

Last week, I got basic background subtraction/motion detection working; it compares the current frame with the previous one to check for changes. This week, I built a simple FSM on top of that motion detection. It watches for a change indicating that the user has placed an item to be predicted, waits for the scene to become stable, and runs the prediction on that stable image. When the user removes the fruit, the FSM detects that change in the same way, waits for stability again, and returns to a “background state”.

This FSM gives stable and robust detection of an item, unlike the initial algorithm, which ran predictions on a potentially blurry “delta image”.
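As a rough illustration of the idea, here is a minimal sketch of frame differencing feeding a four-state FSM. It is not the project's actual code: the state names, the `has_motion` helper, the thresholds, and the "N consecutive quiet frames" rule for stability are all assumptions made for the example.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical helper: compares two same-sized grayscale frames and reports
// motion when the fraction of pixels that changed by more than
// pixel_threshold exceeds changed_fraction. Both thresholds are assumed.
bool has_motion(const std::vector<std::uint8_t>& prev,
                const std::vector<std::uint8_t>& curr,
                int pixel_threshold, double changed_fraction) {
    if (prev.size() != curr.size() || prev.empty()) return false;
    std::size_t changed = 0;
    for (std::size_t i = 0; i < prev.size(); ++i) {
        const int diff = static_cast<int>(prev[i]) - static_cast<int>(curr[i]);
        if (std::abs(diff) > pixel_threshold) ++changed;
    }
    return static_cast<double>(changed) / prev.size() > changed_fraction;
}

// Illustrative state names, not taken from the project.
enum class State { Background, WaitItemStable, ItemPresent, WaitBackgroundStable };

class ScanFsm {
public:
    // stable_frames_required: consecutive motion-free frames needed before
    // the scene counts as stable (assumed parameter).
    explicit ScanFsm(int stable_frames_required)
        : required_(stable_frames_required) {}

    // Feed one motion flag per frame. Returns true only on the frame where
    // a prediction should run, i.e. when a newly placed item has settled.
    bool update(bool motion) {
        switch (state_) {
        case State::Background:
            if (motion) { state_ = State::WaitItemStable; quiet_ = 0; }
            return false;
        case State::WaitItemStable:
            if (settled(motion)) { state_ = State::ItemPresent; return true; }
            return false;
        case State::ItemPresent:
            if (motion) { state_ = State::WaitBackgroundStable; quiet_ = 0; }
            return false;
        case State::WaitBackgroundStable:
            if (settled(motion)) state_ = State::Background;
            return false;
        }
        return false;
    }

    State state() const { return state_; }

private:
    // Counts consecutive quiet frames; any motion resets the count.
    bool settled(bool motion) {
        quiet_ = motion ? 0 : quiet_ + 1;
        return quiet_ >= required_;
    }

    State state_ = State::Background;
    int required_;
    int quiet_ = 0;
};
```

In a capture loop, each new frame would be compared against the previous one with `has_motion`, and the result passed to `update`; the classifier only runs when `update` returns true.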

Fig 1: Prediction after stability

Fig 2: Background State after stability

Fig 3: Ready for next prediction

API Integration

Once Oliver had a simple API working, it was time to integrate the CV system with the backend. For this, I used the curlpp library to send an HTTP request containing a JSON array of item IDs to the backend after each successful prediction.
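To make the payload concrete, here is a small sketch of serializing the predicted item IDs into a JSON array. The function name `to_json_array` and the use of integer IDs are assumptions for illustration; the actual ID type and request format depend on the backend API.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Serializes item IDs as a compact JSON array, e.g. {3, 17, 42} -> "[3,17,42]".
// Integer IDs are an assumption; the real ID type may differ.
std::string to_json_array(const std::vector<int>& item_ids) {
    std::ostringstream out;
    out << '[';
    for (std::size_t i = 0; i < item_ids.size(); ++i) {
        if (i > 0) out << ',';  // comma between elements, none after the last
        out << item_ids[i];
    }
    out << ']';
    return out.str();
}
```

With curlpp, a string like this could be attached to a `curlpp::Easy` request as the body via `curlpp::options::PostFields`, with a `Content-Type: application/json` header set through `curlpp::options::HttpHeader`. Whether the real request is a POST is also an assumption here.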

Normalization

I also experimented with different normalization techniques (re-training the network for each one), including ImageNet’s original normalization statistics, to make the algorithm more robust to different lighting conditions. However, for reasons I have not yet pinned down, the normalized models always performed worse than the non-normalized ones, so for now I am sticking with the unnormalized model, which is surprisingly robust. It has worked very well across different backgrounds, lighting conditions and environments: I’ve tested it using monitors and chairs as platforms, at home and on different parts of campus, and it works in all cases with few problems.

Demo + Future Work

We also got our algorithm interfaced and working with the front-end and back-end, in preparation for our demo next week. Now that we have a fully working and robust scanning system, we are on schedule, and in fact slightly ahead. My next steps are:

- Integrating with the Jetson hardware

- Collecting my own datasets

- Checking whether a bug in my normalization code hurt its accuracy (normalization should fix problems, not cause them)