In last week’s status report, I mentioned that we needed to find a dataset that exposes the network to a wider variety of fruit.

This week was relatively productive: I trained the network on the “silvertray” dataset (https://www.kaggle.com/datasets/chrisfilo/fruit-recognition), and it produced fairly robust results on test data it had never seen before (a green label indicates a correct detection; accuracy on this test data was 100%).

Of course, the test images feature the same silver trays as the training images, so high accuracy is expected.

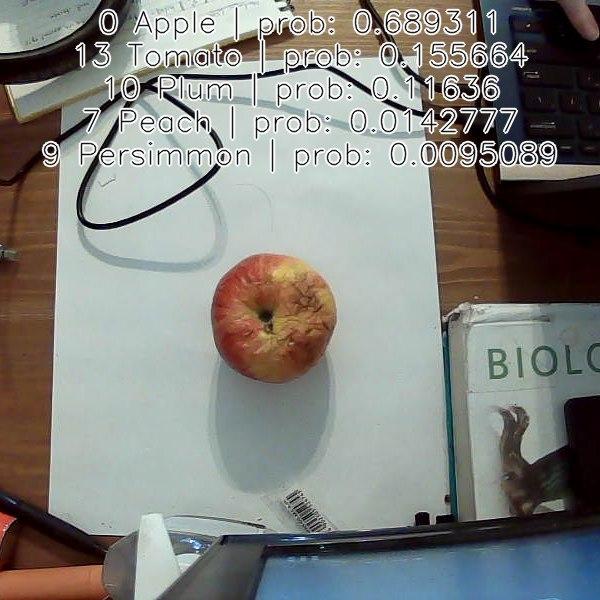

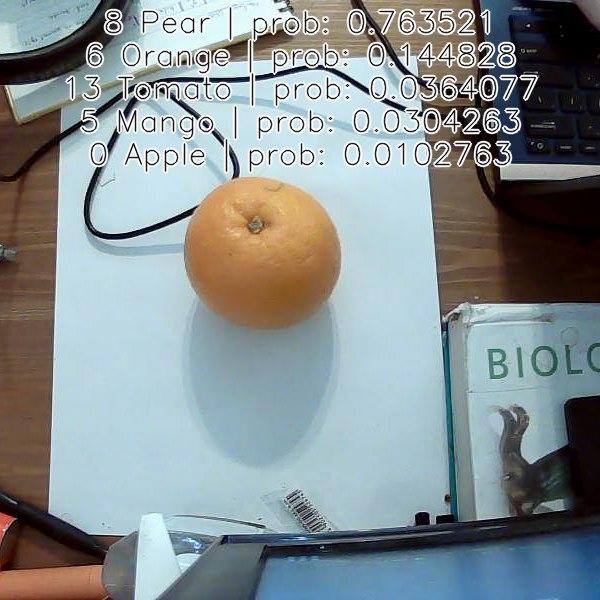

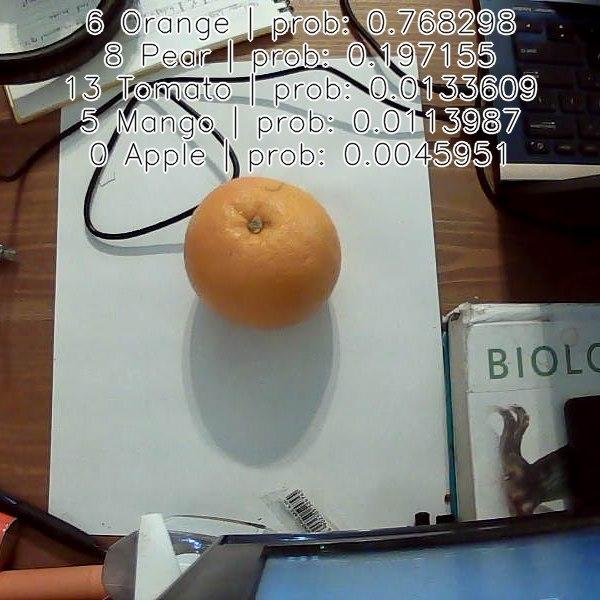

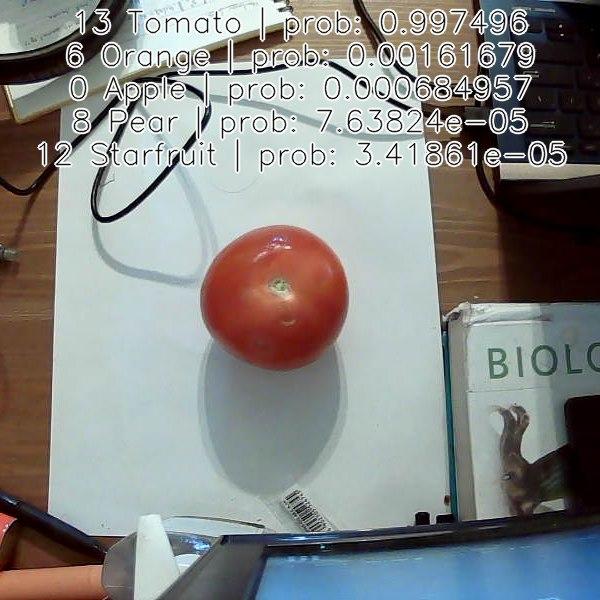

I then moved on to running detection on real-world data, using our C++ application with our webcam, and here are the results!

As the images above show, the network detects the fruit accurately in a real-world setting (including a noisy, non-white background, WITHOUT any segmentation applied). That said, there are still some misdetections, such as with the orange above, even though the frames are very similar. I describe the needed improvements below.

With this, we are on track toward a working prototype, although we could probably extend the network to more classes by training it on self-collected or web-sourced data.

Next week, we will begin integrating the various components, and I will work on several improvements to the current CV algorithm/setup:

- Add a “change detection” algorithm that detects significant changes in the frame; this will tell us when a fruit needs to be scanned.

- Normalize the image before processing; this should reduce issues with random lighting changes, but might require retraining the network.

- Build the actual rig with a white background and test the algorithm on it.

- If necessary, switch to a silver tray or silver-colored background similar to the network’s training set, and/or collect our own datasets.
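For the change-detection item, one simple starting point is frame differencing: keep a reference frame of the empty background, and report a change when a large enough fraction of pixels differs from it by more than a threshold. Below is a minimal, dependency-free C++ sketch of that idea over 8-bit grayscale buffers. The `GrayFrame` type, the function names `changedFraction`/`frameChanged`, and both threshold values are hypothetical placeholders, not part of our current application; converting webcam frames into this layout is assumed to happen elsewhere.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical frame type: a row-major 8-bit grayscale image.
struct GrayFrame {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> pixels;  // width * height intensity values
};

// Returns the fraction of pixels whose absolute difference from the
// reference frame is greater than pixelThreshold.
double changedFraction(const GrayFrame& reference, const GrayFrame& current,
                       int pixelThreshold) {
    assert(reference.width == current.width && reference.height == current.height);
    assert(reference.pixels.size() == current.pixels.size());
    if (current.pixels.empty()) return 0.0;

    std::size_t changed = 0;
    for (std::size_t i = 0; i < current.pixels.size(); ++i) {
        int diff = std::abs(static_cast<int>(current.pixels[i]) -
                            static_cast<int>(reference.pixels[i]));
        if (diff > pixelThreshold) ++changed;
    }
    return static_cast<double>(changed) / static_cast<double>(current.pixels.size());
}

// Flags a "significant change" (e.g. a fruit placed in view) when more than
// areaThreshold of the frame has changed. Both defaults are placeholder
// values that would need tuning on the real rig.
bool frameChanged(const GrayFrame& reference, const GrayFrame& current,
                  int pixelThreshold = 30, double areaThreshold = 0.05) {
    return changedFraction(reference, current, pixelThreshold) > areaThreshold;
}
```

The per-pixel threshold ignores small sensor noise, while the area threshold keeps a few flickering pixels from triggering a scan. In practice the reference frame would probably also need slow updating (for example a running average) so that gradual lighting drift is not mistaken for a new fruit; that refinement is left out of this sketch.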
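For the normalization item, one common option is per-image, per-channel standardization: subtract each channel’s mean and divide by its standard deviation, so a uniformly brighter or dimmer frame maps to roughly the same values. The C++ sketch below assumes interleaved 8-bit RGB input (R, G, B, R, G, B, ...); the function name `normalizePerChannel`, the buffer layout, and the `epsilon` parameter are hypothetical, and whether the network accepts inputs in this form is an open question, since it would likely need retraining on identically normalized data.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Per-channel standardization of an interleaved image buffer.
// Each channel is shifted to zero mean and scaled to unit (population)
// standard deviation, removing global brightness/contrast offsets.
// Hypothetical helper: layout and channel count are assumptions.
std::vector<float> normalizePerChannel(const std::vector<std::uint8_t>& rgb,
                                       int channels = 3, float epsilon = 1e-6f) {
    assert(channels > 0 && rgb.size() % static_cast<std::size_t>(channels) == 0);
    const std::size_t stride = static_cast<std::size_t>(channels);
    const std::size_t pixelCount = rgb.size() / stride;
    std::vector<float> out(rgb.size(), 0.0f);
    if (pixelCount == 0) return out;

    for (std::size_t c = 0; c < stride; ++c) {
        // Mean of this channel.
        double sum = 0.0;
        for (std::size_t p = 0; p < pixelCount; ++p) sum += rgb[p * stride + c];
        const double mean = sum / static_cast<double>(pixelCount);

        // Population standard deviation of this channel.
        double sqSum = 0.0;
        for (std::size_t p = 0; p < pixelCount; ++p) {
            const double d = rgb[p * stride + c] - mean;
            sqSum += d * d;
        }
        const double stddev = std::sqrt(sqSum / static_cast<double>(pixelCount));

        // epsilon avoids division by zero on flat (constant) channels,
        // which therefore map to 0.
        for (std::size_t p = 0; p < pixelCount; ++p) {
            out[p * stride + c] =
                static_cast<float>((rgb[p * stride + c] - mean) / (stddev + epsilon));
        }
    }
    return out;
}
```

Because each channel’s offset and scale are removed, a frame that is uniformly brighter by a constant amount produces (almost) the same output. Uneven lighting across the frame, such as a shadow on one side, is not corrected by this step.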