What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

One risk we discussed this week is that our app may fail to recognize hand-drawn lines as straight lines, since people generally cannot draw perfectly straight lines by hand. We considered a temporal approach but decided against it, because it would require the user to mount a camera over their drawing, which defeats the primary purpose of our project (we're trying to make the whole experience easier than creating the diagram digitally). We settled on a different solution: the user circles the endpoints of a line, and the program automatically draws a straight line between them. We also added a stretch goal of snapping a nearly horizontal or vertical line to exactly 0º or 90º.
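The snapping stretch goal could be sketched roughly as follows. This is only an illustration under stated assumptions, not our implementation: the function name `snap_line`, the 10º tolerance, and the choice to snap by averaging the off-axis coordinate are all hypothetical, and the two endpoints are assumed to already be available (for example, as the centers of the circled regions).

```python
import math

def snap_line(p1, p2, tolerance_deg=10.0):
    """Snap a segment to exactly horizontal (0º) or vertical (90º)
    when its angle is within tolerance_deg of that axis.

    p1, p2 are (x, y) endpoints. The tolerance value is an assumption.
    """
    (x1, y1), (x2, y2) = p1, p2
    dx, dy = x2 - x1, y2 - y1
    if dx == 0 and dy == 0:
        return p1, p2  # degenerate segment, nothing to snap

    # Direction of the segment folded into [0, 180) so that the
    # order of the endpoints does not matter.
    angle = math.degrees(math.atan2(dy, dx)) % 180.0

    # Near 0º (or 180º): make the line horizontal at the mean y.
    if min(angle, 180.0 - angle) <= tolerance_deg:
        y = (y1 + y2) / 2
        return (x1, y), (x2, y)

    # Near 90º: make the line vertical at the mean x.
    if abs(angle - 90.0) <= tolerance_deg:
        x = (x1 + x2) / 2
        return (x, y1), (x, y2)

    # Otherwise leave the line as drawn.
    return p1, p2
```

For example, a segment from `(0, 0)` to `(10, 1)` is about 5.7º off horizontal, so it would be flattened, while a 45º diagonal would be left unchanged.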

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

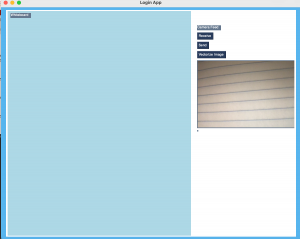

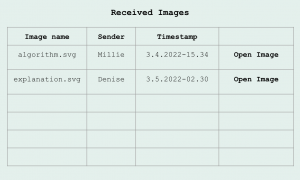

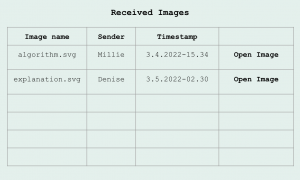

There are no updates to the schedule for our project. We did, however, add a new design page to our GUI/frontend. This page will list all of the images the user has received (up to 100 images). Our example of what this would look like is below:

There will be an “Open Image” button, as shown in the last column, that the user can click whenever they want to open an image. The image name is the name given by the sender. We also want to display the sender of each image so that the user can keep track of which image came from whom, reducing the amount of external communication (the sender won't have to text the user to say that they were the one who sent the image). This also helps users keep track when multiple users are sending images to each other within one session. We are currently working on our frontend/GUI and our code for vectorizing a diagram.
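One possible way to model the received-images page is sketched below. The class and field names (`ReceivedImage`, `Inbox`, `path`) are hypothetical, and dropping the oldest image once the 100-image limit is reached is an assumption, since we have not yet decided how the page should behave when it is full.

```python
from collections import deque
from dataclasses import dataclass

MAX_IMAGES = 100  # page limit from the design above


@dataclass(frozen=True)
class ReceivedImage:
    name: str    # name given by the sender
    sender: str  # shown so the user knows who sent the image
    path: str    # hypothetical location of the stored file


class Inbox:
    """Holds the rows shown on the received-images page."""

    def __init__(self, capacity=MAX_IMAGES):
        # Assumption: when full, the oldest image is discarded.
        self._items = deque(maxlen=capacity)

    def add(self, image):
        self._items.append(image)

    def rows(self):
        # One (image name, sender) pair per table row; the
        # "Open Image" button would use the stored path.
        return [(img.name, img.sender) for img in self._items]
```

Each row returned by `rows()` corresponds to one line of the table, with the “Open Image” button in the last column opening the file at that row's stored path.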