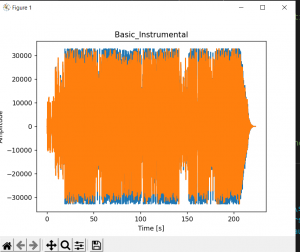

Last week, I was having trouble determining a general beat amplitude. I am continuing to use the amplitude-threshold-based onset beat detection algorithm. One idea for this algorithm is to pick a subset of the audio file where beats are definitely occurring (chosen using the BPM) and run detection there first. On that subset, the algorithm appears to be working reasonably well. I am still setting up the testing sequence, but at first glance the timestamps my script produces for the subset appear to match what I expected. One issue is that I believe it is overdetecting some beats, which I attribute to the sampling rate of the WAV file. I need to figure out how to sort through these detections to turn them into a user-friendly pattern.
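To make the overdetection problem concrete, here is a minimal sketch of amplitude-threshold onset detection followed by one possible way to thin out the extra detections. It is not my actual script: the function names `detect_onsets` and `merge_close_detections`, the `fraction` parameter, and the synthetic signal are all hypothetical, and using the BPM to set a minimum gap between beats is just an assumption about how the sorting could work.

```python
def detect_onsets(samples, sample_rate, threshold):
    """Return times (in seconds) where |amplitude| rises above the threshold."""
    onsets = []
    above = False
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if not above:
                # Rising crossing: record it as a candidate beat.
                onsets.append(i / sample_rate)
            above = True
        else:
            above = False
    return onsets


def merge_close_detections(times, bpm, fraction=0.5):
    """Keep a detection only if it is at least `fraction` of a beat period
    after the last kept one (hypothetical rule, assumes the BPM is known)."""
    min_gap = fraction * 60.0 / bpm
    kept = []
    for t in times:
        if not kept or t - kept[-1] >= min_gap:
            kept.append(t)
    return kept


# Synthetic stand-in for a WAV subset: 2 seconds at 1000 Hz, 120 BPM.
sample_rate = 1000
bpm = 120
signal = [0.0] * (2 * sample_rate)
burst = [0.9, -0.1, 0.8, -0.1, 0.7]  # one beat oscillates around the threshold
for start in range(0, len(signal), sample_rate * 60 // bpm):
    signal[start:start + len(burst)] = burst

raw = detect_onsets(signal, sample_rate, threshold=0.5)
merged = merge_close_detections(raw, bpm)
print(len(raw), "raw detections ->", merged)
```

In this toy signal each beat crosses the threshold three times, so the 12 raw detections collapse to 4 timestamps, one per beat. A real recording would need the threshold and `fraction` tuned, and this rule would drop genuine beats that fall closer together than the minimum gap.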

The next steps are to verify the subset's beat detection again and then attempt to extend it across the entire instrumental file. Because this is only the instrumental file, further testing will be needed to understand the limits of the algorithm. During the presentation we were asked what exactly those limits are, so testing whether it can handle short clips, long clips, etc. will begin next week, provided the algorithm can handle the entire WAV file.

Currently, I believe I am still on schedule because the instrumental piece is close to being done. I think we should be able to reach the goals set in our Gantt chart.