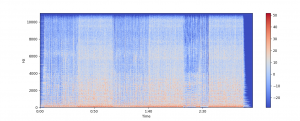

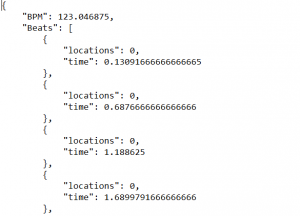

This week, the team focused largely on the presentation and on getting the testing done in time. From previous playtesting, we knew there were a few playability issues we needed to solve, and we addressed these in our presentation as well. The feedback we received pointed to three remaining problems: the game needs more visual feedback, the beatmaps are not well synced with the audio files, and the drums are not recognizing hits.

We have been invited to present at TechSpark, which means we need to finish all of our aesthetic improvements by Thursday. With finals coming up, one of our challenges will be balancing that workload against the final touches on the project and the preparation of the presentation, poster, video, and demos.

Overall, this next week will be dedicated to finishing the project (the individual tasks are listed in our blog posts) and completing the course documentation by Thursday or Friday.