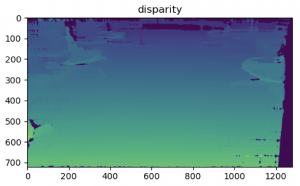

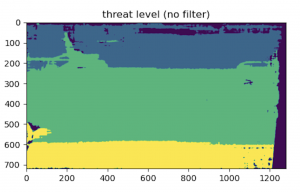

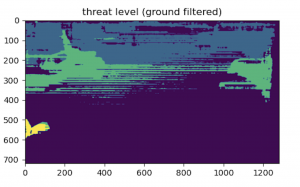

This week I finalized the depth imaging model with Ning, as detailed in the team status report. I then tested the integrated sensor feedback model with Ning and Xiaoran. Through this testing, we (1) discovered and fixed latency issues between ultrasonic sensing and vibrational feedback; (2) reduced the number of threat levels from three to two so that the difference in vibration is easier to distinguish; and (3) made communication between the Raspberry Pi and the Arduino more robust by explicitly checking for milestones such as “sensors are activated” in our messaging interface instead of relying on rough estimates of execution times.
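To illustrate the milestone check, here is a minimal sketch of how the Raspberry Pi side could wait for an explicit message instead of sleeping for an estimated duration. It is not our actual code: the message string `SENSORS_ACTIVATED`, the function name `wait_for_milestone`, and the newline-delimited ASCII format are all assumptions made for the example. The reader is passed in as a plain callable so the sketch runs without hardware; with pyserial, the `readline` method of an open `serial.Serial` port could presumably fill that role.

```python
import time
from typing import Callable

# Hypothetical milestone string; the real message format is not shown in this report.
SENSORS_READY = "SENSORS_ACTIVATED"


def wait_for_milestone(
    read_line: Callable[[], bytes],
    milestone: str,
    timeout_s: float = 10.0,
) -> bool:
    """Return True once `milestone` is received, or False if `timeout_s` elapses first.

    `read_line` is any callable that returns one line of bytes, or b"" when
    nothing arrived (for example, a serial port's readline with a read timeout).
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        raw = read_line()
        if not raw:
            # Nothing received on this attempt; keep polling until the deadline.
            continue
        message = raw.decode("ascii", errors="replace").strip()
        if message == milestone:
            return True
        # Any other message (boot chatter, debug output) is ignored.
    return False
```

The point of this design is that the Pi proceeds only after the Arduino reports that it has reached a known state, and a timeout turns a silent hang into an error the caller can handle.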

I also tried two ways of launching the Raspberry Pi main script at boot, namely an @reboot cron job and a call from the rc.local startup script, without success. After boot, the script's process is visible, but the physical system is not activated. For now, the system can be demoed by starting the script remotely over SSH, and I will troubleshoot the boot-time launch further during the week. Between now and demo day, I will also continue to test, optimize, and present the system with the team.