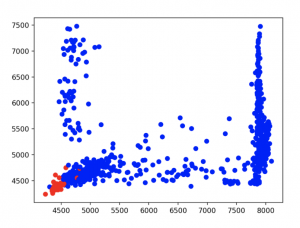

I built a test bed for live-testing different models on the main expressions we will use for EEG data parsing. It let me get live feedback on how well a simple blink-versus-baseline model performs when it looks only at the maximum values found within the time window. This initial model uses just 2 input features, taken from AF3 and AF4, across the baseline and blink samples to predict whether a blink occurred. Both a logistic regression model and a random forest model performed relatively well on the live test bed.

The next model tested was one that distinguishes any important activity from no activity. Again, a logistic regression model and a random forest model were trained only on the maximum voltage differences from AF3 and AF4, this time across all collected data samples. The random forest still returned a low error rate across the samples, which I attribute to overfitting. The logistic regression, however, reported a 20% error rate using just these 2 features to predict activity versus baseline, which is double our target of 10% error. After some investigation, I plotted the graph shown in this post.
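As a concrete reading of the two features described above, here is a minimal sketch of the feature extraction. It assumes that "maximum voltage difference" means the maximum minus the minimum voltage within one recording window, and that a window is stored as a dictionary of channel name to voltage list. Both are assumptions made for illustration, and the function names and numbers are hypothetical rather than taken from the project code.

```python
def peak_to_peak(samples):
    """Largest voltage difference within one channel's window (max minus min).

    Assumption: this is what "maximum voltage difference" refers to.
    """
    return max(samples) - min(samples)


def extract_features(window):
    """Map one recording window to the two-feature vector [AF3 range, AF4 range].

    `window` is assumed to be a dict of channel name -> list of voltages.
    """
    return [peak_to_peak(window["AF3"]), peak_to_peak(window["AF4"])]


# Made-up numbers for illustration only, not recorded EEG data.
example_window = {
    "AF3": [4.0, 5.5, 40.0, 6.0],
    "AF4": [3.0, 4.0, 35.5, 2.5],
}
features = extract_features(example_window)  # one (x, y) point on the scatter plot
```

Each recording then becomes a single point with the AF3 value on the x-axis and the AF4 value on the y-axis, which is the representation both classifiers were trained on.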

The blue points represent test or training recordings in which activity occurred, while the red points represent test or training recordings in which no activity occurred. The x-axis plots the largest AF3 EEG electrode difference found within the sample, and the y-axis plots the largest AF4 EEG electrode difference found within the sample. Evidently, these two features alone do not make the full data set linearly separable, so the logistic regression model, which draws a linear decision boundary, could not predict well.

My plans for this week include further testing with different models, comparing different features, and training on different subsets of the data to get models that report very high accuracy on specific prediction tasks. I then plan to manually construct an aggregate system of separately trained models to handle the entire task of distinguishing user features. I am currently on pace with the projected deadlines outlined in the design review.
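To make the linear-separability point concrete, the sketch below runs a plain perceptron over two-feature points and reports whether it finds a line that classifies every point correctly. This is an illustrative check and not the logistic regression used in the project. The function name, the label encoding (+1 for activity, -1 for baseline), and the toy points are all assumptions for the example.

```python
def perceptron_separates(points, labels, max_epochs=1000):
    """Return True if a perceptron finds a line that classifies every point.

    points: list of (x, y) feature pairs; labels: +1 (activity) or -1 (baseline).
    A False result only means no separating line was found within max_epochs.
    """
    w0 = w1 = b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x, y), label in zip(points, labels):
            # A point is misclassified if it lies on the wrong side of the line
            # (or exactly on it), so nudge the line toward the correct side.
            if label * (w0 * x + w1 * y + b) <= 0:
                w0 += label * x
                w1 += label * y
                b += label
                mistakes += 1
        if mistakes == 0:
            return True
    return False


# Toy points for illustration only, not recorded EEG data.
clustered = [(1, 1), (2, 2), (8, 9), (9, 8)]       # two well-separated groups
clustered_labels = [-1, -1, 1, 1]
interleaved = [(0, 0), (1, 1), (0, 1), (1, 0)]     # XOR-style layout
interleaved_labels = [1, 1, -1, -1]

separable = perceptron_separates(clustered, clustered_labels)
not_separable = perceptron_separates(interleaved, interleaved_labels)
```

When the classes interleave, as in the second toy set, no single line can split them, and a linear model is left with a floor on its error rate regardless of how long it trains. That is one motivation for trying additional features or non-linear models next.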