This week I presented our project proposal and did further research into the synchronization issue. This remains my biggest concern: whether we synchronize in real time or only after the tracks are recorded will greatly affect how our project is constructed. I want to know the advantages and technological limits of both approaches as soon as possible, so we can decide which one to focus on moving forward.

That said, I’ve found a partial solution in the web app SoundJack. The application lets users control the speed and number of samples sent to other users, which gives them some control over the latency and greatly stabilizes their connection. It calculates and displays the latency to the user, so they can make the appropriate adjustments to decrease it. Users can then assign multiple channels to mics and choose what audio to send to each other via buses.
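To make the sample-count trade-off concrete, here is a minimal sketch of the general digital-audio arithmetic: the more samples that go into each packet, the longer the sender has to wait before the packet can leave. This is not SoundJack’s actual implementation; the function names, the 48 kHz sample rate, and the packet sizes are assumptions chosen for illustration, and network delay comes on top of these numbers.

```python
def buffer_latency_ms(frames_per_packet, sample_rate_hz):
    """Time needed to fill one packet with audio, in milliseconds."""
    return 1000.0 * frames_per_packet / sample_rate_hz


def packets_per_second(frames_per_packet, sample_rate_hz):
    """How many packets must be sent each second to keep audio flowing."""
    return sample_rate_hz / frames_per_packet


# Hypothetical packet sizes at an assumed 48 kHz sample rate.
for frames in (64, 128, 256, 512):
    delay = buffer_latency_ms(frames, 48000)
    rate = packets_per_second(frames, 48000)
    print(f"{frames} frames/packet: {delay:.2f} ms buffering, {rate:.0f} packets/s")
```

Smaller packets reduce the buffering delay but require more packets per second, which is one plausible reason a tool would expose both settings to the user.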

One coincidental advantage of this is that, because we will be taking care to reduce latency during recording, the finished tracks will not need much adjustment to be completely on-beat. Still, this solution falls short in that either the latency has to be compounded across multiple users in order for real time to keep up with digital time, or other users will hear an ‘echo’ of themselves playing. Additionally, the interfaces of all the programs I’ve looked into (SoundJack, Audiomovers) are pretty complicated and hard to understand. One common complaint I’ve seen in the comments on YouTube guides is that these tools make sound recording more engineering-focused than music-focused. Perhaps our algorithm could make these speed and sample adjustments automatically, to take the burden off the user.

Furthermore, in these video guides, the users rely on some sort of hardware device so that they are not dependent on a wifi connection, whereas our project assumes its users will be on wifi. So far, I’ve only read the documentation and watched video guides. Since the software is free, I want to experiment with it in our lab sessions on Monday and Wednesday.

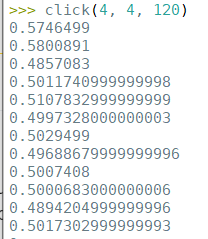

I completed the Django tutorial and have started on the metronome portion of the project. I have a good idea of what I want this part of our project to look like; however, I have less of an idea of what exactly the metronome’s output should be. One thing I know for sure is that, in order to mesh with our synchronization output, there needs to be a defined characteristic in the waveform where each beat begins. I also think that, because some people may prefer a distinguishing beat at the beginning of each measure, we need to take that into account when synchronizing.
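One possible shape for the metronome output, as a rough sketch rather than a settled design: each beat is a short decaying sine burst that starts at a known sample index, with silence in between, and the first beat of every measure uses a higher pitch and volume. The function name `click_track`, the click frequencies, the 20 ms click length, and the 48 kHz sample rate are all assumptions for illustration.

```python
import math


def click_track(bpm, beats_per_measure, measures, sample_rate=48000, click_ms=20.0):
    """Return (samples, onsets): a mono click track and each beat's start index."""
    samples_per_beat = 60.0 * sample_rate / bpm
    total_beats = beats_per_measure * measures
    track = [0.0] * int(round(total_beats * samples_per_beat))
    click_len = int(sample_rate * click_ms / 1000.0)
    onsets = []

    for beat in range(total_beats):
        onset = int(round(beat * samples_per_beat))
        onsets.append(onset)

        # Accent the first beat of each measure (assumed pitch and volume).
        downbeat = beat % beats_per_measure == 0
        freq = 1760.0 if downbeat else 880.0
        amp = 1.0 if downbeat else 0.6

        for i in range(click_len):
            if onset + i >= len(track):
                break
            t = i / sample_rate
            envelope = math.exp(-t / 0.005)  # fast decay, 5 ms time constant
            track[onset + i] = amp * envelope * math.sin(2 * math.pi * freq * t)

    return track, onsets


# One 4/4 measure at 120 bpm: beats are 24000 samples apart at 48 kHz.
track, onsets = click_track(bpm=120, beats_per_measure=4, measures=1)
print(onsets)
```

Because the track is silent everywhere except the bursts, the transition from silence to signal at each onset index could serve as the defined characteristic for the synchronization step, and the louder downbeat makes measure boundaries distinguishable from ordinary beats.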