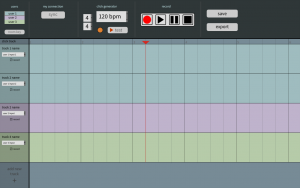

This week, I worked on the design review presentation with the rest of the team. I created this tentative design of the UI for our web app’s main page, where users will be recording and editing their music together.

At the beginning of the week, Jackson and I tested SoundJack and found that we could communicate with each other at a latency of 60 ms. This was much better than either of us was expecting, so using its approach (adjusting the packet size to increase speed, and the number of packets sent and received to increase audio quality) as the basis for our user-to-user connection seems like a good idea. Manual adjustments can become really complicated with more than two people, though, so I will instead create an automatic function that takes all the users’ connectivity into account and sets the buffer parameters accordingly.
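To make the idea of automatic adjustment concrete, here is a rough sketch of one possible heuristic. It is not how SoundJack works and not our final design: the `UserLink` fields, the list of packet sizes, the 48 kHz sample rate, and the cap on buffered packets are all assumptions I made up for illustration. It looks at the worst connection in the group, picks the smallest packet size whose jitter buffer stays under the cap, and estimates the resulting delay.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE = 48000                 # assumed audio sample rate in Hz
PACKET_SIZES = (64, 128, 256, 512)  # assumed candidate sizes, in samples per packet


@dataclass
class UserLink:
    """Hypothetical per-user connectivity measurement."""
    name: str
    latency_ms: float  # measured network latency to this user
    jitter_ms: float   # measured variation in packet arrival time


def choose_buffer_params(links, max_buffers=4):
    """Pick (packet_size, buffers, estimated_delay_ms) for the whole group.

    Heuristic: size everything for the worst link. Smaller packets mean
    lower delay, but need more buffered packets to absorb the same jitter,
    so take the smallest packet size that needs at most `max_buffers`.
    """
    worst_latency = max(link.latency_ms for link in links)
    worst_jitter = max(link.jitter_ms for link in links)

    for size in PACKET_SIZES:
        packet_ms = size / SAMPLE_RATE * 1000.0
        buffers = max(1, math.ceil(worst_jitter / packet_ms))
        if buffers <= max_buffers or size == PACKET_SIZES[-1]:
            # Delay = network latency + one packet to fill + buffered packets.
            delay_ms = worst_latency + (buffers + 1) * packet_ms
            return size, buffers, delay_ms


links = [UserLink("user_a", 20.0, 3.0), UserLink("user_b", 35.0, 10.0)]
packet_size, buffers, delay_ms = choose_buffer_params(links)
print(packet_size, buffers, round(delay_ms, 1))
```

Sizing for the worst link keeps everyone on the same parameters, which is simpler than per-pair tuning but means one bad connection raises the delay for the whole group.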

We have settled a major concern of our project: we will reduce the real-time latency so that users are able to hear each other, and synchronize their recordings afterwards. We have updated our Gantt chart to reflect this.

My first task will be to create the click track generator. To begin, I created an HTML form that sends the beats per measure, beat value, and tempo to the server when the user sets them and clicks the ‘test play’ button. A function will then generate a looped audio clip from this information and play it back to the user. I’m still not sure whether the sound should be generated with a Python DSP library or the Web Audio API. Further research is needed, but I don’t expect the two implementations to differ much, so I should be able to get the click track generator working by 3/9, the planned due date for this deliverable.
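As a starting point for the server-side option, here is a minimal sketch of a click track generator that uses only Python's standard library. The function names, the 44.1 kHz sample rate, the click frequencies, and the 30 ms click length are all placeholder assumptions, and I am assuming the tempo counts quarter notes per minute. It builds one measure of samples, which the player could then loop.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def click_track_measure(beats_per_measure, beat_value, tempo_bpm,
                        sample_rate=SAMPLE_RATE):
    """Return one measure of click samples as floats in [-1, 1]."""
    # Assumption: tempo is in quarter notes per minute, so a beat of
    # value 4 lasts 60/tempo seconds and a beat of value 8 lasts half that.
    seconds_per_beat = (60.0 / tempo_bpm) * (4.0 / beat_value)
    samples_per_beat = int(round(seconds_per_beat * sample_rate))
    click_len = min(int(0.03 * sample_rate), samples_per_beat)  # 30 ms click

    samples = []
    for beat in range(beats_per_measure):
        # Higher pitch on the first beat to mark the start of the measure.
        freq = 1500.0 if beat == 0 else 1000.0
        for n in range(samples_per_beat):
            if n < click_len:
                envelope = 1.0 - n / click_len  # linear fade-out
                tone = math.sin(2 * math.pi * freq * n / sample_rate)
                samples.append(0.8 * envelope * tone)
            else:
                samples.append(0.0)  # silence until the next beat
    return samples


def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write float samples to a 16-bit mono WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        frames = b"".join(struct.pack("<h", int(s * 32767)) for s in samples)
        wav.writeframes(frames)


# Example: one measure of 4/4 at 120 bpm.
measure = click_track_measure(beats_per_measure=4, beat_value=4, tempo_bpm=120)
write_wav("click_track.wav", measure)
```

Generating a single measure and looping it keeps the file small. The Web Audio API version would likely schedule short oscillator bursts at the same beat intervals instead of building a sample buffer.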