This week I integrated my syncing algorithm into the new recorder. After many unsuccessful attempts to write our recorded data into a readable .wav format, I gave up and decided to use Recorderjs instead. Using its built-in ‘exportWAV’ function, I was able to write an async function that uploads recordings to our server.

With that done, I ran more tests with the new recorder, playing a few pieces along with the metronome to iron out some bugs. One problem I kept running into was that the tolerance parameter I set for deciding whether a sound counts as ‘on beat’ did not work for all tempos. While it is important to account for pieces that begin on an offbeat, the tolerance became impossible to meet at faster tempos. To fix this, I set a limit on how low the value can go.

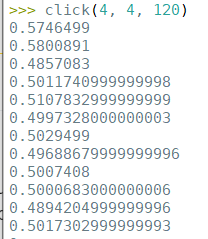
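
To make the tolerance fix concrete, here is a minimal sketch of the idea in Python. It is not the code from our project: the function names, the assumption that the tolerance scales with the beat interval, and the values of `fraction` and `min_tolerance` are all made up for illustration.

```python
def beat_tolerance(tempo_bpm, fraction=0.25, min_tolerance=0.05):
    """Return the allowed distance (in seconds) from a beat.

    Assumption: the tolerance is a fraction of the beat interval,
    so it shrinks as the tempo rises. `min_tolerance` is the floor
    that keeps it from becoming impossible to meet at fast tempos.
    Both default values are hypothetical.
    """
    beat_interval = 60.0 / tempo_bpm  # seconds between beats
    return max(beat_interval * fraction, min_tolerance)


def is_on_beat(onset_time, tempo_bpm, first_beat=0.0):
    """Check whether a detected sound falls close enough to a beat."""
    beat_interval = 60.0 / tempo_bpm
    # Position of the onset within the current beat interval.
    offset = (onset_time - first_beat) % beat_interval
    # Distance to whichever neighbouring beat is closer.
    distance = min(offset, beat_interval - offset)
    return distance <= beat_tolerance(tempo_bpm)
```

With these made-up defaults, a tempo of 480 bpm would give a raw tolerance of about 31 ms, so the floor of 50 ms takes over instead.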

The most critical part of our project is latency reduction. Since we haven’t deployed to the cloud yet, some of the networking aspects cannot be tested or improved. For the time being, I familiarized myself with the monitoring feature and the Django Channels setup that Jackson implemented the week before. While reading about Channels, I began wondering whether the original click generator I wrote in Python could be implemented as an AsyncWebsocketConsumer. While the click track we have now works fine, users in a group have to listen to one instance of it played through monitoring, rather than having the beats sent to them from the server. This might cause some confusion, as users will have to work out who runs the metronome and what tempo to set; on the other hand, if the metronome is implemented through a websocket, the tempo will be updated automatically for all users when the page refreshes. Latency will affect when users hear the beats either way, but again, we have yet to test that.
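
As a rough sketch of what a server-side click generator could look like, the loop below schedules beats with plain `asyncio` and hands each one to a `send` callback. This is only an illustration under my own assumptions: `run_click_track`, the message fields, and the fixed number of beats are hypothetical, and nothing here has been wired into Channels or tested against real network latency.

```python
import asyncio
import json
import time


async def run_click_track(send, tempo_bpm, beats):
    """Send one JSON message per beat at the given tempo.

    `send` is any async callable that takes a string. The message
    format below is a made-up example, not an existing protocol.
    """
    interval = 60.0 / tempo_bpm  # seconds between beats
    start = time.monotonic()
    for beat in range(beats):
        # Schedule each beat relative to the start time so that small
        # sleep inaccuracies do not accumulate over the track.
        target = start + beat * interval
        delay = target - time.monotonic()
        if delay > 0:
            await asyncio.sleep(delay)
        await send(json.dumps({"type": "click", "beat": beat, "tempo": tempo_bpm}))
```

Inside an `AsyncWebsocketConsumer`, the callback could presumably be a thin wrapper around `self.send(text_data=...)`, though sending to a whole group would go through the channel layer instead, and I have not tried either yet.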

Right now, getting our project deployed to the cloud seems to be the most important thing. This Monday, I will discuss with everyone how to move forward with that.