Our team worked on creating the design review slides. I focused on creating the login and registration pages for the basic user authentication setup.

Ivy’s Status Report for 3/6

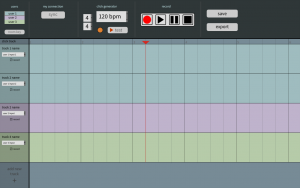

This week, I worked on the design review presentation with the rest of the team. I created this tentative design of the UI for our web app’s main page, where users will be recording and editing their music together.

At the beginning of the week, Jackson and I tested out SoundJack and found we could communicate with one another with a latency of 60ms through it. This was much better than either of us was expecting, so using this method (adjusting the packet size to increase speed, and the number of packets sent/received to increase audio quality) as a basis for our user-to-user connection seems to be a good idea. But instead of manual adjustments, which can become really complicated with more than two people, I will be creating an automatic function that takes all of the users’ connectivity into account and sets the buffer parameters based on that.
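A minimal sketch of what such an automatic function might look like. Everything here is an assumption for illustration: the `PeerStats` fields, the 48 kHz sample rate, the candidate packet sizes, the 60ms target, and the simple latency model (network delay plus one packet of capture time plus the queued packets). It is not SoundJack's algorithm, just one way to pick parameters from the worst-connected user.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE = 48000                    # assumed sample rate in Hz
PACKET_SIZES = (128, 256, 512, 1024)   # assumed candidate packet sizes (samples)


@dataclass
class PeerStats:
    """Hypothetical per-user connectivity measurements."""
    name: str
    one_way_ms: float   # measured one-way network delay
    jitter_ms: float    # variation in packet arrival time


@dataclass
class BufferParams:
    packet_samples: int     # samples per packet
    depth_packets: int      # packets queued before playback
    est_latency_ms: float   # estimated end-to-end latency


def choose_buffer_params(peers, target_latency_ms=60.0):
    """Pick one packet size and buffer depth for the whole session."""
    # The slowest and least stable connection sets the limits for everyone.
    worst_delay = max(p.one_way_ms for p in peers)
    worst_jitter = max(p.jitter_ms for p in peers)

    candidates = []
    for size in PACKET_SIZES:
        packet_ms = 1000.0 * size / SAMPLE_RATE
        # Queue enough packets to ride out the worst jitter (at least one).
        depth = max(1, math.ceil(worst_jitter / packet_ms))
        total_ms = worst_delay + packet_ms * (1 + depth)
        candidates.append(BufferParams(size, depth, total_ms))

    fitting = [c for c in candidates if c.est_latency_ms <= target_latency_ms]
    if fitting:
        # Among settings that meet the target, prefer the largest packets.
        return max(fitting, key=lambda c: c.packet_samples)
    # Nothing meets the target: fall back to the lowest-latency setting.
    return min(candidates, key=lambda c: c.est_latency_ms)


if __name__ == "__main__":
    peers = [PeerStats("ivy", 20.0, 5.0), PeerStats("jackson", 30.0, 10.0)]
    print(choose_buffer_params(peers))
```

Basing the choice on the worst connection keeps the sketch simple; a real version would probably need per-pair settings once more than two people are connected.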

We have settled a major concern of our project: we will reduce the real-time latency so that users will be able to hear each other, and synchronize their recordings afterwards. We have updated our Gantt chart to reflect this.

My first task will be to create the click track generator. To begin, I created an HTML form which will send the beats per measure, beat value, and tempo variables to the server when the user sets them and clicks on the ‘test play’ button. A function will then generate a looped audio sound with this information and play it back to the user. As for generating the sound, I’m still not sure whether it should be created with a Python DSP library or the Web Audio API. Further research is needed, but I imagine the two implementations will not be too different, so I should be able to get the click track generator functioning by 3/9, the planned due date for this deliverable.
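As a rough idea of the server-side option, here is a sketch that builds a click track from the three form values using only the Python standard library. The function names, the 44.1 kHz sample rate, the click pitches, and the convention that tempo counts quarter notes per minute are all assumptions for illustration, not decisions the project has made.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def click_track(beats_per_measure, beat_value, tempo_bpm, measures=1,
                sample_rate=SAMPLE_RATE):
    """Return 16-bit mono samples for a click track.

    Assumes tempo_bpm counts quarter notes per minute and beat_value is the
    bottom number of the time signature (4 = quarter note, 8 = eighth note).
    """
    seconds_per_beat = (60.0 / tempo_bpm) * (4.0 / beat_value)
    samples_per_beat = round(seconds_per_beat * sample_rate)
    # Each click is a short decaying sine burst (about 30 ms).
    click_len = min(samples_per_beat, int(0.03 * sample_rate))

    samples = []
    for beat in range(beats_per_measure * measures):
        # Accent the first beat of every measure with a higher pitch.
        freq = 1760.0 if beat % beats_per_measure == 0 else 880.0
        for n in range(samples_per_beat):
            if n < click_len:
                envelope = 1.0 - n / click_len
                value = envelope * math.sin(2 * math.pi * freq * n / sample_rate)
            else:
                value = 0.0  # silence until the next beat
            samples.append(int(value * 0.8 * 32767))
    return samples


def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write the samples to a mono 16-bit WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))


if __name__ == "__main__":
    # One measure of 4/4 at 120 BPM; looping it gives a continuous click.
    write_wav("click.wav", click_track(4, 4, 120))
```

Generating exactly one measure and looping it on playback keeps the file small. The Web Audio API route would do the same arithmetic in the browser instead.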

Team Status Report for 3/6

Our biggest risk remains that sending audio over a socket connection may either not work or not lower latency enough to be used in a recording setting. To manage this risk, we are focusing most of our research efforts on sockets (Jackson and Christy) and synchronization (Ivy). As a contingency plan, our app can still work without the real-time monitoring using a standard HTML form data upload, but it will be significantly less interesting this way.

In our research, we found that other real-time audio communication tools for minimal latency use peer-to-peer connections, instead of or in addition to a web server. This makes sense, since going through a server increases the number of transactions, which in turn increases the time it takes for data to be sent. Since a peer-to-peer connection seems to be the only way to get latency as low as we need it to be, we decided on a slightly different architecture for the app. This is detailed in our design review, but the basic idea is that audio will be sent from performer to performer over a socket connection. The recorded audio is only sent to the server when one of the musicians hits a “save” button on the project.

Because of this small change in plans, we have a new schedule and Gantt chart, which can be found in our design review slides. The high-level change is that we need more time to work on peer-to-peer communication.

Jackson’s Status Report for 3/6

This week, I spent a lot of time looking into websockets and how they can be integrated with Django. I updated the server on our git repo to work with the “channels” library, a Python websockets interface for use with Django. This required changing the files to behave as an asynchronous server gateway interface (ASGI), rather than the default web server gateway interface (WSGI). The advantage this provides is that the musicians using our application can receive audio data from the server without having to send requests out at the same time. As a result, the latency can be lowered quite a bit.
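To illustrate the push model, here is a bare ASGI WebSocket application written against the ASGI interface directly, plus a tiny fake server loop to drive it. This is not the code in our repo (Channels wraps this interface with its own consumer classes); `make_audio_push_app`, `run_demo`, and the sample chunks are hypothetical names and data used only for the sketch.

```python
import asyncio


def make_audio_push_app(chunks):
    """Build an ASGI app that pushes audio chunks to a connected client."""

    async def app(scope, receive, send):
        if scope["type"] != "websocket":
            return  # this sketch ignores plain HTTP
        event = await receive()
        if event["type"] == "websocket.connect":
            await send({"type": "websocket.accept"})
        # The server sends each chunk on its own schedule; the client
        # never has to issue a request per chunk as it would over HTTP.
        for chunk in chunks:
            await send({"type": "websocket.send", "bytes": chunk})
        await send({"type": "websocket.close", "code": 1000})

    return app


async def run_demo():
    """Drive the app with stand-in receive/send callables, as a server would."""
    inbox = asyncio.Queue()
    await inbox.put({"type": "websocket.connect"})
    sent = []

    async def receive():
        return await inbox.get()

    async def send(event):
        sent.append(event)

    app = make_audio_push_app([b"\x00\x01", b"\x02\x03"])
    await app({"type": "websocket"}, receive, send)
    return sent


if __name__ == "__main__":
    for event in asyncio.run(run_demo()):
        print(event)
```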

Additionally, I worked pretty hard on our design review presentation (to be uploaded 3/7), which included a lot more research on the technologies we plan to use. In addition to research on websockets, I looked specifically at existing technology that does what we plan to do. One example is an application called SoundJack, which is essentially an app for voice calling with minimal latency. While it doesn’t deal with recording or editing at all, Ivy and I were able to talk to each other on SoundJack with latency around 60ms, far lower than we thought was possible. It does this by sending tiny packets (512 samples each by default) over a peer-to-peer architecture.
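A quick back-of-the-envelope check on why small packets help: a packet cannot be sent until all of its samples have been captured, so its duration is a floor on the added delay. The sample rates below are assumptions (common audio rates), since we have not confirmed which one SoundJack used in our test.

```python
def packet_duration_ms(samples_per_packet, sample_rate_hz):
    """Time it takes to capture one packet's worth of audio, in milliseconds."""
    return 1000.0 * samples_per_packet / sample_rate_hz


for rate in (44100, 48000):  # assumed sample rates
    print(f"{rate} Hz: {packet_duration_ms(512, rate):.2f} ms per 512-sample packet")
# prints:
# 44100 Hz: 11.61 ms per 512-sample packet
# 48000 Hz: 10.67 ms per 512-sample packet
```

At roughly 11ms per packet, packet capture time accounts for only a fraction of the 60ms we measured.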

We are still on schedule to finish in time. Per the updated Gantt chart, my main deliverable this week is a functional audio recording and playback interface on the web.