Final Poster PDF

Lavender’s Status Report for May 8

This week I gave the final presentation and received Prof. Sullivan’s feedback on how well correlation coefficients express the fidelity of the filtered signal. I will address this point in our final report. I also helped run the user test with Eryn, and it is exciting that she passed. Finally, I helped make the final poster. Next week I will focus on writing up the final report.
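One property that matters when correlation is used as a fidelity measure is that Pearson's r does not change under any positive scaling and offset of the filtered signal. A filter that halves the amplitude and adds a DC shift can therefore still score r = 1. The minimal numpy sketch below compares r with root-mean-square error (RMSE) on a synthetic sine wave, not on our recordings. The helper names `pearson_r` and `rmse` are hypothetical.

```python
import numpy as np

def pearson_r(clean, filtered):
    """Pearson correlation between a reference signal and a filtered signal."""
    return float(np.corrcoef(clean, filtered)[0, 1])

def rmse(clean, filtered):
    """Root-mean-square error, which is sensitive to gain and offset errors."""
    return float(np.sqrt(np.mean((clean - filtered) ** 2)))

t = np.linspace(0, 1, 256)
clean = np.sin(2 * np.pi * 10 * t)
distorted = 0.5 * clean + 3.0  # wrong gain and a DC offset

print(pearson_r(clean, distorted))  # r is 1 despite the distortion
print(rmse(clean, distorted))       # RMSE exposes the distortion
```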

Lavender’s Status Report for May 1

This week I made the slides for the final presentation and prepared to give it. Tomorrow I will test our software with Tianyi Zhu to see how our detection algorithm performs on a different individual. In the coming week, I will keep practicing for the final presentation and write part of our final report.

Lavender’s Status Report for Apr 24

This week I implemented a low-pass filter for short data packets (32 Hz) and switched the eye-blink removal algorithm to Empirical Mode Decomposition (EMD), which is faster than ICA or STFT+MCA. I also tried playing the game as a test subject, but my hair is too thick to ensure good contact quality. With the team, I helped test the entire pipeline (calibration and gameplay) with Chris as the test subject.
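Filtering short packets one at a time causes glitches at packet boundaries unless the filter state carries over from one packet to the next. The minimal sketch below shows one way to do that with a causal FIR low-pass filter in numpy. Several of its details are assumptions rather than facts from this report: the 32 Hz figure is treated as the cutoff, the sampling rate is taken as 128 Hz, and the 31-tap Hamming windowed-sinc design and the 16-sample packets are hypothetical. The report does not say which filter design we actually used. `lowpass_fir` and `StreamingFIR` are illustrative names.

```python
import numpy as np

def lowpass_fir(cutoff_hz, fs_hz, n_taps=31):
    """Windowed-sinc (Hamming) low-pass FIR taps, normalised to unity DC gain."""
    n = np.arange(n_taps) - (n_taps - 1) / 2
    h = np.sinc(2 * cutoff_hz / fs_hz * n) * np.hamming(n_taps)
    return h / h.sum()

class StreamingFIR:
    """Causal FIR filter applied packet by packet.

    The last n_taps - 1 input samples are kept between calls, so the
    concatenated output equals filtering the whole stream in one go.
    """

    def __init__(self, taps):
        self.taps = np.asarray(taps, dtype=float)
        self.history = np.zeros(len(self.taps) - 1)

    def process(self, packet):
        x = np.concatenate([self.history, np.asarray(packet, dtype=float)])
        # 'valid' mode returns exactly len(packet) outputs
        y = np.convolve(x, self.taps, mode="valid")
        self.history = x[-(len(self.taps) - 1):]
        return y

# Hypothetical settings: 128 Hz sampling, 32 Hz cutoff, 16-sample packets
taps = lowpass_fir(cutoff_hz=32.0, fs_hz=128.0)
filt = StreamingFIR(taps)
signal = np.random.default_rng(0).standard_normal(160)
filtered = np.concatenate(
    [filt.process(signal[i:i + 16]) for i in range(0, len(signal), 16)]
)
```

Carrying the state over means the packet size does not affect the result. The trade-off is a fixed group delay of (n_taps - 1) / 2 samples, which a causal real-time filter cannot avoid.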

Team’s Status Report for Apr 24

This week our team finished integrating the entire pipeline.

On the software side, we added a calibration phase that records user data to fine-tune the pretrained model.

On the neural network side, we implemented a fast API for the training routine and for integration with the software side.

On the game logic side, we implemented the denoising algorithms: a band-pass filter and EMD.

Interim Report Gantt Chart Update

Lavender’s Status Report for Apr 10

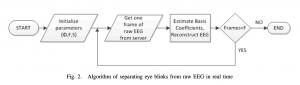

This week I worked on real-time denoising of the input data and on data I/O. Last week we confirmed that ICA can denoise the data, but it is not fast enough, so we decided to denoise in real time with a heuristic instead. We avoided data-driven approaches for this particular problem because we do not have enough time to record and annotate data for training a denoiser. Our approach exploits the sparsity of blinking signals.

The idea is to find two dictionaries (sets of basis vectors): one in which the blinking artifacts are sparse and one in which the original signal is sparse. Sparse means that most of the coefficients are zero. We then find the coefficients of the mixed signal with respect to both dictionaries and reconstruct the clean signal using only the coefficients from the “clean” dictionary.
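Written out, the idea corresponds to the standard morphological component analysis (MCA) formulation sketched below. The notation is our own and is not taken from the paper. Here $y$ is the mixed signal, $\Phi_s$ and $\Phi_b$ are the clean-signal and blink dictionaries, $\alpha_s$ and $\alpha_b$ are their coefficients, and $\lambda > 0$ is a regularization weight:

$$
(\hat\alpha_s, \hat\alpha_b) = \arg\min_{\alpha_s,\,\alpha_b} \; \tfrac{1}{2}\,\lVert y - \Phi_s\alpha_s - \Phi_b\alpha_b \rVert_2^2 + \lambda\left(\lVert\alpha_s\rVert_1 + \lVert\alpha_b\rVert_1\right),
\qquad
\hat s = \Phi_s\hat\alpha_s .
$$

The quadratic term keeps the decomposition faithful to the recording. The L1 terms push most coefficients to zero, so each component ends up explained by the dictionary in which it is sparse. The blink estimate $\Phi_b\hat\alpha_b$ is then discarded.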

(block diagram by Matiko et al.)

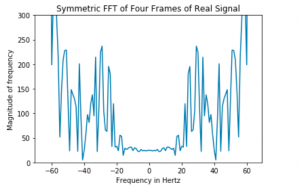

While the idea seems simple, the implementation involves many design choices. For instance, we build the dictionaries with the Short-Time Fourier Transform using a long Hann window and a short Hann window. Because of the time-frequency trade-off, the two windows give basis vectors with different frequency resolutions. To keep all basis vectors the same length, we zero-pad each frame to a common FFT length, which also samples the spectrum more finely. To save storage space, we keep only the non-negative-frequency Fourier coefficients during basis matching, because the Fourier transform of a real signal is conjugate-symmetric around zero frequency.
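The minimal numpy sketch below illustrates the zero-padding and one-sided-spectrum choices. The window lengths (64 and 16 samples), hop sizes, and FFT length are hypothetical values. The function computes STFT coefficients, that is, projections onto Hann-windowed Fourier atoms. It does not reproduce our exact dictionary code, and `stft_frames` is an illustrative name.

```python
import numpy as np

def stft_frames(x, win_len, hop, nfft):
    """One-sided STFT of a real signal using a Hann window of length win_len.

    Each frame is zero-padded to nfft samples, so long and short windows
    yield the same number of frequency bins (nfft // 2 + 1).
    """
    if win_len > nfft:
        raise ValueError("win_len must not exceed nfft")
    window = np.hanning(win_len)
    starts = range(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    # n=nfft zero-pads each frame; rfft keeps only non-negative frequencies
    return np.fft.rfft(frames, n=nfft, axis=1)

x = np.random.default_rng(0).standard_normal(256)
long_coeffs = stft_frames(x, win_len=64, hop=16, nfft=64)   # fine frequency detail
short_coeffs = stft_frames(x, win_len=16, hop=4, nfft=64)   # fine time detail
print(long_coeffs.shape, short_coeffs.shape)
```

Both transforms share `nfft`, so every frame has 33 frequency bins. `rfft` drops the negative-frequency half, which for a real signal is only the complex conjugate of the positive half.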

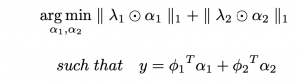

The last example is the choice of optimizer. We chose the Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) from the PyLops library because it solves an unconstrained problem with L1 regularization and, as the name suggests, is faster than ISTA.

(objective function with L1 regularization)
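To show what FISTA iterates, here is a standalone numpy version for the L1-regularized least-squares problem 0.5 * ||y - D a||² + lam * ||a||₁, where D stacks the two dictionaries side by side. We used the solver that ships with PyLops. This sketch does not reproduce PyLops' API, and `fista` and `soft_threshold` are illustrative names. For simplicity it assumes a real-valued dictionary. With complex STFT coefficients, the soft-thresholding step would shrink each coefficient's magnitude and keep its phase.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1 for real v."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def fista(D, y, lam, n_iter=500):
    """Minimise 0.5 * ||y - D a||_2^2 + lam * ||a||_1 with FISTA."""
    L = np.linalg.norm(D, 2) ** 2  # Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    z = a.copy()  # extrapolated point where the gradient is evaluated
    t = 1.0
    for _ in range(n_iter):
        grad = D.T @ (D @ z - y)
        a_next = soft_threshold(z - grad / L, lam / L)
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        # Nesterov momentum: this step is what makes FISTA faster than ISTA
        z = a_next + ((t - 1.0) / t_next) * (a_next - a)
        a, t = a_next, t_next
    return a

# Toy check: recover a 3-sparse coefficient vector from a random dictionary
rng = np.random.default_rng(1)
D = rng.standard_normal((50, 100))
D /= np.linalg.norm(D, axis=0)
a_true = np.zeros(100)
a_true[[5, 37, 80]] = [3.0, -2.0, 2.5]
y = D @ a_true
a_hat = fista(D, y, lam=0.01)
```

For blink removal, the coefficient vector splits into a clean part and a blink part matching the column blocks of D, and only the clean block is used to reconstruct the signal.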

While the denoiser code is complete, I am still debugging it, inspecting its output visually, and testing it on previously recorded data to make sure it works. Once that is done, we will test it on real-time data frames this week.

Lavender’s Status Report for Apr 3

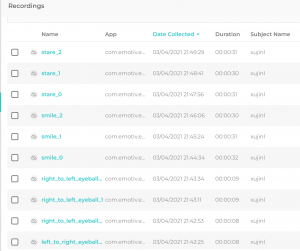

This week I recorded the dataset for training and testing with Chris. For each activity we recorded three episodes of 15-30 seconds each. The activities were: neutral with eyes closed, neutral with eyes open, thinking about lifting the left arm, thinking about lifting the right arm, moving the eyes from left to right, moving the eyes from right to left, smiling, and staring.

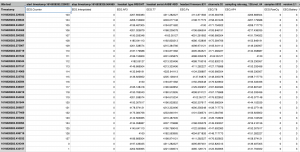

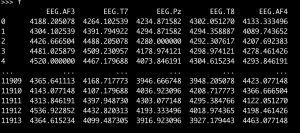

I also exported the recorded dataset to CSV files and wrote a Python script that automatically converts the batch of CSV files into pandas DataFrames, which can then be converted to tensors for training with PyTorch.
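A minimal sketch of such a batch conversion follows. The file-naming convention `<activity>_<episode>.csv` and the single header row of channel names are assumptions made for illustration. If the exported files carry extra metadata lines above the column names, pass `skiprows` to `pd.read_csv`. `load_recordings` is a hypothetical name.

```python
from pathlib import Path

import pandas as pd

def load_recordings(folder):
    """Read every CSV in `folder` into one labelled DataFrame.

    Assumes (hypothetically) file names such as 'smile_1.csv', i.e.
    '<activity>_<episode>.csv', with one header row of channel names.
    """
    frames = []
    for path in sorted(Path(folder).glob("*.csv")):
        activity, episode = path.stem.rsplit("_", 1)
        df = pd.read_csv(path)
        df["activity"] = activity
        df["episode"] = int(episode)
        frames.append(df)
    if not frames:
        raise FileNotFoundError(f"no CSV files found in {folder}")
    return pd.concat(frames, ignore_index=True)
```

From here, the channel columns can go to PyTorch through `df[channels].to_numpy()` and a float tensor, while the `activity` column supplies the labels.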

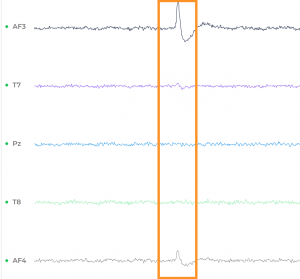

One challenge in preprocessing the raw data is eye-blink removal. The artifact is visually obvious in the frontal lobe sensors AF3 and AF4. Using the MNE-Python neurophysiological data processing package, I was able to remove the eye-blink artifact with fast independent component analysis (FastICA). ICA separates a mixed signal into its additive components. In our case, the eye blink is a component added to the source brain signal. FastICA first prewhitens the input signal: it centers each component to zero mean and then applies a linear transformation that makes the components uncorrelated with unit variance. It then iteratively searches for an orthogonal rotation of the whitened signal that maximizes non-Gaussianity.

Although MNE-Python works well for preprocessing our recorded data, it performs poorly on the short packets we receive in real time. I am therefore implementing morphological component analysis on the short-time Fourier transform to remove the blinking noise in real time, following the method proposed in “Real time eye blink noise removal from EEG signals using morphological component analysis” by Matiko et al.
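The two FastICA steps described above can be sketched in plain numpy: prewhitening, then a fixed-point search for an orthogonal unmixing rotation. This minimal symmetric FastICA with the tanh nonlinearity only illustrates the algorithm and is not MNE-Python's implementation. In the toy mixture, a sine and a square wave stand in for EEG and blink sources. The function names are hypothetical.

```python
import numpy as np

def whiten(X):
    """Centre each channel, then decorrelate the channels to unit variance.

    X has shape (n_channels, n_samples).
    """
    Xc = X - X.mean(axis=1, keepdims=True)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc))
    W_white = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    return W_white @ Xc

def sym_decorrelate(W):
    """Return (W W^T)^(-1/2) W, whose rows are orthonormal."""
    s, u = np.linalg.eigh(W @ W.T)
    return u @ np.diag(1.0 / np.sqrt(s)) @ u.T @ W

def fast_ica(X, n_iter=200, tol=1e-8, seed=0):
    """Symmetric FastICA with g = tanh; returns the estimated sources."""
    Z = whiten(X)
    n, m = Z.shape
    W = sym_decorrelate(np.random.default_rng(seed).standard_normal((n, n)))
    for _ in range(n_iter):
        g = np.tanh(W @ Z)
        g_prime = 1.0 - g ** 2
        # Fixed-point update: w <- E[z g(w^T z)] - E[g'(w^T z)] w, per row
        W_new = sym_decorrelate((g @ Z.T) / m - np.diag(g_prime.mean(axis=1)) @ W)
        # Converged once every new row is (anti-)parallel to the old one
        converged = np.max(np.abs(np.abs(np.einsum("ij,ij->i", W_new, W)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W @ Z

# Toy mixture: two sources observed through a fixed 2x2 mixing matrix
t = np.linspace(0, 40, 4000)
S_true = np.vstack([np.sin(2 * t), np.sign(np.sin(3 * t))])
X = np.array([[1.0, 0.6], [0.4, 1.0]]) @ S_true
S_est = fast_ica(X)
```

ICA recovers sources only up to order, sign, and scale. To remove a blink component, one would zero the matching row of the estimated sources and project back through the inverse of the unmixing transform.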

Lavender’s Status Report for March 27

This week I organized two hardware tests. The first test revealed an unexpected contact quality issue, so I contacted Emotiv customer support about the contact quality, training, and raw data stream API issues. The second test confirmed that wet hair with plenty of glycerin can keep the contact quality stable at 100%. Next week I will record real data with good contact quality and start testing our denoising algorithm on it.

We tried multiple ways of differentiating the brainwave signals. In the picture above, we tested whether seeing different colors would produce a visible difference. We also tested temperature, pain, movement, and sound. So far, movement seems to be the most effective cue. We are still looking into other training procedures and signal processing techniques to improve our detection.