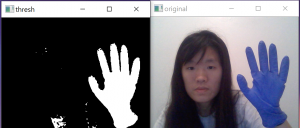

This week, I worked with my group to complete our Design Review paper and made progress on gesture detection. Following Uzair’s advice, I wore a generic-brand blue surgical glove and extracted only the glove from each webcam image, so that the face and background are ignored and only the hand remains, as pictured below:

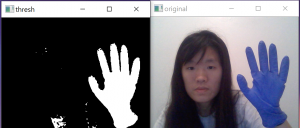
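The glove extraction described above can be sketched as a simple color threshold in HSV space. This is a minimal illustration only, not the code I actually ran: the hue, saturation, and value bounds are assumed values that would need tuning for a real glove and lighting, and the function names `is_glove_pixel` and `extract_glove` are hypothetical. It uses plain Python lists of RGB tuples instead of a real webcam frame so that it stays self-contained.

```python
import colorsys

# Assumed HSV bounds for a blue glove (all on a 0..1 scale).
# These are illustrative guesses, not measured values.
HUE_RANGE = (0.50, 0.72)   # roughly cyan-blue through blue
MIN_SATURATION = 0.35      # rejects gray or washed-out pixels
MIN_VALUE = 0.20           # rejects very dark pixels

def is_glove_pixel(rgb):
    """Return True if an (R, G, B) pixel with 0..255 channels looks glove-blue."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return (HUE_RANGE[0] <= h <= HUE_RANGE[1]
            and s >= MIN_SATURATION
            and v >= MIN_VALUE)

def extract_glove(image):
    """Keep glove-colored pixels and paint everything else black.

    `image` is a list of rows, each row a list of (R, G, B) tuples.
    """
    black = (0, 0, 0)
    return [[px if is_glove_pixel(px) else black for px in row]
            for row in image]

# Tiny 2x2 "frame": glove blue, skin tone, gray background, darker blue.
frame = [
    [(60, 120, 200), (220, 170, 140)],
    [(128, 128, 128), (30, 60, 180)],
]
masked = extract_glove(frame)
```

Thresholding on hue rather than raw RGB makes the mask less sensitive to brightness changes, which is the main reason a distinctly colored glove is convenient here.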

Next, I started generating training and testing datasets with this method, using two labels: gesture and no_gesture. I currently have about 300 training images and about 100 testing images per label. The images below are from the gesture label in the datasets folder:

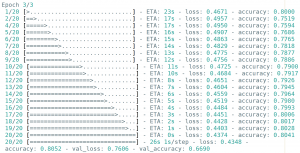

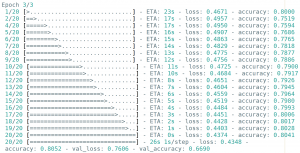
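To keep track of how many images each label has, a small helper like the one below could count them. This is only a sketch under assumptions: the layout `datasets/<split>/<label>/` (for example `datasets/train/gesture`), the split names, and the accepted file extensions are all hypothetical, since only the datasets folder and the two label names come from my actual setup.

```python
from pathlib import Path

LABELS = ("gesture", "no_gesture")
# Assumed image extensions; adjust to whatever the capture script writes.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}

def count_images(root):
    """Count images per (split, label) under an assumed root/<split>/<label>/ layout."""
    counts = {}
    for split_dir in sorted(Path(root).iterdir()):
        if not split_dir.is_dir():
            continue
        for label in LABELS:
            label_dir = split_dir / label
            if label_dir.is_dir():
                n = sum(1 for p in label_dir.iterdir()
                        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)
            else:
                n = 0  # a missing label folder counts as zero images
            counts[(split_dir.name, label)] = n
    return counts
```

Calling `count_images("datasets")` would return a dictionary keyed by `(split, label)`, which makes it easy to spot an imbalance between the two labels before training.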

I then implemented the CNN model and training algorithm and began training. I adjusted the parameters to see which gave the best results. The highest testing accuracy came from a batch size of 15, 20 steps, and 3 epochs: validation accuracy varied from 65% to 69%, with about 80% training accuracy. The printout of the last epoch is here:

Next week, I will first incorporate this into the overall pipeline and complete a finished version of the product before moving on to improving the model and its accuracy. I suspect the accuracy will improve a lot with multi-category classification instead of binary, because the no_gesture label is very broad: it covers everything that is not a gesture.
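As a sanity check on the training settings reported above (batch size 15, 20 steps, 3 epochs), the arithmetic below estimates how much of the training set each epoch covers. It assumes that "steps" means batches per epoch and that the training set has about 600 images (roughly 300 per label); both are assumptions, and `epoch_coverage` is a hypothetical helper rather than part of my training code.

```python
def epoch_coverage(num_images, batch_size, steps_per_epoch, epochs):
    """Summarize how many samples are drawn per epoch and in total."""
    samples_per_epoch = batch_size * steps_per_epoch
    return {
        "samples_per_epoch": samples_per_epoch,
        "fraction_of_dataset_per_epoch": samples_per_epoch / num_images,
        "total_samples_seen": samples_per_epoch * epochs,
    }

# Assumed: ~300 training images for each of the two labels.
summary = epoch_coverage(num_images=600, batch_size=15,
                         steps_per_epoch=20, epochs=3)
print(summary)
```

Under these assumptions each epoch draws 300 samples, about half of the training set, so increasing the number of steps may be worth trying when I return to tuning the model.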