This week, I looked over the peer feedback from our design review presentation and discussed some plan changes with my group. I have decided to train my gesture detection ML model in Python and then export it as a JSON or TXT file; since training is done separately, doing it in Python doesn’t affect application speed. In the actual application, we will use C++ to run a classifier with the exported model.
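A minimal sketch of the train-in-Python, export-to-JSON flow might look like the following. The nearest-centroid model, the two-number feature vectors, the gesture labels, and the file name `gesture_model.json` are all assumptions for illustration, not the actual model or data.

```python
import json


def train_centroids(samples):
    """Average the feature vectors of each label (a nearest-centroid model)."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def export_model(centroids, path):
    """Write the trained parameters to a JSON file the application can parse."""
    with open(path, "w") as f:
        json.dump({"type": "nearest_centroid", "centroids": centroids}, f, indent=2)


def load_model(path):
    """Read the centroids back (the C++ side would do the equivalent parse)."""
    with open(path) as f:
        return json.load(f)["centroids"]


def classify(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def dist(label):
        return sum((a - b) ** 2 for a, b in zip(centroids[label], features))
    return min(centroids, key=dist)


if __name__ == "__main__":
    # Hypothetical training data: (feature vector, gesture label)
    training = [
        ([0.9, 0.1], "open_palm"),
        ([0.8, 0.2], "open_palm"),
        ([0.1, 0.9], "fist"),
        ([0.2, 0.8], "fist"),
    ]
    export_model(train_centroids(training), "gesture_model.json")
    print(classify(load_model("gesture_model.json"), [0.85, 0.15]))
```

The point of this split is that only `load_model` and `classify` need a C++ counterpart; the training code never ships with the application.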

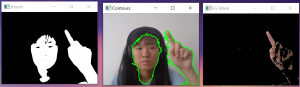

In terms of coding, I have implemented three types of masking: one that thresholds by detecting pixels within a skin-tone color range, one that contours areas of skin tone, and one that does dynamic background subtraction, essentially detecting motion between frames. The screenshot above shows them for demo purposes.
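To make the three masks concrete, here is a dependency-free sketch on tiny frames stored as nested lists of `(R, G, B)` tuples. It is not the actual implementation: the color range, the running-average background model, and the `alpha` and `threshold` values are assumptions, and a real pipeline would more likely use an image-processing library.

```python
def skin_mask(frame, lower, upper):
    """Thresholding: 1 where every channel lies within [lower, upper]."""
    return [[int(all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)))
             for px in row] for row in frame]


def contour_mask(mask):
    """Contouring: keep mask pixels that touch a non-mask pixel or the border."""
    h, w = len(mask), len(mask[0])

    def outside(y, x):
        return not (0 <= y < h and 0 <= x < w) or mask[y][x] == 0

    return [[int(mask[y][x] == 1 and any(outside(y + dy, x + dx)
                 for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1))))
             for x in range(w)] for y in range(h)]


class RunningBackground:
    """Dynamic background subtraction against a running-average background."""

    def __init__(self, first_frame, alpha=0.1, threshold=30):
        self.background = [[[float(c) for c in px] for px in row]
                           for row in first_frame]
        self.alpha = alpha          # how quickly the background adapts
        self.threshold = threshold  # minimum channel change that counts as motion

    def apply(self, frame):
        mask = []
        for bg_row, row in zip(self.background, frame):
            mask_row = []
            for bg_px, px in zip(bg_row, row):
                diff = max(abs(c - b) for c, b in zip(px, bg_px))
                mask_row.append(int(diff > self.threshold))
                # Blend the new pixel into the background estimate.
                for i, c in enumerate(px):
                    bg_px[i] += self.alpha * (c - bg_px[i])
            mask.append(mask_row)
        return mask


if __name__ == "__main__":
    black, skin = (0, 0, 0), (200, 150, 120)  # hypothetical colors
    first = [[black] * 3 for _ in range(3)]
    second = [[black, skin, black], [black, skin, black], [black] * 3]
    subtractor = RunningBackground(first)
    print(skin_mask(second, (150, 100, 80), (255, 200, 170)))
    print(subtractor.apply(second))
```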

Next week, I will work on combining information from the three masks to 1) filter out noise and 2) focus only on the hand while ignoring the face.
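One possible direction, sketched here purely as an illustration and not as the chosen approach, is to AND the skin-tone mask with the motion mask and then drop isolated pixels. This assumes the face moves less than the gesturing hand, which may not hold in practice; the function names and the `min_neighbors` value are hypothetical.

```python
def combine_masks(skin, motion):
    """Keep only pixels that are both skin-colored and moving."""
    return [[s & m for s, m in zip(skin_row, motion_row)]
            for skin_row, motion_row in zip(skin, motion)]


def remove_speckles(mask, min_neighbors=2):
    """Noise filter: drop set pixels with too few set pixels among their 8 neighbors."""
    h, w = len(mask), len(mask[0])

    def neighbors(y, x):
        return sum(mask[ny][nx]
                   for ny in range(max(0, y - 1), min(h, y + 2))
                   for nx in range(max(0, x - 1), min(w, x + 2))
                   if (ny, nx) != (y, x))

    return [[int(mask[y][x] == 1 and neighbors(y, x) >= min_neighbors)
             for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    # Hypothetical masks: a moving hand (top left), a static face (top right),
    # and one noisy pixel (bottom right) that shows up in both masks.
    skin = [[1, 1, 0, 1, 1],
            [1, 1, 0, 1, 1],
            [0, 0, 0, 0, 0],
            [0, 0, 0, 0, 1]]
    motion = [[1, 1, 0, 0, 0],
              [1, 1, 0, 0, 0],
              [0, 0, 0, 0, 0],
              [0, 0, 0, 0, 1]]
    for row in remove_speckles(combine_masks(skin, motion)):
        print(row)
```

In this toy input the static face region drops out at the AND step and the lone noisy pixel drops out at the neighbor filter, leaving only the hand block.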