This week, my main focus was improving the gesture detection. In our most recent demo, whenever the hand was tilted or angled, the drawing had many small gaps where the gesture was not detected even though it should have been. After a lot of testing with different hand landmarks, I managed to reduce those gaps considerably. In the end, I switched to using the Euclidean distance between finger landmarks instead of just their x or y distance, and I adjusted both the threshold and the specific joints used in the detection. I also tested other candidate hand gestures, such as a pinching gesture and a two-finger point gesture, as alternatives, but they seem to be neither as easy to make nor as effective as our original one.
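
To illustrate why the switch to Euclidean distance helps with tilted hands, here is a minimal sketch. It is not our actual detection code: the function names, landmark coordinates, and thresholds are all made up for the example, and it only models a tilt within the image plane.

```python
import math

def x_only_close(a, b, threshold):
    """Axis-only check: compares just the x coordinates of two landmarks."""
    return abs(a[0] - b[0]) < threshold

def euclidean_close(a, b, threshold):
    """Euclidean check: compares the straight-line distance between two landmarks."""
    return math.hypot(a[0] - b[0], a[1] - b[1]) < threshold

def rotate(point, pivot, degrees):
    """Rotate a point about a pivot to simulate tilting the hand in the image plane."""
    angle = math.radians(degrees)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (
        pivot[0] + dx * math.cos(angle) - dy * math.sin(angle),
        pivot[1] + dx * math.sin(angle) + dy * math.cos(angle),
    )

# Hypothetical normalized (x, y) positions of two finger landmarks (assumed values).
joint_a = (0.50, 0.50)
joint_b = (0.50, 0.56)

# Assumed thresholds, chosen only for this example.
X_THRESHOLD = 0.02
EUCLIDEAN_THRESHOLD = 0.08

for tilt in (0, 30, 60, 90):
    tilted_b = rotate(joint_b, joint_a, tilt)
    print(
        f"tilt={tilt:>2} deg",
        f"x-only={x_only_close(joint_a, tilted_b, X_THRESHOLD)}",
        f"euclidean={euclidean_close(joint_a, tilted_b, EUCLIDEAN_THRESHOLD)}",
    )
```

In this toy setup, the x-only check passes for the upright hand but fails as soon as the hand is tilted, while the Euclidean check keeps passing because rotating the hand does not change the distance between the two joints. A tilt toward or away from the camera does shrink the projected distance, which is one reason the threshold and the choice of joints still need tuning.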

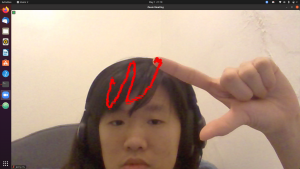

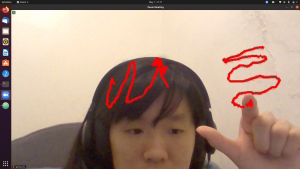

In the images above, we can see that even when the hand transitions naturally from the “proper” gesture to a tilted or angled version of it while drawing, there are no gaps in the curves. The extra scribbling at the end is simply because I had trouble keeping my fingers completely still while taking the screen capture.

Additionally, I worked on (and will keep working on) the final presentation slides with the rest of my team so that they are ready by Sunday midnight.