- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

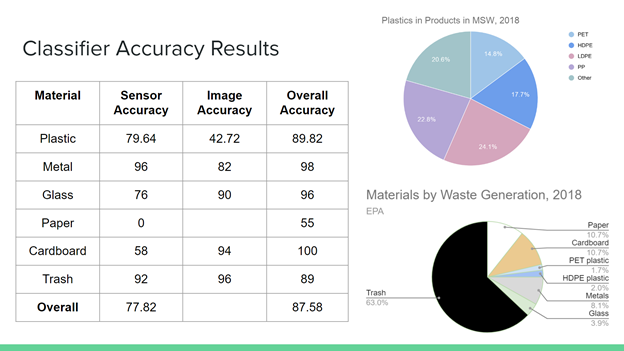

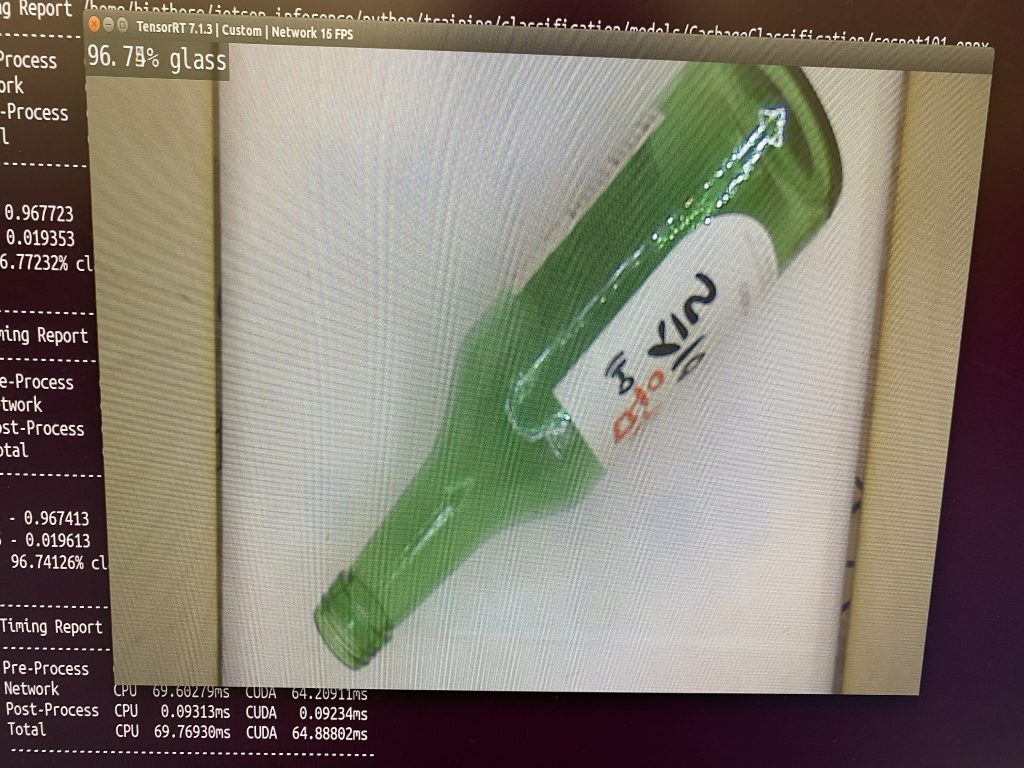

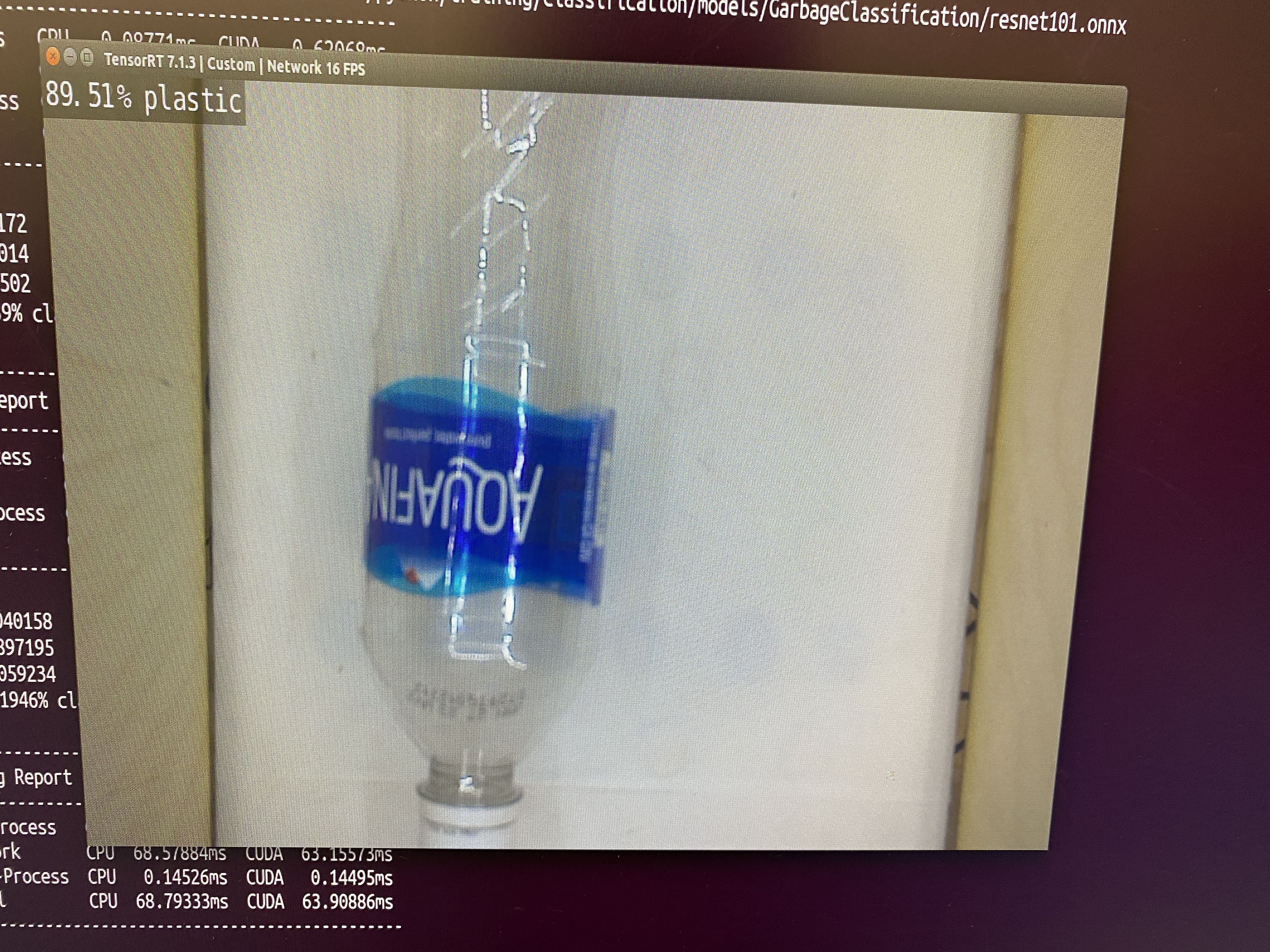

Classifying items may be very difficult because we need to distinguish between different materials (e.g. metal vs. glass vs. paper). It is hard to estimate how effective our classifier will be until we begin implementing it, so we are adding more sensor inputs. In addition to the camera, we are now using a variety of sensors that can help us tell the materials apart. We plan to use an inductive sensor to detect metals. We looked into capacitive sensors for detecting other materials, but these can be very expensive and have a very short sensing range. Our backup sensors include an LDR (light-dependent resistor) sensor for detecting plastics and glass.
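To illustrate how the extra sensors could back up the camera, here is a minimal sketch of one possible fusion rule. It is not our implementation: the names `SensorReadings` and `classify_material`, the thresholds, and the idea of reading the LDR as a normalized light-transmission value are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SensorReadings:
    """One snapshot of the sensor inputs (all field names are hypothetical)."""
    camera_label: str          # label proposed by the image classifier
    camera_confidence: float   # classifier confidence, 0.0 to 1.0
    metal_detected: bool       # output of the inductive sensor
    light_transmission: float  # LDR reading, normalized: 0.0 opaque, 1.0 clear


# Assumed thresholds; real values would have to come from calibration.
CONFIDENCE_THRESHOLD = 0.8
TRANSPARENCY_THRESHOLD = 0.5


def classify_material(r: SensorReadings) -> str:
    """Combine the camera with the other sensors using simple priority rules."""
    # The inductive sensor is a direct physical test, so it overrides the camera.
    if r.metal_detected:
        return "metal"
    # Trust the camera only when it is confident.
    if r.camera_confidence >= CONFIDENCE_THRESHOLD:
        return r.camera_label
    # Fall back to the LDR: a mostly transparent item is likely plastic or glass.
    if r.light_transmission >= TRANSPARENCY_THRESHOLD:
        return "plastic_or_glass"
    return "unknown"
```

For example, an item the camera labels as paper with low confidence would still be classified as metal if the inductive sensor fires, which is the kind of correction the extra sensors are meant to provide.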

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Since the abstract, we have narrowed the scope of our project and added sensor inputs beyond the camera. In particular, we have more clearly defined which items count as recyclable (e.g. limiting recyclable plastics to plastic water bottles only). This changes the use case for our project but makes classification more feasible. We have also reduced the number of sorting categories, because having multiple categories for recyclables did not significantly improve our use case and overcomplicated our mechanism.

We also changed our mechanism for moving the item from the sorting platform to the recycling or non-recycling bin. Instead of a platform that tilts the item into one of the two bins, our mechanism will be a box that slides along a motor track and pushes the item into one of the bins. This change was necessary because our previous design did not use sensors that need to be in close proximity to the sorting platform or attached to it. There are no costs from this change because the sliding mechanism will still be able to put an item into one of the bins.

We also changed our use case from individual households to the CMU campus, because it seemed more reasonable that a college campus would be focused on improving recycling than individuals who don’t already recycle (and who, consequently, would not be interested in buying our product).

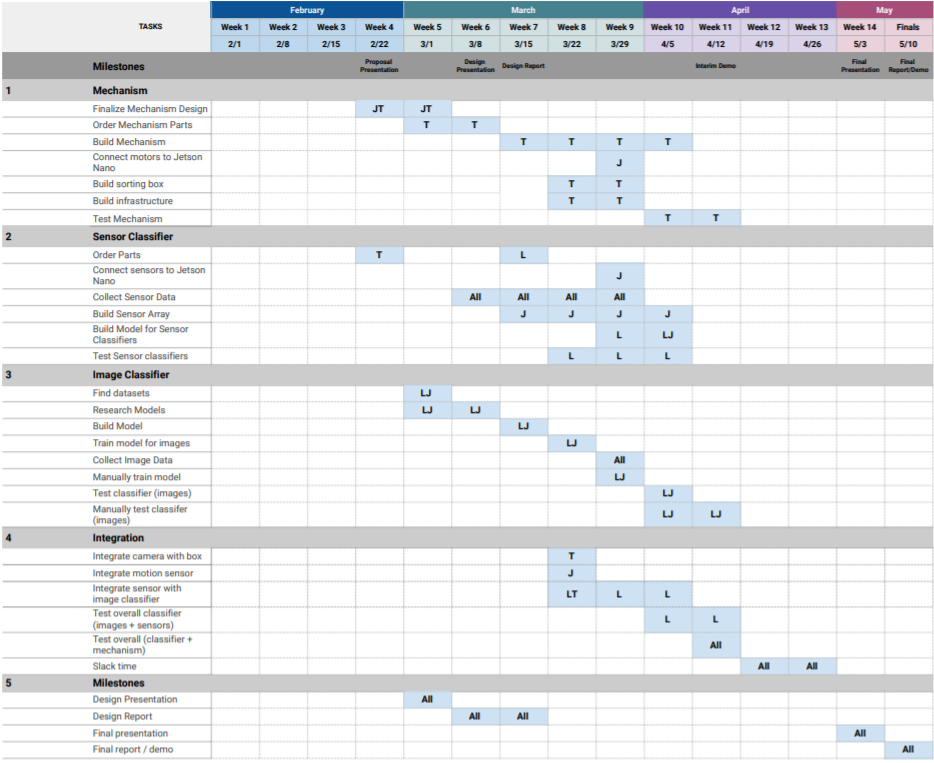

- Provide an updated schedule if changes have occurred.

We are still on track to finish our proposal presentation this weekend and begin the design process next week.

- This is also the place to put some photos of your progress or to brag about a component you got working.