This week, I helped record the demo video and finished the poster.

My progress is on track.

Next week, I’ll be working on the final report.

Jessica Lew, Lauren Tan, Tate Arevalo

This week, Jessica and I retrained the image classifier using ResNet-101 instead of ResNet-50, since the previous accuracy wasn’t very good. I updated the sensor classifier to reduce fluctuation between readings and make its output more consistent. I also fixed the code for the switch that detects whether someone closed the lid; previously, it registered both the lid opening and the lid closing. I helped test the classifiers with real objects and adjusted some of the image classifier’s confidence levels based on the results. I also helped choose the weights for the different categories that we used to calculate the accuracy of each classifier (sensor, image, and overall). We decided to weight each category by its % of MSW (municipal solid waste) generation, which is the percentage of all waste generated that a given material makes up. For our slides, I calculated the accuracies of the different classifiers and plotted the accuracy per epoch of the ResNet-101 model.
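
As a rough illustration of how per-category weights can be rolled up into one accuracy number, the sketch below computes a weighted average of per-category accuracies, where each weight is that material’s share of MSW generation. The function name, category names, and all of the numbers are hypothetical placeholders, not our measured results.

```python
# Hypothetical sketch: weighted overall accuracy, where each category's weight
# is its share of municipal solid waste (MSW) generation. All numbers below
# are placeholders for illustration, not the project's measured values.


def weighted_accuracy(per_category_accuracy, msw_share):
    """Return sum(w_c * acc_c) / sum(w_c) over the evaluated categories.

    Dividing by the total weight means the shares don't have to add up to
    100%, since only a subset of all waste materials is being evaluated.
    """
    total_weight = sum(msw_share[c] for c in per_category_accuracy)
    if total_weight == 0:
        raise ValueError("evaluated categories have zero total weight")
    weighted_sum = sum(
        msw_share[c] * acc for c, acc in per_category_accuracy.items()
    )
    return weighted_sum / total_weight


if __name__ == "__main__":
    # Placeholder MSW shares (% of all waste generated) and accuracies.
    shares = {"paper": 20.0, "plastic": 10.0, "metal": 10.0, "glass": 5.0}
    accuracies = {"paper": 0.90, "plastic": 0.70, "metal": 0.80, "glass": 0.60}
    print(round(weighted_accuracy(accuracies, shares), 4))
```

Normalizing by the total weight keeps the result on a 0 to 1 scale even though the categories we evaluate only cover part of all waste generated.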

My progress is on track.

Next week, I hope to start recording videos of our project for the video demo.

I helped retrain the image classifier on our extended dataset, which includes some of our own images. Plastic bottles are now classified fairly accurately, which is good because the capacitive sensors couldn’t detect plastic bottles. I also tried to improve the accuracy of the sensor classifier, but may have made some readings less consistent than before, since they fluctuate a lot, especially for metals. The glass sensors are fairly consistent and accurate, though. I also updated the training and validation datasets for the materials we took more images of, since the retrained model only had additional images for certain categories, not all of them.
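
One common way to damp fluctuating readings like the metal ones above is to classify over a short window of samples and take a majority vote instead of trusting a single reading. The sketch below is a hypothetical illustration of that technique, not the code in our sensor classifier; the class name, window size, and the `"metal"`/`"none"` labels are assumptions.

```python
from collections import Counter, deque


class MajorityVoteSmoother:
    """Hypothetical smoother: report the most common label over the last
    `window` readings, so one noisy reading can't flip the output."""

    def __init__(self, window=5):
        if window < 1:
            raise ValueError("window must be at least 1")
        # Oldest readings drop off automatically once the deque is full.
        self.readings = deque(maxlen=window)

    def update(self, label):
        """Add one raw sensor reading and return the smoothed label."""
        self.readings.append(label)
        # most_common(1) returns [(label, count)]; on a tie, the label that
        # was first encountered in the current window wins.
        return Counter(self.readings).most_common(1)[0][0]


if __name__ == "__main__":
    smoother = MajorityVoteSmoother(window=5)
    for raw in ["metal", "none", "metal", "metal", "none"]:
        smoothed = smoother.update(raw)
    print(smoothed)
```

A larger window gives steadier output but reacts more slowly when an object is placed on or removed from the platform, so the window size is a trade-off.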

My progress is on schedule.

In the next week, I’d like to help retrain the image classifier on the dataset with all of the images Tate added of the different materials we brought.

After wiring the sensors to a solderless breadboard, I found that some of the connections were loose, so I transferred all of the sensor wiring to a solderable breadboard and soldered all of the wires in place. I also soldered all of the connections for powering the circuit and for connecting the sensor outputs to the Jetson Nano. This week, I also prepared the platform for background subtraction by making the top of the sensor platform white: I attached 2 pieces of paper on top of the sensors. This works because the capacitive sensors can’t detect paper. I recalibrated some of the sensors for glass and HDPE plastics, since some weren’t behaving as expected; this may be because I calibrated the sensors’ sensitivity before attaching the paper on top of them.

My progress is on track, but I may need to debug some circuit issues with the sensors, since a few bugs popped up. I also need to retrain the image classifier model on AWS to improve our current image classification accuracy.

Next week, I hope to have the sensors ready for the interim demo and connected to the Jetson Nano.

This week, I mostly spent time getting the sensor platform ready to attach to the exterior box. I helped laser cut the wood pieces for the sensor platform using Tate’s CAD design. The sensor wires were too long (multiple feet each), so I cut them all to around 8-10″ and soldered each one to a sturdier additional wire so they could be inserted into a breadboard properly (the original wires are too flimsy). I also calibrated all of the capacitive sensors to the materials we want to detect (HDPE plastics and glass) for both the upper and lower bounds (3 per bound). While calibrating, I found that the capacitive sensors’ sensitivity couldn’t be adjusted enough to recognize PET plastics, so the sensors will only be able to detect HDPE plastics. This narrows the scope of our plastic detection, but I’m hoping the image classifier will be able to recognize common items made of PET plastics, like plastic water bottles. I also tried to follow a tutorial for training our ResNet model, but ran into some issues running the code on AWS.
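
Since the capacitive sensors can only pick up HDPE, the plan is for the image classifier to cover PET items such as water bottles. Below is a minimal sketch of how the two outputs could be combined into one decision, assuming the sensor classifier reports a material (or nothing) and the image classifier reports its top label with a confidence. The function name, label strings, and the 0.8 threshold are hypothetical, and our actual decision logic may differ.

```python
# Hypothetical fusion rule: trust a positive sensor reading first, then fall
# back to the image classifier only when it is confident enough.
RECYCLABLE_LABELS = {"metal", "glass", "hdpe", "pet", "paper"}


def classify(sensor_label, image_label, image_confidence, threshold=0.8):
    """Return (label, source) for one object on the platform.

    sensor_label: material reported by the sensor classifier, or None if no
        sensor fired (e.g. a PET bottle the capacitive sensors can't see).
    image_label, image_confidence: top prediction of the image classifier.
    threshold: hypothetical minimum confidence to accept the image label.
    """
    if sensor_label is not None:
        return sensor_label, "sensor"
    if image_label in RECYCLABLE_LABELS and image_confidence >= threshold:
        return image_label, "image"
    return "non-recyclable", "default"


if __name__ == "__main__":
    # A PET water bottle: no sensor fires, but the camera is confident.
    print(classify(None, "pet", 0.92))
```

Defaulting to "non-recyclable" when neither source is confident is one conservative choice; it avoids contaminating the recycling bin at the cost of sometimes discarding a recyclable item.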

I am slightly behind since I didn’t finish the training on AWS, but I’ll be trying more training tutorials meant to run on AWS this weekend.

Next week, I hope to finish the AWS training for the image classifier or, alternatively, find a more accurate algorithm to train on the Nano instead (Jessica already trained a model on the Nano, but the results weren’t very accurate). I also want to do object detection and background subtraction on images captured by the Raspberry Pi camera, since we got the camera connected to the Nano this week.
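
For the background subtraction and object detection step, one simple approach with a fixed camera is to compare each frame against a reference image of the empty platform and take the bounding box of the pixels that changed by more than a threshold. The sketch below shows that idea on plain grayscale pixel lists; the function names and the threshold of 30 are assumptions, and a real pipeline would more likely use an image library such as OpenCV.

```python
def subtract_background(frame, background, threshold=30):
    """Return a binary mask: 1 where |frame - background| > threshold.

    frame and background are same-sized 2D lists of grayscale values (0-255);
    background is a hypothetical reference shot of the empty platform.
    """
    if len(frame) != len(background) or any(
        len(f) != len(b) for f, b in zip(frame, background)
    ):
        raise ValueError("frame and background must have the same shape")
    return [
        [1 if abs(p - q) > threshold else 0 for p, q in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


def bounding_box(mask):
    """Return inclusive (top, left, bottom, right) of foreground pixels,
    or None if nothing differs from the background."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for row in mask for c, v in enumerate(row) if v]
    return min(rows), min(cols), max(rows), max(cols)


if __name__ == "__main__":
    empty = [[255] * 4 for _ in range(4)]  # bright, empty platform
    frame = [row[:] for row in empty]
    frame[1][1] = frame[1][2] = frame[2][1] = 40  # dark object pixels
    print(bounding_box(subtract_background(frame, empty)))
```

The bounding box could then be used to crop the frame before passing it to the image classifier, so the model sees mostly the object rather than the platform.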

This week, I helped wire 16 inductive sensors and some of the 6 capacitive sensors, and we measured their output voltage and current to ensure the output voltage was within the limit for each GPIO pin on the Jetson Xavier (3.3V). I also met with Jessica to connect the motor and motor driver to the Jetson Xavier and test them, but we couldn’t get them working. I also helped connect the sensors to the Jetson Xavier and confirm that the readings on the Xavier were as expected: the voltages were within the 3.3V maximum, and each GPIO pin read “HIGH” when no object was touching its sensor and “LOW” when an object was touching it.
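
The readings above are active-low: a pin reads HIGH when nothing is touching the sensor and LOW when an object is present. Below is a minimal sketch of turning a set of raw pin readings into a list of triggered sensors, plus the 3.3V check. The pin numbers, sensor names, and passing readings in as a dict are hypothetical; on the Xavier, the raw values would come from something like `GPIO.input(pin)` in NVIDIA’s Jetson.GPIO library instead of being hard-coded.

```python
GPIO_MAX_VOLTS = 3.3  # input voltage limit for the Jetson Xavier GPIO pins
HIGH, LOW = 1, 0


def detected_sensors(pin_readings, pin_to_sensor):
    """Return sorted names of sensors that detect an object.

    The sensors are active-low, so a LOW reading means "object present".
    pin_readings: {pin: HIGH or LOW}; pin_to_sensor: {pin: sensor name}.
    """
    return sorted(
        pin_to_sensor[pin] for pin, level in pin_readings.items() if level == LOW
    )


def voltage_safe(measured_volts):
    """True if a measured sensor output is within the GPIO input limit."""
    return 0.0 <= measured_volts <= GPIO_MAX_VOLTS


if __name__ == "__main__":
    pins = {7: "inductive_1", 11: "inductive_2", 13: "capacitive_1"}  # hypothetical
    print(detected_sensors({7: HIGH, 11: LOW, 13: LOW}, pins))
```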

My progress is slightly behind because I’ll be training the model on AWS on Sunday. However, if this ends up taking a lot of time, I may train it on the Jetson Xavier instead. I will be putting in more time to get this working.

Next week, I hope to finish training the model for the image classifier and start writing the code for background subtraction and object detection.

The most significant risks to the success of this project involve interfacing parts with the Jetson Xavier, like the motor and motor driver. The team is managing these risks by allocating more time in the schedule to connect these parts. Since we have both a Jetson Nano and a Jetson Xavier, our contingency plan is to have multiple people work on this in parallel if necessary.

No changes have been made to the existing design of the system.

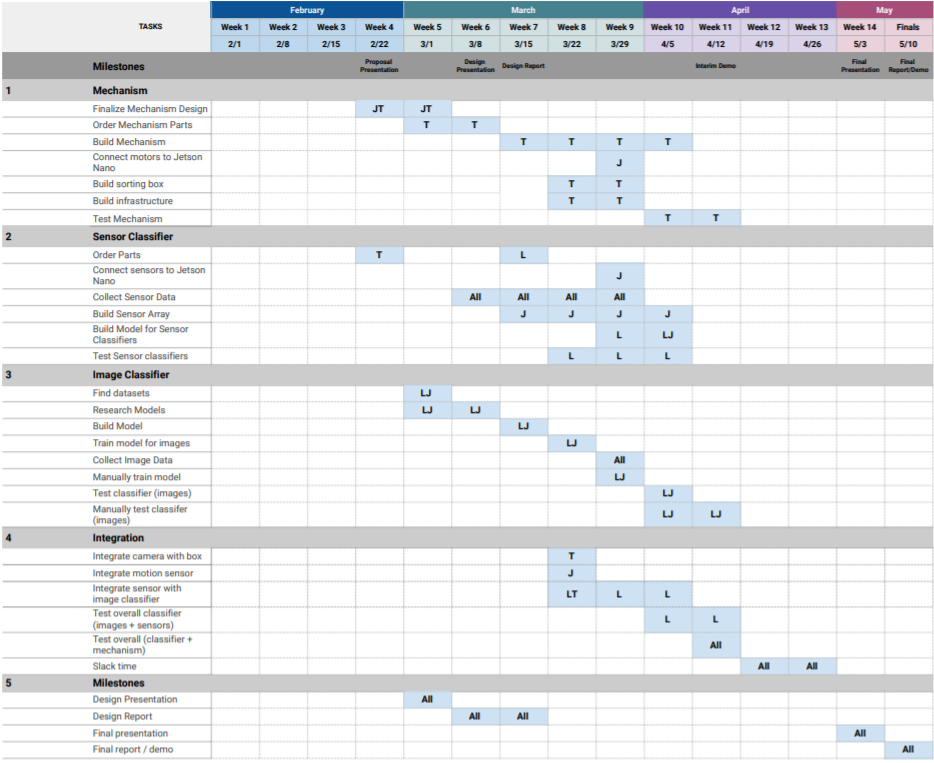

We are pushing back connecting the motor and motor driver to the Jetson Xavier by one week (from the week of 3/22 to the week of 3/29). We attempted to connect them this week but haven’t gotten the motor to move yet.

This week, I helped update Tate on the sensor placement design and helped him prepare for the design presentation by giving feedback during practice runs on points he missed or needed to include. I also helped solder the wires for the load sensors and soldered the header pins onto the load sensor module. For a team meeting to test all of the sensors, I brought a bag of recyclables containing the different materials we needed to test every sensor (like metal cans, glass bottles, recyclable plastics, and paper cartons). I helped test some of the sensors by placing these objects close to each sensor. As of this week, all of the sensors except the load sensors have been tested. I also started writing a rough draft of the key points of our classifier design and design requirements for the design report.

My progress is on schedule.

I hope to have the final draft of the classifier design (with a detailed software spec) in the design report next week, and start building the model for the image classifier.

The most significant risks are the sensing distances of some of the sensors the team tested. For example, the LDR sensor has a sensing range of 30 cm, but objects are only detected if they are placed directly above it. To address this, we will adjust the number of each type of sensor on our platform. Some of the sensors also can’t distinguish between the materials we want them to (such as the capacitance sensor for paper vs. non-paper materials), so we will need to either find another sensor for those materials or adjust the scope of the recyclable categories as needed. Other sensors may not work as intended (e.g. the LDR sensor can’t detect all types of glass), so we may also change the type of sensor used for certain recyclable categories (e.g. use a capacitance sensor for glass). Our contingency plan for the limited sensing ranges includes purchasing more sensors if the budget allows.

We changed the number of some types of sensors, and we will be changing how some recyclable categories are detected. After testing, we determined that the IR sensor isn’t useful for this project, since it can’t distinguish between any of the recyclable categories, so we may remove it from our system spec entirely. Changing the number of other kinds of sensors was also necessary because some sensors can’t distinguish between certain recyclable categories as intended. For example, the LDR sensor can only detect clear glass, not colored glass, so we may use capacitance sensors instead to detect all types of glass. The cost of this change is monetary, since some sensors, like capacitance sensors, are more expensive than others. We will mitigate these costs by analyzing our budget further as we finalize how many sensors of each type we will use on the bottom of our platform (where the object is placed).

Here is a picture of the setup we used to test the sensors (this was the capacitance sensor):

This week, I made most of the design presentation slides and added more details about how the image classifier will work with the sensor classifier to produce the final classification output of recyclable vs. non-recyclable. On Saturday, I met with only Jessica for a few hours (Tate said he was busy, so he did the Team Status Report instead) to work out how many sensors of each type (inductive, capacitive, etc.) we needed and how many GPIO pins we would use for each kind of sensor. Jessica and I finalized the placement of each type of sensor on the sorting platform, based on sensing ranges, our budget, and our assumed minimum size for objects the trash can will detect. I also added more images to some of the presentation slides, based on feedback from the previous presentation that some slides were too empty.

My progress is behind; the mechanism hasn’t been finalized. This week, Jessica asked Tate to finalize certain parts of the mechanism so we could have it ready for the design presentation, but that hasn’t happened. Tate has been busy, so he hasn’t been able to meet with us or contribute much outside of class. Jessica and I work on the slides during the meetings he misses. We typically update him by text or at a later meeting if he’s available; this week we updated him on the sensor placement we came up with and the number of GPIO pins needed for each sensor, which was necessary because he’ll be presenting next week.

I hope to finalize the other components needed (including the mechanism) for the project, so the team can start buying more parts. I also want to test the sensors that we received.