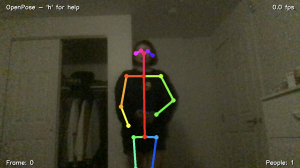

This week I’ve been focusing on writing a testing script so that we have some quantitative metrics for our final presentation next week. The script runs the matching algorithm on different models wearing the same piece of clothing, and we then manually select the superimposed images that look passable. Marios suggested this approach during our weekly meeting, and we all agreed that it made the most sense for testing usability and precision. So far I have run the testing script with one shirt on 2032 test images of models from the warping model. We will select the visually passable images and include the results and observations in our final presentation. Note that this covers only superimposition, not warping, since warping has yet to be integrated. I’ve included some example outputs below.
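Once the outputs have been labeled by hand, the quantitative metric is simply the fraction of images judged passable. The snippet below is a minimal sketch of that tally. It assumes a hypothetical CSV of manual labels with columns `image` and `passable` (`1` for passable, `0` otherwise). That file format and the `pass_rate` helper are illustrative only and are not part of our actual testing script.

```python
import csv
from typing import Iterable


def pass_rate(lines: Iterable[str]) -> tuple[int, int, float]:
    """Tally manually labeled superimposition results.

    `lines` is any iterable of CSV text lines (e.g. an open file) with a
    header row containing the hypothetical columns `image` and `passable`.
    Returns (number passable, total labeled, fraction passable).
    """
    passable = 0
    total = 0
    for row in csv.DictReader(lines):
        total += 1
        # A row counts as passable only if it was labeled "1".
        if row["passable"].strip() == "1":
            passable += 1
    fraction = passable / total if total else 0.0
    return passable, total, fraction


# Small in-memory example standing in for a real labels file.
example = ["image,passable", "model_0001.png,1", "model_0002.png,0", "model_0003.png,1"]
print(pass_rate(example))
```

With a real labels file, the same function could be called as `pass_rate(open("labels.csv", newline=""))`, where `labels.csv` is again a hypothetical name.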

Unpassable example:

Passable examples: