This week, I upgraded our User Interface by adding an illegal move notification feature and a move recommendation feature. Our team finished the integration and verified that all of the features we planned are working.

Jee Woong’s Status Report for 05/01/2021

This week, I focused on integrating the FPGA with the UI and upgraded parts of the UI. As you can see from the image below, I created a light bulb button that shows a recommended move when the user wants one. When this button is pressed, the UI communicates with the FPGA and receives the move that should be recommended.

Furthermore, I updated the timer so that each player has their own timer, and the active timer switches every turn. After I finished updating the UI, Joseph and I started testing the game to collect metrics. We had some minor issues with background subtraction, but we solved them by tuning some of the parameters used to detect chess pieces.
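A per-player timer like the one described above can be sketched as a two-player clock in which only the active player's time counts down and the turn is handed over after each move. This is a minimal sketch under that assumption, not the project's code; the `ChessClock` class and its method names are hypothetical, and the time source is injectable so the example runs deterministically.

```python
import time


class ChessClock:
    """Hypothetical two-player clock: only the active player's time runs."""

    def __init__(self, seconds_per_player, now=time.monotonic):
        self._now = now  # injectable time source (useful for testing)
        self.remaining = {"white": float(seconds_per_player),
                          "black": float(seconds_per_player)}
        self.active = "white"
        self._turn_started = self._now()

    def _elapsed(self):
        """Seconds spent so far in the current turn."""
        return self._now() - self._turn_started

    def time_left(self, player):
        """Remaining time, counting the running turn for the active player."""
        left = self.remaining[player]
        if player == self.active:
            left -= self._elapsed()
        return max(left, 0.0)

    def switch_turn(self):
        """Charge the finished turn to the active player, then hand over."""
        self.remaining[self.active] -= self._elapsed()
        self.active = "black" if self.active == "white" else "white"
        self._turn_started = self._now()


# Usage with a fake clock so the numbers are predictable.
fake_time = [0.0]
clock = ChessClock(300, now=lambda: fake_time[0])
fake_time[0] = 12.5
clock.switch_turn()          # white's move took 12.5 s
fake_time[0] = 20.0          # black has been thinking for 7.5 s
print(clock.time_left("white"), clock.time_left("black"))  # 287.5 292.5
```

In a Pygame loop, `time_left` would be read once per frame to redraw both timers, and `switch_turn` would be called whenever a move is confirmed.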

Next week, we will finish displaying move recommendations and detecting illegal moves in the UI.

Team Status Report for 04/24/2021

This week, our team mainly focused on integrating our overall system. We met to integrate the entire system and tested the integration of the laptop, UI, and FPGA. We tested the integration on both macOS and Windows to make sure our game supports both operating systems. The integration was successful, and the laptop, UI, and FPGA can now communicate with one another. Fortunately, the integration did not take long, so next week we will continue refining the integration and improving the individual components.

Jee Woong’s Status Report for 04/24/2021

Joseph and I mainly focused on integrating the Raspberry Pi with the UI. After integrating them, we found that the Raspberry Pi was not powerful enough to run Pygame and the Computer Vision algorithms on video. So, we thought performance might improve if we used photos instead of video.

Thus, I created a button that the user presses after making a move; it takes a photo of the board and compares it with the previous photo to find the difference between the two frames. Although performance improved slightly, the Raspberry Pi still could not handle Pygame.
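The photo comparison can be sketched as frame differencing over the 64 squares: measure how much each square changed between the previous and the new photo, and report the squares that changed the most. This is a minimal illustration, not the project's code; it assumes both photos are grayscale, already cropped so the board fills the frame, and split into equal squares of `square_px` pixels. The function name and the threshold value are hypothetical.

```python
def changed_squares(before, after, square_px, threshold=30.0):
    """Return (row, col) of squares whose mean absolute pixel difference
    between two grayscale frames exceeds `threshold`, largest change first.

    Frames are lists of pixel rows; the board is assumed to fill the frame,
    with each square exactly `square_px` x `square_px` pixels.
    """
    scores = []
    for row in range(8):
        for col in range(8):
            total = 0
            for y in range(row * square_px, (row + 1) * square_px):
                for x in range(col * square_px, (col + 1) * square_px):
                    total += abs(after[y][x] - before[y][x])
            mean = total / (square_px * square_px)
            if mean > threshold:
                scores.append((mean, row, col))
    scores.sort(reverse=True)
    return [(row, col) for _, row, col in scores]


# Usage: synthetic 32x32 frames (4 px per square) where a bright "piece"
# moves from square (6, 4) to square (4, 4).
SQ = 4


def blank():
    return [[100] * (8 * SQ) for _ in range(8 * SQ)]


def paint(img, row, col, value):
    for y in range(row * SQ, (row + 1) * SQ):
        for x in range(col * SQ, (col + 1) * SQ):
            img[y][x] = value


before, after = blank(), blank()
paint(before, 6, 4, 200)   # piece on its start square
paint(after, 4, 4, 200)    # same piece after the move
print(changed_squares(before, after, SQ))  # [(6, 4), (4, 4)]
```

A normal move changes two squares (castling changes four), and deciding which one is the origin still requires the previous board state, i.e. the square that used to hold a piece.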

So, we decided to use our laptop instead of the Raspberry Pi to get a smoother game. Now, we have a button that the user presses after making a move, and Joseph and I confirmed on our board that the entire game works well with the buttons.

Team Status Report for 04/10/2021

Joseph has finished his background subtraction algorithm to detect the movement of pieces, and Jee Woong has finished writing code for the UI and Computer Vision integration. One issue on Joseph's side was that the pieces were often not detected, so we decided to color the pieces for now and order a set of colored chess pieces. Since we have finished a simple UI, board detection, and piece movement detection, we are ready to integrate. Jee Woong has prepared the integration code, so Joseph and Jee Woong will mainly be testing it next week. Michael has been working on handling edge cases, and he will also join the integration work once the UI and CV integration is finished.

Jee Woong’s Status Report for 04/10/2021

This week, I mainly worked on integrating the Computer Vision and User Interface parts of the project. Having completed the representation of the initial board state, I worked on integrating the background subtraction with the board UI. I wrote integration code that connects board detection to the UI and piece movement detection to the UI.

Next week, Joseph and I will test whether the integration works on the Raspberry Pi that just arrived. Now that our team members have started working on integration, I believe we can finish the project on time.

Jee Woong’s Status Report for 04/03/2021

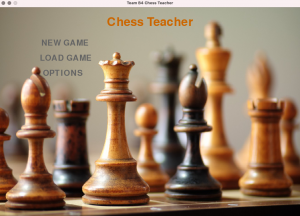

This week, I started working on the User Interface part of our project. I created the initial page and the game page of our game. The image below shows the initial page design of our UI.

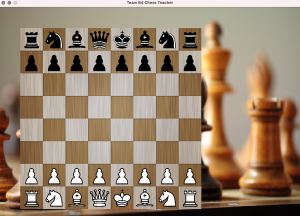

After creating the initial page of the UI, I made it possible to start a new game by clicking the “NEW GAME” button. After the button is clicked, the screen looks like the image below. Currently, the board is on the left side of the screen, and I am planning to put a timer and a turn indicator on the right-hand side.

I created a class for the overall game and a piece class for each piece on the board so that I can keep track of every piece. For now, the game page shows the initial state of the game. After our team finalizes the Computer Vision part of the project, I plan to test it with the UI I created this week. Next week, I will work on updating the board as the Computer Vision part recognizes piece movements.
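A game class holding one object per piece might look like the sketch below, including the board update that happens when the Computer Vision side reports a move. This is an illustrative structure under assumed names (`Game`, `Piece`, `apply_move`, squares in algebraic notation), not the project's actual classes; it does not check legality or handle castling, en passant, or promotion.

```python
from dataclasses import dataclass


@dataclass
class Piece:
    color: str   # "white" or "black"
    kind: str    # "K", "Q", "R", "B", "N", or "P"
    square: str  # algebraic square, e.g. "e2"


class Game:
    """Hypothetical game state: a mapping from square to Piece."""

    BACK_RANK = "RNBQKBNR"

    def __init__(self):
        self.pieces = {}
        for i, file in enumerate("abcdefgh"):
            kind = self.BACK_RANK[i]
            self._place(Piece("white", kind, f"{file}1"))
            self._place(Piece("white", "P", f"{file}2"))
            self._place(Piece("black", "P", f"{file}7"))
            self._place(Piece("black", kind, f"{file}8"))
        self.turn = "white"

    def _place(self, piece):
        self.pieces[piece.square] = piece

    def apply_move(self, src, dst):
        """Move the piece on `src` to `dst` (raises KeyError if src is empty)."""
        piece = self.pieces.pop(src)
        self.pieces.pop(dst, None)  # a capture removes the occupant
        piece.square = dst
        self.pieces[dst] = piece
        self.turn = "black" if self.turn == "white" else "white"
        return piece


game = Game()
piece = game.apply_move("e2", "e4")
print(piece, game.turn)  # Piece(color='white', kind='P', square='e4') black
```

Keeping a square-to-piece dictionary makes both drawing the board and looking up "which piece moved" a single dictionary access.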

Jee Woong’s Status Report for 03/27/2021

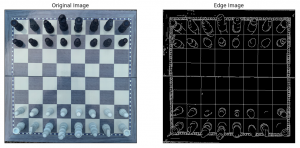

For the past two weeks, I have been working on board detection to accurately detect every corner of the board. Last week, I tried using edge detection to retrieve all the edges of the board and then find the rectangular shapes of the tiles. Below are the images of my edge-detection approach to board detection.

As you can see from the image, this approach does not find the squares (tiles) of the board; instead, it detects other parts of the board that are irrelevant to board detection. Since we decided to detect the corners of the board before the pieces are placed, I set this approach aside and tried a different one.

Previously, the function that OpenCV provides could detect 49 (7×7) corners. However, it could not detect all 81 (9×9) corners, since it only finds the inner corners where four squares meet, not the corners along the outer border of the board. Since the square tiles are evenly spaced, I calculated the average distance between adjacent corners and used that value to predict the remaining corners. The image below shows the result of the 9×9 corner detection.
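The extrapolation step can be sketched as follows: average the step between horizontally adjacent corners and between vertically adjacent corners, then add one ring of corners around the detected 7×7 grid. This is a minimal sketch assuming the detected corners have already been arranged into 7 rows of 7 `(x, y)` tuples, ordered left to right and top to bottom; `extrapolate_corners` is a hypothetical name. Averaging one step for the whole board assumes little perspective distortion.

```python
def extrapolate_corners(inner):
    """Extend a 7x7 grid of inner chessboard corners to the full 9x9 grid.

    `inner` is a list of 7 rows, each a list of 7 (x, y) tuples ordered
    left-to-right, top-to-bottom. The outer ring is predicted by stepping
    one average square width/height beyond the detected corners.
    """
    n = len(inner)
    # Steps between horizontally adjacent corners.
    h_steps = [(inner[r][c + 1][0] - inner[r][c][0],
                inner[r][c + 1][1] - inner[r][c][1])
               for r in range(n) for c in range(n - 1)]
    # Steps between vertically adjacent corners.
    v_steps = [(inner[r + 1][c][0] - inner[r][c][0],
                inner[r + 1][c][1] - inner[r][c][1])
               for r in range(n - 1) for c in range(n)]
    hx = sum(s[0] for s in h_steps) / len(h_steps)
    hy = sum(s[1] for s in h_steps) / len(h_steps)
    vx = sum(s[0] for s in v_steps) / len(v_steps)
    vy = sum(s[1] for s in v_steps) / len(v_steps)

    # Extend every detected row one average step to the left and right.
    rows = []
    for row in inner:
        left = (row[0][0] - hx, row[0][1] - hy)
        right = (row[-1][0] + hx, row[-1][1] + hy)
        rows.append([left] + list(row) + [right])
    # Add one row above the first and one below the last.
    top = [(x - vx, y - vy) for x, y in rows[0]]
    bottom = [(x + vx, y + vy) for x, y in rows[-1]]
    return [top] + rows + [bottom]


# Usage: an ideal board with 50 px squares whose inner corners start at (50, 50).
inner = [[(50 * (c + 1), 50 * (r + 1)) for c in range(7)] for r in range(7)]
full = extrapolate_corners(inner)
print(full[0][0], full[8][8])  # (0.0, 0.0) (400.0, 400.0)
```

The detected inner corners are kept as-is; only the outer ring is predicted, so detection errors are not spread across the whole grid.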

(We are using the HSV color space, but the green circles do not show clearly in HSV, so for this report I converted the image back to RGB to make the detected corners easy to see.)

In addition, I implemented a function that finds the position on the board given an x and y coordinate, so that Joseph can later use it to determine which piece has moved and where it moved to. I believe I have finished the board detection part, although there may be room for improvement in the future.
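One way to map a pixel to a board position is to test which of the 64 quadrilaterals formed by the 9×9 corners contains the point, using the sign of cross products (a point is inside a convex polygon when it lies on the same side of every edge). This is a sketch of that technique, not necessarily the author's function; `square_at` is a hypothetical name, and it returns a `(row, col)` index in image orientation, so converting to chess notation such as "e4" would depend on how the camera faces the board.

```python
def _cross(o, a, b):
    """z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def _inside_quad(p, quad):
    """True if p is inside or on a convex quadrilateral given in order."""
    signs = [_cross(quad[i], quad[(i + 1) % 4], p) for i in range(4)]
    return all(s >= 0 for s in signs) or all(s <= 0 for s in signs)


def square_at(x, y, corners):
    """Return (row, col) of the square containing pixel (x, y), or None.

    `corners` is a 9x9 grid of (x, y) board corners, so it also works when
    perspective makes the squares non-rectangular.
    """
    for row in range(8):
        for col in range(8):
            quad = [corners[row][col], corners[row][col + 1],
                    corners[row + 1][col + 1], corners[row + 1][col]]
            if _inside_quad((x, y), quad):
                return row, col
    return None


corners = [[(50 * c, 50 * r) for c in range(9)] for r in range(9)]
print(square_at(125, 260, corners))  # (5, 2)
print(square_at(500, 500, corners))  # None
```

Points exactly on a shared edge are assigned to the first matching square in scan order.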

Next week, I will start working on the User Interface part of our project so that once Joseph finishes his computer vision part, we can integrate the User Interface and Computer Vision to test whether they are working correctly.

Team Status Report for 03/13/2021

This week, we all made progress on our respective parts of the project. Michael had to spend some time on hardware integration because of our design change; he reviewed some of the documentation and ran simulations on his own. Joseph and Jee Woong kept working on the Computer Vision part of the project: Joseph started detecting moves using a background subtraction algorithm, and Jee Woong made progress on detecting board corners and coordinates. Joseph is returning to Pittsburgh soon, which will make it easier for Jee Woong to collaborate with him and make more progress on the Computer Vision part of the project.

We read the technical comments in the peer feedback forms, and we will try to address more of the technical content in our design documents, which are due this Wednesday. In our weekly Zoom meeting, we plan to complete the design documents and share our progress.

Jee Woong’s Status Report for 03/13/2021

This week, I worked more on board detection. Previously, I had been working on images converted to grayscale, and this week I tried converting a board image to black and white instead. Because of the lighting, the corners of the board could not be detected after converting the image to black and white, so Joseph and I decided to keep working in grayscale. The image below shows a board image converted to black and white; as you can see, part of the board gets cut off.
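A toy example shows why a single global threshold can cut off part of the board under uneven lighting while grayscale keeps the squares distinguishable. The pixel values below are made up for illustration (one row of eight squares with light falling off across the board) and are not measurements from our images; `binarize` is a hypothetical helper applying one fixed threshold.

```python
def binarize(gray, threshold=127):
    """Global threshold: every pixel becomes 255 (white) or 0 (black)."""
    return [255 if v >= threshold else 0 for v in gray]


# Synthetic row of 8 squares alternating light (200) and dark (60),
# with illumination falling from 100% to 30% across the board.
LIGHT, DARK = 200, 60
row = []
for i in range(8):
    illumination_pct = 100 - 10 * i
    base = LIGHT if i % 2 == 0 else DARK
    row.append(base * illumination_pct // 100)

print(row)            # [200, 54, 160, 42, 120, 30, 80, 18]
print(binarize(row))  # [255, 0, 255, 0, 0, 0, 0, 0]

# In grayscale, every light square is still brighter than the dark square
# next to it, so the square boundaries remain detectable.
print(all(row[i] > row[i + 1] for i in range(0, 8, 2)))  # True
```

After thresholding, the two dimly lit light squares become black and merge with their dark neighbours, which matches the "cut off" region seen in the black-and-white image.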

As I mentioned in my previous status report, I had trouble detecting the board when pieces are placed on it. So, I tried detecting the edges of the board and finding the squares so that I can still locate the corners when there are pieces on the board. Edge detection also gives the coordinates of each edge, so I am hoping to extract the coordinates of the edges of the squares. I am still working on this new approach to board detection, and I hope it works out well.
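The idea that edge detection yields edge coordinates can be illustrated with the Sobel operator, used here as a simplified stand-in because the report does not say which detector was used (OpenCV's `cv2.Canny` adds non-maximum suppression and hysteresis thresholding on top of a similar gradient step). `edge_pixels` and its threshold are hypothetical; the sketch returns the `(x, y)` of every pixel with a strong intensity gradient.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def edge_pixels(img, threshold=100.0):
    """Return (x, y) coordinates where the Sobel gradient magnitude exceeds
    `threshold`. Border pixels are skipped for simplicity."""
    h, w = len(img), len(img[0])
    edges = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            # Convolve the 3x3 neighbourhood with both Sobel kernels.
            for dy in range(3):
                for dx in range(3):
                    v = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * v
                    gy += SOBEL_Y[dy][dx] * v
            if math.hypot(gx, gy) > threshold:
                edges.append((x, y))
    return edges


# Usage: an 8x8 image that is dark on the left and bright on the right,
# like the boundary between two squares.
img = [[0] * 4 + [255] * 4 for _ in range(8)]
edges = edge_pixels(img)
print(sorted({x for x, _ in edges}), len(edges))  # [3, 4] 12
```

Grouping these coordinates into straight lines (for example with a Hough transform) would be the next step toward the square outlines.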

I also set up the environment to take pictures of the board. Previously, I took pictures with my phone camera, but now I can take them with the webcam we bought, and board detection gave the same result on the new webcam images. Next week is the deadline for board detection, so I will try to finish detecting the board with pieces on it, using my approach of finding squares from the edges.