The most significant risk is that we will be unable to debug a permission error that prevents our system from running on boot. We configured a systemd service so that our system starts when the Jetson Xavier powers up. However, GStreamer currently fails with the error “Home directory not accessible: permission denied.” Fixes that worked for other users did not work for us. We are managing this risk by starting our system manually from a terminal instead of at boot. If we cannot fix the permission error before the final demo, we will continue to start it this way.
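The system starts correctly from a terminal but not from the boot-time service, so one plausible but unconfirmed cause is a difference in the environment each process runs with, such as a different user or an unset or unreadable `HOME`. The sketch below is a hypothetical diagnostic under that assumption. `check_home_access` is an illustrative helper, not part of our system. It could be run once from the terminal and once from the service so the two reports can be compared.

```python
import os
from typing import Optional


def check_home_access(home: Optional[str] = None) -> dict:
    """Report whether the home directory this process sees is usable.

    Hypothetical diagnostic helper: run it from a terminal and from the
    boot-time service, then compare the two reports.
    """
    if home is None:
        # HOME may be unset or different when launched by a service manager.
        home = os.environ.get("HOME")
    report = {
        "uid": os.geteuid() if hasattr(os, "geteuid") else None,
        "home": home,
        "exists": False,
        "readable": False,
        "writable": False,
    }
    if home and os.path.isdir(home):
        report["exists"] = True
        # Listing a directory requires both read and execute permission.
        report["readable"] = os.access(home, os.R_OK | os.X_OK)
        report["writable"] = os.access(home, os.W_OK)
    return report


if __name__ == "__main__":
    for key, value in check_home_access().items():
        print(f"{key}: {value}")
```

If the two reports differ in `uid` or `home`, the service unit's `User=` and `Environment=` directives would be a reasonable place to look next.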

We made several changes to our software specifications since last week. First, we changed the library we use for audio. While testing our system on the Jetson, we noticed that no audio played when playback was triggered from a separate thread. After some debugging, we traced this to the playsound library, so we switched from playsound to pydub. Second, we removed the down label from the head pose model. We improved our head pose calculations, and the eye classifier already triggers the alert when a head points downward, because the eyes of someone looking down are classified as closed.
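The two changes above can be sketched together as follows. The sketch assumes that the remaining head pose labels are `forward`, `left` and `right`, and that the alert fires when either the eyes are closed or the head is turned away. These label names and the `should_alert` and `play_alert_async` helpers are hypothetical, not our actual code. Playback runs on a background thread so the video loop is not blocked. On the Jetson, the playback callback could wrap pydub's `pydub.playback.play`, but here it is passed in as a plain function so the sketch runs without an audio device.

```python
import threading
from typing import Callable

# Hypothetical head pose labels; "down" is intentionally absent because a
# downward head is already caught by the eye classifier (eyes read as closed).
HEAD_POSE_LABELS = {"forward", "left", "right"}
TURNED_AWAY_POSES = {"left", "right"}


def should_alert(eyes_closed: bool, head_pose: str) -> bool:
    """Return True if either classifier indicates the user is not attentive."""
    if head_pose not in HEAD_POSE_LABELS:
        raise ValueError(f"unknown head pose label: {head_pose!r}")
    # Looking down makes the eyes appear closed, so the eye check also covers
    # the case the removed "down" label used to handle.
    return eyes_closed or head_pose in TURNED_AWAY_POSES


def play_alert_async(play_fn: Callable[[], None]) -> threading.Thread:
    """Run a blocking playback call on a daemon thread and return the thread.

    Assumed usage on the device: play_fn = lambda: play(segment), where
    play comes from pydub.playback and segment is a loaded AudioSegment.
    """
    thread = threading.Thread(target=play_fn, daemon=True)
    thread.start()
    return thread


if __name__ == "__main__":
    if should_alert(eyes_closed=False, head_pose="left"):
        play_alert_async(lambda: print("alert")).join()
```

Passing the playback function in as an argument keeps the alert decision independent of the audio library, which made swapping playsound for pydub a one-line change in this sketch.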

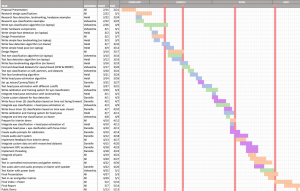

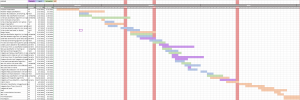

Below is the updated schedule. The deadlines for the final video and poster were moved up for everyone in the class.