One significant risk to our success is model training: we may have underestimated how long it will take. We foresee possible issues from varying conditions, such as changes in daylight, different camera positions caused by differing dashboard heights, and eye aspect ratios that differ from person to person. During our team meetings this week, we mitigated this risk by allotting more time to training in our updated schedule, which is discussed below.
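
To make the eye aspect ratio concern concrete, here is a minimal sketch of the commonly used EAR formula, which divides the sum of two vertical eyelid distances by twice the horizontal eye width. It assumes six (x, y) landmarks per eye ordered from the outer corner around the eye; the function name and the sample coordinates are hypothetical and chosen only for illustration, so this is not necessarily how our repository computes it.

```python
import math

def eye_aspect_ratio(landmarks):
    """Return the eye aspect ratio (EAR) for six (x, y) eye landmarks.

    Assumed landmark order: p1 and p4 are the horizontal eye corners,
    p2 and p3 lie on the upper lid, p5 and p6 lie on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = landmarks
    # Sum of the two vertical lid-to-lid distances
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    # Horizontal corner-to-corner distance
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

# Hypothetical landmark coordinates (illustrative only, not measured data)
open_eye = [(0, 0), (2, 2), (4, 2), (6, 0), (4, -2), (2, -2)]
closed_eye = [(0, 0), (2, 0.5), (4, 0.5), (6, 0), (4, -0.5), (2, -0.5)]

print(eye_aspect_ratio(open_eye))
print(eye_aspect_ratio(closed_eye))
```

Because the ratio depends on each person's eye shape, a single fixed threshold for "eyes closed" may not suit every driver, which is one reason we expect training and tuning to take extra time.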

After feedback from our Monday meeting with Professor Savvides, we decided to change our driver alert system. Originally, we planned to use a Bluetooth-enabled vibration device that would alert the driver if they were distracted or drowsy. The main components for this device were a Raspberry Pi and a vibration motor. However, after talking with Professor Savvides and our TA, we concluded that this was not feasible within our time frame. We therefore eliminated the vibration device and replaced it with a speaker attached to our Jetson Xavier NX. This significantly changed our hardware block diagram, requirements, system specifications, and schedule. The shift to an audio alert system also reduced our costs.

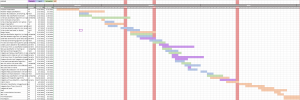

We have attached the updated schedule below, which accounts for the recent changes to our design. After removing the tasks related to the vibration device, we consolidated the remaining tasks so that each team member still completes an equal amount of work. In the updated plan, we added more time for training, integration, and testing against our metrics.

We are slightly ahead of schedule, since we already have simple working examples of both face detection and eye classification. They can be found in our GitHub repository, https://github.com/vaheeshta/focused.