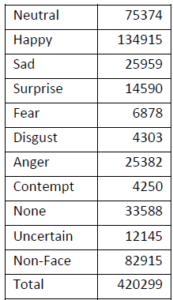

I’ve finished setting up Python/OpenCV on my local machine and polished our block diagrams. I’ve also acquired the data we need from the AffectNet and Cohn-Kanade databases. AffectNet provides around 500,000 faces (~120 GB of data) labeled with 11 categories: eight facial expressions (Neutral, Happy, Sad, Surprise, Fear, Disgust, Anger, Contempt) plus three non-expression labels (None, Uncertain, Non-Face).
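Because only eight of AffectNet's 11 categories are actual facial expressions, the annotations will probably need filtering before training. Below is a minimal sketch of that step. It assumes AffectNet's integer expression codes run from 0 to 10 in the order shown in the comments (check this against the dataset's own documentation), and the `(image_path, code)` rows, `filter_emotion_samples`, and `class_counts` are hypothetical names used only for illustration.

```python
from collections import Counter

# Assumed AffectNet expression codes (0-10); verify against the dataset's README.
AFFECTNET_LABELS = {
    0: "Neutral",
    1: "Happy",
    2: "Sad",
    3: "Surprise",
    4: "Fear",
    5: "Disgust",
    6: "Anger",
    7: "Contempt",
    8: "None",
    9: "Uncertain",
    10: "Non-Face",
}

# Codes 8-10 are not facial expressions, so they are dropped before training.
NON_EXPRESSION_CODES = {8, 9, 10}


def filter_emotion_samples(samples):
    """Keep only (image_path, code) pairs whose code is one of the 8 expressions."""
    return [(path, code) for path, code in samples if code not in NON_EXPRESSION_CODES]


def class_counts(samples):
    """Count samples per expression name, useful for spotting class imbalance."""
    return Counter(AFFECTNET_LABELS[code] for _, code in samples)


if __name__ == "__main__":
    # Hypothetical annotation rows; real rows would come from AffectNet's CSV files.
    rows = [("a.jpg", 1), ("b.jpg", 1), ("c.jpg", 0), ("d.jpg", 10), ("e.jpg", 7)]
    kept = filter_emotion_samples(rows)
    print(len(kept))  # 4
    print(dict(class_counts(kept)))  # {'Happy': 2, 'Neutral': 1, 'Contempt': 1}
```

Counting per class right after filtering is a cheap way to see how imbalanced the remaining expressions are before spending AWS credits on training.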

The Cohn-Kanade database is significantly smaller and also dated, so Professor Marios advised us to stick with AffectNet for now, since it has enough data to train our network. Our next step is to purchase AWS credits and begin training our CNN to recognize facial emotions. We’re also looking into CMU’s IntraFace project, both to validate our network and as a backup option in case we can’t get our accuracy high enough.
