Progress

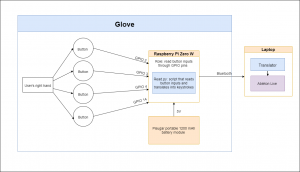

Before leaving for spring break, I recorded training samples with Chris and Mark. We collected at least 50 samples of each gesture, along with some negative look-alike examples to test the robustness of our algorithms.

Deliverables next week

Chris will give me the Xavier sometime before leaving Pittsburgh, and I want to get the Kinect running on it. We have also split up our responsibilities for the remainder of the semester, and my task is to develop the music creation gestures (stomp, clap, hit). I want algorithms for those gestures that achieve at least 70% accuracy on our training examples.
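
A minimal sketch of how the 70% target could be checked per gesture, assuming each training sample has a true label and the algorithm outputs one predicted label per sample. The function names, the "none" label for negative look-alikes, and the sample data are all hypothetical and only there for illustration.

```python
from collections import defaultdict


def per_gesture_accuracy(true_labels, predicted_labels):
    """Return {gesture: fraction of that gesture's samples predicted correctly}."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("true_labels and predicted_labels must have the same length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, guess in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == guess:
            correct[truth] += 1
    return {gesture: correct[gesture] / total[gesture] for gesture in total}


def gestures_below_target(accuracies, target=0.70):
    """List the gestures whose accuracy falls short of the target, sorted by name."""
    return sorted(g for g, acc in accuracies.items() if acc < target)


if __name__ == "__main__":
    # Made-up labels, not real results. "none" stands in for negative look-alikes.
    truth = ["stomp"] * 4 + ["clap"] * 4 + ["none"] * 2
    predicted = ["stomp", "stomp", "stomp", "clap",
                 "clap", "clap", "none", "stomp",
                 "none", "none"]
    accuracies = per_gesture_accuracy(truth, predicted)
    print(accuracies)
    print("Below target:", gestures_below_target(accuracies))
```

Reporting accuracy per gesture rather than as one overall number makes it easier to see which of stomp, clap, or hit still needs work, and treating the look-alikes as their own class shows how often they are mistaken for a real gesture.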

Schedule

I am still behind schedule, since I have not yet started on the algorithms for the music creation gestures.