Accomplishments this week

1. Advanced MIDI pattern matching is done!!

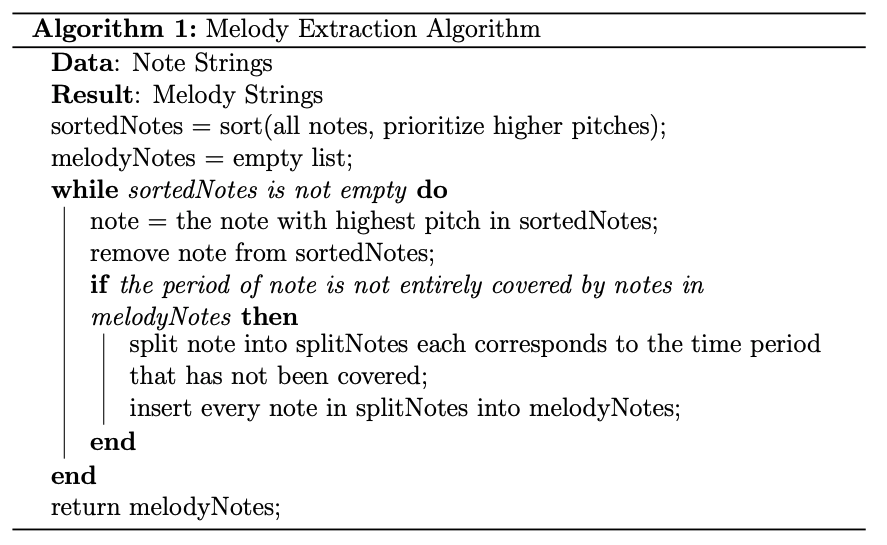

- After trying out the Levenshtein distance method mentioned last week, I found that the melody extraction step (illustrated in the image above) introduces more bias into the original MIDI slice, since our target is not to find slices with a similar melody but slices with similar note components and sequences. After careful consideration, I found that the bag-of-words approach failed because it only takes into account the number of occurrences of each note in a slice, not the order in which the notes are played. Order is actually a very important metric here, since many parts of the same piece of music can have the same note components but play them in different sequences.

- I finally decided to use what is called a sequence-vector approach: build a vector of notes in the same order in which the notes are played, then calculate the Euclidean distance between those sequence vectors to measure the similarity of two MIDI slices.

- I made test cases similar to last week’s to test the sequence-vector approach by randomly replacing (instead of deleting, as was done last week) some notes in the original slices. This approach works well even when 50% of the original notes are misplayed. Detailed metrics can be seen here: match_results_5, match_results_10, match_results_20, match_results_30, match_results_40, match_results_50. In these files, all ticks at which the slice is matched are reported, the ground truth tick is always one of the reported ticks, and more importantly, the reported ticks are far away from each other! The results are great, so I think the advanced MIDI matching task is done (without repeats), and I’ll move on to testing with real inputs (directly from the MIDI keyboard).

- The testing procedure is: (1) slice the original MIDI file, called ori_midi, into slices of different lengths, each called ori_slice; (2) randomly replace range(10, 50, 10) percent of the notes in ori_slice to produce test_slice; (3) slide a window of length len(ori_slice) over ori_midi, starting at each note, and try to match the window against test_slice; (4) return the offset of the window with the smallest Euclidean distance to test_slice.
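The matching and testing steps above can be sketched roughly as follows. This is only a minimal illustration, not the project code: it assumes each slice has already been reduced to a plain list of MIDI pitch numbers, it reports note-index offsets rather than ticks, and the helper names (`euclidean_distance`, `best_match_offset`, `replace_notes`) and the 21 to 108 pitch range are hypothetical choices made for the example.

```python
import math
import random
from collections import Counter


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length note sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match_offset(ori_notes, test_slice):
    """Return (offset, distance) of the window in ori_notes closest to test_slice."""
    window = len(test_slice)
    best_offset, best_dist = -1, math.inf
    for offset in range(len(ori_notes) - window + 1):
        dist = euclidean_distance(ori_notes[offset:offset + window], test_slice)
        if dist < best_dist:
            best_offset, best_dist = offset, dist
    return best_offset, best_dist


def replace_notes(ori_slice, percent, rng):
    """Copy ori_slice with `percent` percent of its positions given a random pitch."""
    test_slice = list(ori_slice)
    count = len(test_slice) * percent // 100
    for i in rng.sample(range(len(test_slice)), count):
        test_slice[i] = rng.randint(21, 108)  # assumed piano pitch range
    return test_slice


# Same notes in a different order: bag-of-words sees no difference,
# while the sequence-vector distance is non-zero.
a, b = [60, 64, 67], [67, 64, 60]
print(Counter(a) == Counter(b), euclidean_distance(a, b))

# Hypothetical toy "piece" and a slice taken from note offset 4.
ori_notes = [60, 62, 64, 65, 67, 69, 71, 72, 71, 69, 67, 65]
ori_slice = ori_notes[4:8]
test_slice = replace_notes(ori_slice, 25, random.Random(0))
print(best_match_offset(ori_notes, test_slice))
```

With a toy input this short, a single replaced note can already pull the best match to a neighbouring window, so the sketch says nothing about accuracy; the match_results files are the place to look for that.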

2. Computer Vision for Recognizing Flip/Repeat Points.

- Jiameng has tried out the approach here, which has a significantly lower accuracy rate than we expected. The only output we can reliably use is the number of notes recognized, which is a potentially useful metric for implementing the MIDI tracker.

- The MIDI tracker figures out the user’s progress on the sheet music and shows a marker that reruns the matching algorithm and updates its position every 30 input MIDI signals. The current approach for implementing it is that I will use the delta tick and time signature information in the original MIDI file to calculate the number of measures passed and the offset within the current measure. Jiameng’s software reports the number of notes passed before each flip/repeat point, and I’ll use that to label those points on the original MIDI file.

- I’m currently working on this task. The task is harder than I thought because lots of MIDI files are malformed, with missing SMF header information, so I’m not sure how many ticks are used to represent a beat. The Mido library is also not robust enough, so it often fails on malformed MIDI files.
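The measure calculation described above might look something like the sketch below. It rests on several assumptions that the real files may violate: the ticks-per-beat value (ticks per quarter note from the SMF header) is known, the time signature stays constant for the whole piece, and the first measure starts at tick 0. The function names and the value 480 are hypothetical and used only for illustration.

```python
from itertools import accumulate


def absolute_ticks(delta_ticks):
    """Turn per-event delta ticks into absolute tick positions."""
    return list(accumulate(delta_ticks))


def measure_position(abs_tick, ticks_per_beat, numerator=4, denominator=4):
    """Return (measures_passed, offset_in_ticks) for an absolute tick.

    ticks_per_beat is ticks per quarter note, so one measure lasts
    ticks_per_beat * 4 * numerator / denominator ticks.
    """
    ticks_per_measure = ticks_per_beat * 4 * numerator // denominator
    return divmod(abs_tick, ticks_per_measure)


# Hypothetical events in 3/4 time with 480 ticks per quarter note.
ticks = absolute_ticks([0, 480, 480, 480, 960])
for tick in ticks:
    print(tick, measure_position(tick, 480, numerator=3, denominator=4))
```

When the header is missing and ticks per beat is unknown, this calculation cannot be applied directly; the value would have to be guessed or estimated some other way first.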

Progress for schedule:

- On schedule

Deliverables I hope to accomplish next week:

- Find the position on the sheet music in units of measures.

- Get started with testing on MIDI keyboards.
