Finalizing Design + CV Research

This week, we mainly worked together to finalize the goals, requirements, and solutions that have driven, and will continue to drive, the design of our system. Some of the key outcomes from these discussions were:

- Defining metrics and verification methods for "smoothness", which is critical to the success of a video recording product. Our current plan is to draw a user-defined bounding box around the tracked object's bounding box; the camera only moves when the object's (smaller) box hits the edge of this larger box. Because the size of the larger box is user-defined, it also acts as a knob for smoothness: shrinking it makes the camera react to smaller movements of the subject, while a bigger box lets the subject move more before the motors kick in. We are still struggling to quantify smoothness itself, but we did a good amount of work to at least define testing metrics for minimal jitter when the motors should be stopped.

- We also determined a lower bound of 3 hours of battery life, assuming a 12Ah battery, with the Jetson constantly at max load (25W) and big 25W servos constantly moving. Since we have decided to go with much, MUCH smaller servos, this 3-hour lower bound is very comfortable, and we can even afford to look into much smaller batteries.

- Because we are thinking of using a slip ring and a continuous-rotation servo for panning, and a continuous-rotation servo does not report its absolute angle, I proposed adding a sensor that lets the system reset the camera to face forward on bootup.

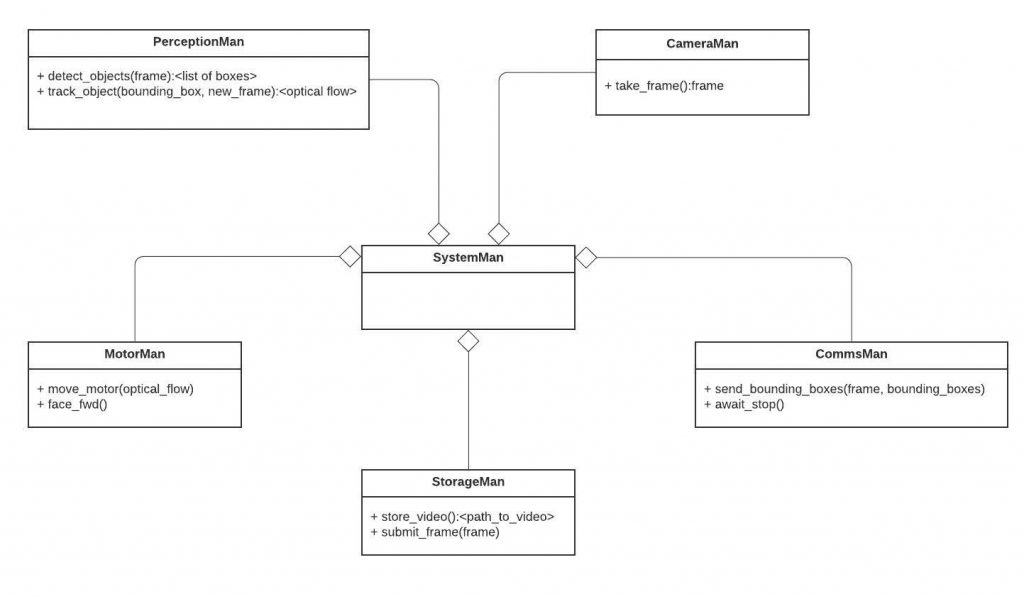
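To make the bounding-box trigger from the smoothness discussion concrete, here is a minimal sketch. It assumes the larger box is fixed in frame coordinates and inset from the frame edges by a user-chosen fraction; `Box`, `outer_from_margin`, `movement_needed`, `margin_frac`, and the -1/0/+1 direction convention are all hypothetical names for illustration, not part of our actual design yet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixel coordinates (x grows right, y grows down)."""
    left: float
    top: float
    right: float
    bottom: float


def outer_from_margin(frame_w: int, frame_h: int, margin_frac: float) -> Box:
    """Hypothetical user setting: inset the outer box by margin_frac of the frame on every side."""
    mx, my = frame_w * margin_frac, frame_h * margin_frac
    return Box(mx, my, frame_w - mx, frame_h - my)


def movement_needed(obj: Box, outer: Box) -> tuple[int, int]:
    """Return (pan, tilt) directions, each in {-1, 0, 1}.

    (0, 0) means the object's box is strictly inside the outer box,
    so the motors should stay still. If the object touches both sides
    of an axis at once, the left/top side wins.
    """
    pan = -1 if obj.left <= outer.left else (1 if obj.right >= outer.right else 0)
    tilt = -1 if obj.top <= outer.top else (1 if obj.bottom >= outer.bottom else 0)
    return pan, tilt


outer = outer_from_margin(640, 480, 0.25)  # Box(160.0, 120.0, 480.0, 360.0)
print(movement_needed(Box(200, 150, 300, 300), outer))  # subject well inside: no movement
print(movement_needed(Box(420, 150, 500, 300), outer))  # right edge hit: pan right
```

A smaller `margin_frac` gives a larger outer box (more slack before the camera moves), and a larger `margin_frac` gives a tighter box that keeps the subject closer to center at the cost of more frequent motor commands.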
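The battery figure is just usable energy divided by total power draw. The post only gives the capacity (12Ah) and the loads, so this sketch assumes a 12 V nominal pack, treats the servos as drawing 25W in total, and ignores regulator losses unless `efficiency` is lowered; `runtime_hours` is a hypothetical helper name. Under those assumptions the worst case comes out to about 2.9 hours, close to the 3-hour figure above.

```python
def runtime_hours(capacity_ah: float, voltage_v: float,
                  loads_w: list[float], efficiency: float = 1.0) -> float:
    """Estimated runtime = usable energy (Wh) / total power draw (W)."""
    energy_wh = capacity_ah * voltage_v * efficiency
    return energy_wh / sum(loads_w)


# Assumed: 12 V nominal pack; Jetson at max load (25 W) plus servos drawing 25 W in total.
worst_case = runtime_hours(12, 12, [25, 25])
print(f"{worst_case:.2f} h")  # prints "2.88 h" (144 Wh / 50 W)
```

Swapping in the draw of the smaller servos (or a smaller capacity) for the second load shows directly how much battery headroom we gain.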
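The bootup reset could work as a simple homing loop: spin the pan servo slowly until the home sensor fires, then stop. This is a sketch under stated assumptions: the sensor type is not chosen yet, and `home_pan_axis`, `set_speed`, `at_home`, and the speed/timeout values are hypothetical stand-ins for whatever servo and sensor drivers we end up using. Because the slip ring lets the pan axis spin freely, searching in one direction should pass the sensor within one revolution, so a timeout a bit longer than one revolution at search speed doubles as a failure check.

```python
import time
from typing import Callable


def home_pan_axis(set_speed: Callable[[float], None],
                  at_home: Callable[[], bool],
                  search_speed: float = 0.2,
                  timeout_s: float = 10.0,
                  poll_s: float = 0.01) -> bool:
    """Spin the continuous-rotation servo until the home sensor fires.

    Returns True if home was found before the timeout, False otherwise.
    """
    deadline = time.monotonic() + timeout_s
    set_speed(search_speed)  # hypothetical convention: positive = one fixed direction
    try:
        while time.monotonic() < deadline:
            if at_home():
                return True
            time.sleep(poll_s)
        return False
    finally:
        set_speed(0.0)  # always stop the motor, whether homing succeeded or timed out
```

Keeping the hardware behind two callables makes the routine easy to test on a laptop with fake drivers before anything is wired to the Jetson.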

- While Thor was working on a first draft of a CAD model, Ike and I worked on finishing the design requirements (see Ike's post from this week for the resulting spreadsheet), as well as a draft of the software interactions on the Jetson (attached below). One thing worth noting about this diagram is that it only includes the public methods of each class; both the perception module and the storage manager will likely keep some internal state, such as previous frames, in order to do their jobs. In addition, the overall system manager will act as the finite state machine we mentioned in last week's status updates.

- I've also been looking into using YOLOv3 alone for object detection, followed by Lucas-Kanade tracking with deep learning optimizations to handle occlusions, so we don't lose our tracked subject. Previously, I thought we could use FaceNet if we wanted to clearly differentiate between subjects, but since that is something of an edge case, I will try general object detection followed by state-of-the-art tracking.

- Finally, I've found that coming up with quantifiable metrics for computer vision is much harder than I expected. I've started reviewing research on the performance and accuracy of state-of-the-art CV methods and algorithms, but it's very hard to translate those results into how they would behave on a Jetson Nano in our specific setup. Getting there will take a good amount of both literature review and my own experimentation, but in the meantime I will use what I've found so far to drive our design requirements.

- Moving forward, I will continue to specify the software interactions in more detail and will start experimenting with some CV on my local machine. Once that's up and running, I will try to get it working on the Jetson and start making InFrame a reality for all of our fans <3
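To illustrate the split between public methods and internal state in the software diagram, here is a minimal sketch of a system manager as a finite state machine plus a perception class that privately keeps recent frames. The states (`BOOT`, `IDLE`, `TRACKING`), the event strings, and the class and method names are hypothetical placeholders, not the states from last week's FSM; only the perception side of the frame-history idea is shown.

```python
from collections import deque
from enum import Enum, auto


class State(Enum):
    BOOT = auto()      # hypothetical: homing the pan axis after power-on
    IDLE = auto()      # hypothetical: waiting for the user to start recording
    TRACKING = auto()  # hypothetical: following the subject and recording


class Perception:
    """Public API is push()/previous(); the frame history is internal state."""

    def __init__(self, history: int = 2):
        self._frames = deque(maxlen=history)  # oldest frames drop off automatically

    def push(self, frame) -> None:
        self._frames.append(frame)

    def previous(self):
        """Frame before the latest one, or None if there isn't one yet."""
        return self._frames[-2] if len(self._frames) >= 2 else None


class SystemManager:
    """Finite state machine: (state, event) -> next state; unknown pairs are ignored."""

    TRANSITIONS = {
        (State.BOOT, "homed"): State.IDLE,
        (State.IDLE, "start"): State.TRACKING,
        (State.TRACKING, "stop"): State.IDLE,
    }

    def __init__(self) -> None:
        self.state = State.BOOT

    def handle(self, event: str) -> State:
        self.state = self.TRANSITIONS.get((self.state, event), self.state)
        return self.state
```

Keeping the transitions in a single table makes it easy to check the FSM against the diagram, and ignoring unexpected events (rather than raising) keeps a stray button press from crashing the device.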
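As a first local experiment with the tracking half of the pipeline, the core Lucas-Kanade step fits in a few lines of NumPy. This sketch assumes grayscale frames and a box from a detector such as YOLOv3 (the detector itself is not shown). It estimates one translation for the whole box from brightness constancy, `Ix*dx + Iy*dy + It = 0`, solved in least squares. It is a single-window, single-iteration version that only holds for small motions; it is not OpenCV's pyramidal, feature-based `cv2.calcOpticalFlowPyrLK`, and it includes none of the deep learning occlusion handling mentioned above. `lucas_kanade_shift` and `shift_box` are hypothetical names.

```python
import numpy as np


def lucas_kanade_shift(prev: np.ndarray, curr: np.ndarray,
                       box: tuple[int, int, int, int]) -> tuple[float, float]:
    """Estimate the (dx, dy) motion of the content inside box = (x0, y0, x1, y1)."""
    prev = prev.astype(np.float64)
    curr = curr.astype(np.float64)
    iy, ix = np.gradient(prev)  # np.gradient returns d/drow (y) first, then d/dcol (x)
    it = curr - prev            # temporal derivative between the two frames
    x0, y0, x1, y1 = box
    win = (slice(y0, y1), slice(x0, x1))
    ix, iy, it = ix[win].ravel(), iy[win].ravel(), it[win].ravel()
    # Normal equations of the least-squares problem over all pixels in the window.
    a = np.array([[ix @ ix, ix @ iy],
                  [ix @ iy, iy @ iy]])
    b = -np.array([ix @ it, iy @ it])
    dx, dy = np.linalg.solve(a, b)
    return float(dx), float(dy)


def shift_box(box: tuple[int, int, int, int], dx: float, dy: float) -> tuple[int, int, int, int]:
    """Move the tracked box by the estimated motion, rounded to whole pixels."""
    x0, y0, x1, y1 = box
    return (round(x0 + dx), round(y0 + dy), round(x1 + dx), round(y1 + dy))


# Synthetic check: a smooth blob that moves by (0.5, 0.3) pixels between frames.
yy, xx = np.mgrid[0:64, 0:64]

def blob(cx: float, cy: float, sigma: float = 6.0) -> np.ndarray:
    return np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma ** 2))

dx, dy = lucas_kanade_shift(blob(32, 32), blob(32.5, 32.3), (16, 16, 48, 48))
print(round(dx, 2), round(dy, 2))  # should be close to the true shift (0.5, 0.3)
```

For real video, where a subject can move many pixels per frame, the pyramidal OpenCV version is the better starting point; this sketch is mainly useful for understanding what the tracker is solving for.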
