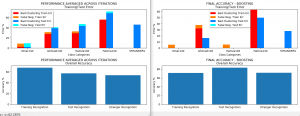

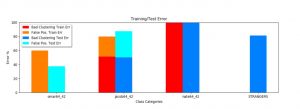

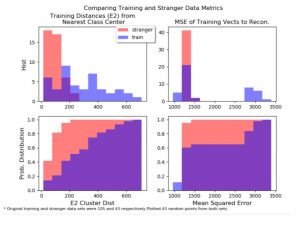

This week I benchmarked our existing MVP against a version with Neeraj's dlib face-detection and face-cropping code added. Professor Savvides had told us that including anything other than the face itself in the images can significantly hurt the performance of Fisherfaces, so I expected a significant boost once the cropping code was in place. The automated tests, however, showed little improvement. This is not a major concern: the automated tests already perform well (~80%), since they only test recognition across changes in facial expression.
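As a rough illustration of what the cropping step does, here is a minimal sketch, not Neeraj's actual code. It assumes a face bounding box has already been found by a detector (for example, dlib's `get_frontal_face_detector()`, whose rectangles expose `left()`, `top()`, `right()` and `bottom()`). It then cuts out only the face and resizes it to a fixed square, so every Fisherfaces image has the same dimensions and contains as little background as possible. The function name `crop_face`, the nearest-neighbour resize, and the default 64-pixel size are assumptions chosen for illustration.

```python
import numpy as np


def crop_face(image: np.ndarray, left: int, top: int, right: int, bottom: int,
              size: int = 64) -> np.ndarray:
    """Hypothetical helper: crop a face box from an image and resize it to size x size.

    The box is assumed to come from a face detector. It is clamped to the image
    bounds because detectors can return boxes that extend past the frame edge.
    """
    h, w = image.shape[:2]
    # Clamp the box so slicing never goes out of range.
    left, top = max(0, left), max(0, top)
    right, bottom = min(w, right), min(h, bottom)
    if right <= left or bottom <= top:
        raise ValueError("bounding box does not overlap the image")
    face = image[top:bottom, left:right]
    # Nearest-neighbour resize: map each output row/column back to a source pixel.
    rows = np.arange(size) * face.shape[0] // size
    cols = np.arange(size) * face.shape[1] // size
    return face[rows[:, None], cols[None, :]]


# Usage: a synthetic 640x480 grayscale frame with a bright "face" region.
frame = np.zeros((480, 640), dtype=np.uint8)
frame[100:300, 200:400] = 255
face = crop_face(frame, 200, 100, 400, 300, size=64)
print(face.shape)  # (64, 64)
```

In practice a library resize such as OpenCV's `cv2.resize` would usually replace the hand-written nearest-neighbour step; it is spelled out here only so the sketch depends on NumPy alone.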

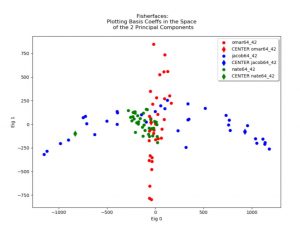

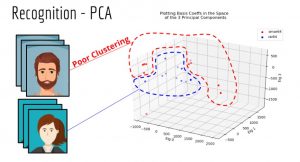

Our live-testing MVP script, however, did show a modest improvement in distinguishing between me, Neeraj, and Kevan. We suspect one reason it did not improve more is that we used a 64×64 image training set for the live test. We need higher-resolution data, so I gathered a 256×256 set of images of myself, including side views of my face. My partners will be doing the same.

I also ordered a 1080p webcam, a mount, and a data cable for us to use in case our existing camera (provided by the professor) does not meet our needs.

I believe my work is ahead of schedule, thanks to progress I made over the break.

Next week, we will work on integrating Kevan's detection code into our own pipeline and on improving recognition.