This week we did a lot of fine-tuning on the project. I wrote a script that makes it easy to collect training and stranger data: the user just has to change facial expressions while the script collects and sanitizes the data. We also improved many of the parameters used in the MVP prototype script (the stranger E2 and cosine thresholds, for example). We also collected a lot more data and found that the more data we have, the better the system performs.

Group Status Report 4/27

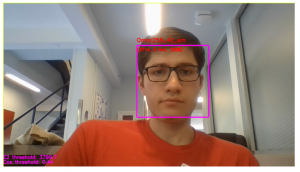

Using SVM over Kmeans led to remarkable success with recognition. We also found a better combo of PCA eigenvectors to keep. We fixed a bug in stranger detection. We implemented a live display of coefficients in the eigenspace. Facial detection is also seeing major improvements.

Weekly Status Report 4/27 – Omar

This week I pair-programmed with Neeraj to use SVM instead of Kmeans for recognition on the fisherface eigen coefficients. In other words, rather than matching a new face to the nearest centroid in the eigenspace, we partition the eigenspace into zones using SVM and label them accordingly. We also chose a better set of PCA eigenvectors (dropping only the first two). Together, these changes led to remarkable success in our recognition algorithms: we are now seeing more than 90% accuracy in recognition.
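The difference between the two approaches can be sketched on toy data. This is only an illustration, not our project code: the 2-D points stand in for eigen coefficients, the function names `nearest_centroid_predict` and `svm_predict` are made up for the example, and the linear kernel and `C=10.0` are assumptions rather than the settings we actually used. It relies on scikit-learn's `SVC`.

```python
import numpy as np
from sklearn.svm import SVC


def nearest_centroid_predict(X_train, y_train, X_query):
    """Label each query with the class whose mean (centroid) is closest."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    X_query = np.asarray(X_query, dtype=float)
    labels = np.unique(y_train)
    centroids = np.stack([X_train[y_train == lab].mean(axis=0) for lab in labels])
    # Euclidean distance from every query to every centroid: (n_queries, n_classes)
    dists = np.linalg.norm(X_query[:, None, :] - centroids[None, :, :], axis=2)
    return labels[dists.argmin(axis=1)]


def svm_predict(X_train, y_train, X_query, C=10.0):
    """Partition the space with a linear SVM and label queries by zone."""
    clf = SVC(kernel="linear", C=C)  # kernel and C are assumed values
    clf.fit(X_train, y_train)
    return clf.predict(X_query)


# Toy "eigenspace": class A is stretched along the x axis, class B is compact.
X_a = np.array([[float(x), 0.0] for x in range(-8, 9, 2)])
X_b = np.array([[7.0, 3.0], [8.0, 3.0], [9.0, 3.0], [8.0, 4.0], [8.0, 2.0]])
X_train = np.vstack([X_a, X_b])
y_train = np.array(["A"] * len(X_a) + ["B"] * len(X_b))

# A point near the far end of class A, but closer to B's centroid than to A's.
query = np.array([[7.0, 0.3]])
print("centroid:", nearest_centroid_predict(X_train, y_train, query))
print("svm:     ", svm_predict(X_train, y_train, query))
```

The toy data is arranged so that a class with a wide spread has a centroid far from some of its own members, which is the kind of case where a learned boundary can help more than centroid matching.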

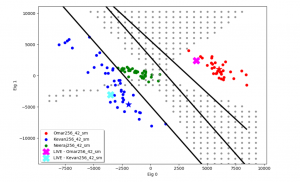

Part of our success above was thanks to a tool that helps us visualize where faces land in the eigenspace. I programmed it to display the live location of a face in the eigenspace alongside the existing camera frame visualizer. The accompanying image shows that tool (X marks the spot of the live face representation).

I found some bugs with the cosine and E2 stranger detection metrics and fixed them.

Group Status 4/20

Neeraj finished some early testing for our new fisherfaces implementation and we got very good results with that. Neeraj and Kevan are now working on combining that with our existing codebase.

Weekly Status Report 4/20 – Omar

This week Neeraj and I pair-programmed to re-code our PCA. We finished that, then compared the old PCA and the new PCA in terms of the quality of the reconstructions as we varied the eigenvectors that were used. We then interfaced our existing LDA code with the new PCA in order to generate a new set of fisherfaces. Neeraj finished testing those fisherfaces and we got very good results. Neeraj and Kevan are now working on combining that with our existing codebase.
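The reconstruction comparison can be sketched roughly as follows. This is a minimal NumPy illustration, not our actual PCA code: it assumes each face is a flattened row vector, computes the eigenvectors through an SVD of the centered data, and uses made-up helper names (`fit_pca`, `project`, `reconstruct`, `reconstruction_error`).

```python
import numpy as np


def fit_pca(X):
    """Return the mean face and eigenvectors (as rows, highest variance first)."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions of the centered data.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt


def project(X, mean, components):
    """Coefficients of each sample in the chosen eigenvectors."""
    return (np.asarray(X, dtype=float) - mean) @ components.T


def reconstruct(coeffs, mean, components):
    """Map coefficients back to pixel space."""
    return coeffs @ components + mean


def reconstruction_error(X, mean, components):
    """Mean squared error between the samples and their reconstructions."""
    X = np.asarray(X, dtype=float)
    X_hat = reconstruct(project(X, mean, components), mean, components)
    return float(np.mean((X - X_hat) ** 2))


# Random stand-in data: 20 "images" of 64 pixels each (not real faces).
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 64))
mean, Vt = fit_pca(X)
for k in (1, 5, 10, 20):
    print(k, reconstruction_error(X, mean, Vt[:k]))
```

Varying the slice of `Vt` is how different eigenvector selections would be compared in this sketch; for example, `Vt[2:10]` skips the first two eigenvectors and keeps the next eight.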

We are on-schedule.

Group Status Report – 3/13

We have fused facial detection. We tested with a 256×256 dataset. Neeraj is working on recreating the PCA and LDA code to verify that it works. We will begin working on mouth movement detection once we reach the desired accuracy for all the other components. The main issue at the moment is getting the accuracy above 70% for the facial recognition component.

Weekly Status Report 3/13 – Omar

This week we got our camera (I set the camera up to work with our code) and we performed some live tests to see how we would fare with better quality data. We hypothesized that a 256×256 dataset would perform better, and we tested on Neeraj, Kevan, and me. Unfortunately, while the testing performance was decent, the live webcam performance was still not acceptable.

Currently, Neeraj is recreating some of the PCA and LDA to verify that those code portions are correct.

I am on schedule.

Group Status Report 4/6

Facial detection is now able to run larger training sets on AWS and has been fused with the MVP script we have running.

We have implemented cosine distance for stranger detection. We are also planning to reject any side shots of people in training, testing, and live testing. We believe side shots are an unnecessarily difficult hurdle for us, as PCA is not well suited to them. There should be more than enough frontal shots for us to track participation and attendance. The task now is just to use facial landmarks from dlib (the ratio of face width to inter-eye width) to detect a side shot.
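A rough sketch of the planned side-shot check is below. It assumes the landmarks arrive as a 68×2 array in the layout of dlib's standard 68-point model (jaw outline at indices 0 to 16, eyes at 36 to 41 and 42 to 47). The function names and the accepted ratio band (`min_ratio`, `max_ratio`) are placeholders for illustration; real cutoffs would have to be tuned on our own data.

```python
import numpy as np

# Indices in the 68-point landmark layout (assumed input format).
JAW_START, JAW_END = 0, 16
EYE_ONE = slice(36, 42)
EYE_TWO = slice(42, 48)


def face_to_eye_ratio(landmarks):
    """Ratio of face width (jaw end to jaw end) to the distance between eye centers."""
    pts = np.asarray(landmarks, dtype=float)
    face_width = np.linalg.norm(pts[JAW_END] - pts[JAW_START])
    eye_dist = np.linalg.norm(pts[EYE_TWO].mean(axis=0) - pts[EYE_ONE].mean(axis=0))
    return float(face_width / eye_dist)


def is_side_shot(landmarks, min_ratio=1.8, max_ratio=2.8):
    """Flag a face whose ratio falls outside the band (placeholder thresholds)."""
    ratio = face_to_eye_ratio(landmarks)
    return not (min_ratio <= ratio <= max_ratio)


def synthetic_landmarks(eye_one_x, eye_two_x):
    """Build fake landmarks for a face 220 px wide (for demonstration only)."""
    pts = np.zeros((68, 2))
    pts[JAW_END] = (220.0, 0.0)
    pts[EYE_ONE] = (eye_one_x, -50.0)
    pts[EYE_TWO] = (eye_two_x, -50.0)
    return pts


frontal = synthetic_landmarks(60.0, 160.0)  # eyes 100 px apart
turned = synthetic_landmarks(60.0, 100.0)   # eyes appear only 40 px apart
print(is_side_shot(frontal), is_side_shot(turned))
```

In practice the landmark array would come from dlib's shape predictor rather than from `synthetic_landmarks`, which exists only to make the sketch self-contained.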

Weekly Status Report 4/06 – Omar

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I implemented cosine distance as a metric for deciding whether someone is a stranger. I compute the cosine distance from every training point to its nearest centroid and pick a percentile of those distances as a threshold; any new image whose cosine distance to its nearest centroid exceeds that threshold is considered a stranger. I ran tests to compare how effective this was at detecting strangers versus Euclidean distance. I also compared how much each metric suffers from false negatives, where a sample is wrongly labeled a stranger (i.e. not one of the students) because its distance is too high, even though the nearest centroid (i.e. our prediction) is actually the correct individual. These false negatives eat away at our accuracy because of poor stranger detection.
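The thresholding idea can be sketched as follows. This is a simplified illustration rather than the MVP script itself: the function names, the toy 2-D coefficients, and the 95th percentile are all assumptions, and the vectors are assumed to be non-zero so the cosine is defined.

```python
import numpy as np


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return 1.0 - float(a @ b) / float(np.linalg.norm(a) * np.linalg.norm(b))


def fit_stranger_threshold(train_coeffs, train_labels, percentile=95.0):
    """Build per-person centroids and a distance cutoff from the training set."""
    train_coeffs = np.asarray(train_coeffs, dtype=float)
    train_labels = np.asarray(train_labels)
    centroids = {
        lab: train_coeffs[train_labels == lab].mean(axis=0)
        for lab in sorted(set(train_labels.tolist()))
    }
    # Distance from each training point to its nearest centroid.
    dists = [
        min(cosine_distance(x, c) for c in centroids.values())
        for x in train_coeffs
    ]
    return centroids, float(np.percentile(dists, percentile))


def classify(x, centroids, threshold):
    """Return (label, distance); label is 'stranger' when the distance is too large."""
    label, dist = min(
        ((lab, cosine_distance(x, c)) for lab, c in centroids.items()),
        key=lambda pair: pair[1],
    )
    return ("stranger" if dist > threshold else label), dist


# Toy coefficients for two enrolled people (not real data).
coeffs = [[1.0, 0.1], [1.0, -0.1], [0.9, 0.0], [0.1, 1.0], [-0.1, 1.0], [0.0, 0.9]]
labels = ["a", "a", "a", "b", "b", "b"]
centroids, threshold = fit_stranger_threshold(coeffs, labels)
print(classify([1.0, 0.05], centroids, threshold)[0])
print(classify([-1.0, -1.0], centroids, threshold)[0])
```

Lowering `percentile` makes the check stricter: more strangers are caught, but more genuine students get rejected as false negatives, which is the trade-off described above.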

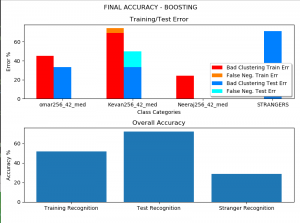

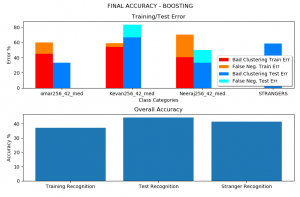

The first figure above shows the results of using E2 (Euclidean) distance. Stranger accuracy is about 30%. Any more stringent stranger detection causes too many false negatives.

The second figure above shows the results of using cosine distance. Stranger accuracy is about 40%. However, training recognition suffered from false negatives (see the orange bars in that graph).

I also reviewed the PCA and LDA code with Professor Savvides. Also, I ordered a laptop webcam, mount, and cable.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

- On schedule.

- What deliverables do you hope to complete in the next week?

- Improve stranger detection by better leveraging cosine distance.

Group Status Report 3/30

Our facial detection is nearing completion and will soon be fused into our MVP. Hopefully, this fusion will be simple as the OpenCV facial detection code we are using is fairly straightforward (provide frame, get back a list of face bounding boxes).

We are still working on improving facial recognition. We hope that better face cropping, dlib correction, and zeroing out background noise will all lead to improvements.

There are no major changes to design/schedule.