Week 1: 2/10-2/16

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

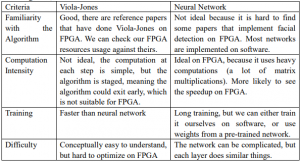

I read three papers on previous FPGA implementations of facial recognition systems. I also researched the facial detection algorithm (Viola-Jones) and gained a solid understanding of it, because detection is the first step in our system. On Friday, we received comments on our proposal from Professor Savvides and the TAs. Their main concern is that our proposed facial detection algorithm (Viola-Jones) has an early-exit property, whose outcome can only be determined at runtime. Professor Savvides suggests that we use a neural network instead, which consists of pure computation that can all be synthesized at compile time. Our team therefore met to talk about the two approaches, and I summarized them in a table, which we will discuss with Professor Savvides next week.

Time for this week:

4 hours: meet in groups in class

4 hours: read papers about facial detection

4 hours: discuss with Sheng-Hao (in charge of FPGA) about facial detection papers and debate between the Viola-Jones and neural network approach on FPGA

2. Is your progress on schedule or behind?

It is on schedule.

3. What deliverables do you hope to complete in the next week?

– Discuss with professor and TAs and finalize the facial detection design

– Have initial training code for the algorithm we determined.

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– I reread the research papers that we found on the Viola-Jones algorithm, trying to really force how the algorithm works into memory. (1.5 hours)

– I read three papers on facial detection and/or recognition implemented on an FPGA. I then had discussions with Hans on the setups behind what was done in each paper, as well as some of the hardware optimizations they performed. (5 hours)

– After reading the three papers mentioned in the bullet point above, I found FPGA development boards with at least as good specs as the ones in the papers. However, after this I looked at the boards we could rent from the inventory and realized that the KC705 and VC707 boards were so much better than what we could afford with $600. So, I instead requested to borrow one of the two for our project. (1 hour)

– We finally got our written design feedback a day before this report was due, and in it Professor Savvides suggested that we use the YOLO algorithm or neural nets instead of the Viola-Jones algorithm. Hans and I looked at the YOLO algorithm and he shared what he knew about neural nets with me. We then discussed the pros and cons of Viola-Jones and the pros and cons of neural nets. One thing that confused us was that Professor Savvides said we wouldn’t be able to fully utilize the fast-exit advantage of Viola-Jones in hardware, but Hans and I think that a pipelined version of it would be almost the same as a pipelined neural net. Fast exit or not, both pipelines would still process 1 face candidate per cycle on average. We decided to talk to Professor Savvides this week to get more clarification on his thought process. (2 hours)

– I downloaded Vivado 2016.4 (the latest version compatible with my OS, Windows 8.1) and started reacquainting myself with it. I did this by loading example projects and reading the user guide. I also took a look at the KC705 documentation (Professor Nace emailed us on Thursday saying we had been given the KC705). I made sure to download the right board files so that Vivado is compatible with the KC705. One thing I had forgotten about was that we might need a floating or node-locked license for Vivado, an issue which I’ll address in the next bullet point. (2 hours)

– The free version of Vivado works fine, but I wanted to see if CMU already has a floating license available to use. I took a look at past project papers for this course and one paper mentioned where Vivado was installed on the ECE clusters, as well as where the license files were. I’m going to ask Professor Mai for help on linking the license files to my local installation of Vivado, much like we did with Quartus and its license files in 18-341. (1.5 hours)

– Total hours: 13 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule since I was supposed to have a decent grasp on the algorithm for facial detection by this week. However, since we need to get clarification from Professor Savvides, I don’t know which algorithm to focus on yet. Everything else is on track. To get back on schedule, Hans and I will readily get to work on writing the training code for whichever algorithm we decide to choose, after we finish talking to Professor Savvides.

3. What deliverables do you hope to complete in the next week?

– Figure out how to send data over UART back and forth between the KC705 and a laptop, and have a simple project doing so.

– Code for training and validating a facial detection classifier based on whichever algorithm we decide to go with.

Andy:

1. What did you personally accomplish this week on the project?

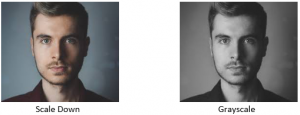

I have a working scaleDown function and a working grayscale function using the PIL library. This library function works, and we may use this for preprocessing, but I also looked into the actual implementation of the functions because we will need to scale down the images when we are performing the machine learning algorithms to scan for faces in the images.
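As a rough illustration of what such preprocessing does under the hood, here is a minimal pure-Python sketch. It is not the project's actual scaleDown or grayscale code: the function names, the list-of-rows image layout, and the box-averaging strategy are all assumptions made for the example. The luma weights are the ITU-R 601 ones that PIL documents for its "L" mode conversion.

```python
def to_grayscale(rgb_rows):
    """Convert rows of (R, G, B) tuples into rows of 0-255 luma values."""
    return [
        [(299 * r + 587 * g + 114 * b) // 1000 for (r, g, b) in row]
        for row in rgb_rows
    ]


def scale_down(gray_rows, factor):
    """Shrink a grayscale image by an integer factor using box averaging.

    Trailing rows/columns that do not fill a whole factor x factor block
    are dropped (an arbitrary choice for this sketch).
    """
    out_h = len(gray_rows) // factor
    out_w = len(gray_rows[0]) // factor
    out = []
    for oy in range(out_h):
        row = []
        for ox in range(out_w):
            block_sum = 0
            for dy in range(factor):
                for dx in range(factor):
                    block_sum += gray_rows[oy * factor + dy][ox * factor + dx]
            row.append(block_sum // (factor * factor))
        out.append(row)
    return out
```

Because it only uses integer arithmetic on small blocks, a version along these lines would also be straightforward to mirror in C or RTL later.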

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is currently on schedule. I have followed the Gantt chart that we created for our presentation and have completed the tasks for this week. However, I would like to look more deeply into the underlying implementation of the PIL functions for image preprocessing so that I can write a version that we can use for our other stages of facial recognition.

3. What deliverables do you hope to complete in the next week?

Next week, I hope to look into how we will store the images, whether in local memory or in the cloud. Some considerations for this task are whether we have enough local memory to store the images of all the users and how much latency storing the images in the cloud would introduce. I will research this topic, lay out the tradeoffs between the different options, and see which is better suited for our project.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Our biggest concern is the I/O transfer delay between the software and the FPGA. Since our goal is to speed up the facial recognition system using an FPGA, I/O delay may hurt our performance.

Our first step was to get a powerful FPGA board (KC705), which we just obtained from Professor Nace. The UART baud rate on this board can reach as much as 921600 bits/sec. To be conservative, let’s say we use a baud rate of 460800 bits/sec. Imagine we transfer a 100×100 grayscale image, with one byte per pixel. The size of our image is 100×100×1×8 = 80000 bits. The delay is approximately 80000/460800 = 0.1736 sec, which is reasonable. Note that this is just a rough estimate.
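The estimate above can be reproduced with a few lines of Python. This is only a sketch: the function name is made up for illustration, and the 10-bits-per-byte variant assumes 8N1 framing (one start bit and one stop bit per byte), which is a common UART setting but not one this report specifies.

```python
def uart_transfer_seconds(width, height, baud, bits_per_byte=8):
    """Estimate the time to send a one-byte-per-pixel image over UART."""
    payload_bytes = width * height  # one byte per grayscale pixel
    return payload_bytes * bits_per_byte / baud


# Raw payload only, as in the estimate above.
print(round(uart_transfer_seconds(100, 100, 460800), 4))

# Assumed 8N1 framing: 8 data bits plus a start and a stop bit per byte.
print(round(uart_transfer_seconds(100, 100, 460800, bits_per_byte=10), 4))
```

With framing overhead included the figure grows by about a quarter, so the conclusion that the delay is tolerable still holds for an image of this size.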

We will also measure the performance of the system with and without I/O. If I/O turns out to be the main bottleneck of our system, we will make sure we document that in our reports.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

According to the proposal comments from our professor and TAs, our facial detection algorithm (Viola-Jones) is not a suitable method for an FPGA because of its inherently sequential nature. They suggest that we consider a neural network approach, which is computationally intensive and has a lot of potential for parallelization. We have listed the pros and cons of both approaches and will speak with Professor Savvides about this issue again in the upcoming week.

This costs us some extra time researching both approaches, but it helps us reduce risk as we go deeper into the project. We will make sure we consider both ideas carefully, so that we can stick with our choice throughout the semester and avoid wasting time in the future.

3. Provide an updated schedule if changes have occurred.

No change.

Week 2: 2/17-2/23

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

Discussion: 4 hours

I met with the team to finalize our facial detection algorithm. Last week, we debated whether to use Viola-Jones or a neural network for facial detection. We have decided to use Viola-Jones because there is a lot of documentation and other resources from past papers that use Viola-Jones for facial detection on an FPGA.

Research into Cascading Classifiers: 4 hours

I have sorted out the details of how the images pass through the cascading classifiers in Viola-Jones. Specifically, I have looked into how the Haar features extract details from the face image and make it possible to determine whether the input image contains a face. I will prioritize implementing the classifier logic because this is the part that needs to be put onto the FPGA. Since training for Viola-Jones would take a long time, we have decided to use pre-trained weights for now.
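To make the Haar feature idea concrete, here is a minimal Python sketch of the integral-image trick that Viola-Jones uses to evaluate rectangle sums in constant time. It is an illustration only, not our classifier code: the function names and the single two-rectangle feature shown are assumptions chosen for brevity.

```python
def integral_image(gray):
    """Return ii where ii[y][x] is the sum of gray over rows < y and columns < x.

    The extra leading row and column of zeros avoids bounds checks later.
    """
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half of a w x h window (w assumed even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

Each feature costs a fixed number of additions regardless of rectangle size, which is part of why this stage looks attractive for hardware.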

Write Initial Code for Cascading Classifiers: 6 hours

I have written some initial code for the cascading classifiers with some minor details omitted. The code contains the skeleton of feeding all sub-windows of an image into the classifiers. I still need to add the details of using pre-trained weights to predict on the input images.
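The skeleton described above can be sketched roughly as follows. This is a simplified Python illustration rather than the actual code: the stage functions, thresholds, and window parameters are placeholders, and a real cascade would score each window from pre-trained Haar features.

```python
def sub_windows(img_w, img_h, win, step=1):
    """Yield the top-left (x, y) of every win x win sub-window in the image."""
    for y in range(0, img_h - win + 1, step):
        for x in range(0, img_w - win + 1, step):
            yield x, y


def passes_cascade(stages, thresholds, x, y):
    """Run the stages in order; reject at the first score below its threshold."""
    for score_fn, threshold in zip(stages, thresholds):
        if score_fn(x, y) < threshold:
            return False  # early exit: later stages are never evaluated
    return True


def detect(img_w, img_h, win, stages, thresholds, step=1):
    """Return the top-left corners of all sub-windows accepted by every stage."""
    return [
        (x, y)
        for x, y in sub_windows(img_w, img_h, win, step)
        if passes_cascade(stages, thresholds, x, y)
    ]
```

The `return False` inside the loop is the early-exit property discussed in Week 1: most windows are rejected after only a few stages.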

2. Is your progress on schedule or behind?

Ideally, the cascading classifier should be finished this week, but we spent some extra time choosing our algorithm for facial detection. I will finish the classifier in the first half of next week.

3. What deliverables do you hope to complete in the next week?

- Finish cascading classifiers

- Run it on pre-trained weights and detect faces correctly

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week was a little hectic so I wasn’t able to get as much done.

– I talked with Hans about which facial detection algorithm we should use, since Professor Marios didn’t show up to our weekly meeting. We ended up deciding to use Viola-Jones since it’s more familiar to us. I also gained some understanding of Viola-Jones from talking to Hans. (4 hours)

– I downloaded all the drivers I needed for the KC705 board and ran diagnostics on it. The BRAM and DDR3 had no issues so that was good. (.5 hours)

– I started a UART project in Vivado but quickly realized that it involves the AXI protocol, which I don’t really understand. I’ll have to get it down first before I can progress. (1 hour)

– I updated the website on Wednesday and changed up the design a little. (.5 hours)

– Total hours: 6 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. I was supposed to understand data transfer over UART by the end of this week, and hoped to have a project demonstrating it also. This upcoming week I’ll put in extra time so that the project demonstrating data transfer over UART is finished, to catch up to the schedule on our Gantt chart.

3. What deliverables do you hope to complete in the next week?

– Have a project demonstrating data transfer over UART

Andy:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I have been working on designing the Webapp UI for the Identity Checker. I currently have the flow for the two paths thought out. Users are able to add themselves to the database if they haven’t used the identity checker before. If they have, they can check their identity and their identity will be checked with users stored in our database. If no matches are found, I am considering allowing users to select an option to be able to add themselves to the database. However, this may be due to inaccurate identity checking on our end, so it is a design decision I will have to make after performing some user testing.

I also looked into the tradeoffs between storing our images in the cloud versus storing locally. Since there is an additional latency associated with retrieving data from the cloud, we believe that the better decision would be to store our images locally. Hopefully, our image preprocessing will be able to reduce the amount of storage required for the images so that we have enough space for multiple users.

2. Is your progress on schedule or behind?

My progress is currently on schedule. I have followed the Gantt chart that we created for our presentation and have completed the tasks for this week.

3. What deliverables do you hope to complete in the next week?

Next week, I would like to begin implementation of the Webapp, and at least get the barebones UI working. I also want to take this structural implementation and perform some user testing to make sure that the flow is logical and intuitive.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We need to figure out the data transfer mechanism (UART) between the software and the FPGA as soon as we can, because we don’t have a lot of experience with it.

We will look at past groups’ projects and see how they set it up. We will ask for help if we need it. We will also set up an example project that demonstrates we can send data correctly.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Nothing has changed since our proposal.

3. Provide an updated schedule if changes have occurred.

No change.

Week 3: 2/24-3/2

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

Parse XML file:

I have finished parsing the pre-trained weights from the OpenCV XML file. The script is written in Python, and it generates a .c file, which can be included with the cascading classifier implementation.
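The general shape of such a script is sketched below. Note the assumptions: the XML layout here is a deliberately simplified, hypothetical one (a `cascade` root with `stage` elements carrying a `threshold` attribute), not the real OpenCV cascade schema, and the function and array names are made up for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified input; the real OpenCV file is structured differently.
SAMPLE_XML = """
<cascade>
  <stage threshold="0.5"/>
  <stage threshold="-1.25"/>
</cascade>
"""


def stage_thresholds(xml_text):
    """Extract one float threshold per <stage> element, in document order."""
    root = ET.fromstring(xml_text)
    return [float(stage.get("threshold")) for stage in root.iter("stage")]


def emit_c_array(name, values):
    """Format the values as a C array definition that a .c file can contain."""
    body = ", ".join(repr(v) for v in values)
    return f"const float {name}[{len(values)}] = {{ {body} }};\n"


if __name__ == "__main__":
    print(emit_c_array("stage_thresholds", stage_thresholds(SAMPLE_XML)), end="")
```

Generating the array as source text means the weights are baked in at compile time, so the C implementation needs no XML parser of its own.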

Makefile and compilation:

I have written a Makefile to include necessary files for the cascading classifier. The repo compiles, and it is up at https://github.com/hanschen1996/18500-Facial-Recognition/. The next step is to test the module with images.

2. Is your progress on schedule or behind?

It is on schedule. The cascading classifier is finished, and I will test it with real images next week to ensure good accuracy.

3. What deliverables do you hope to complete in the next week?

- Test cascading classifiers

- As a stretch goal, convert the code to RTL code

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– I created a project with simple data transfer over UART. It turns out I didn’t need the AXI protocol for the Xilinx UART IP. Driving the RTS, CTS, RX, and TX pins for the UART device on the board using a finite state machine was good enough. I’m currently using TeraTerm to send and view data sent over UART, but I need to figure out how TeraTerm does this, so that I can write my own Python script emulating the process. (10 hours)

– Total hours: 10 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. I need to start working on integrating the UART data transfer with BRAM. I also need to figure out the simplest way to confirm that the data I write to BRAM is actually stored there.

3. What deliverables do you hope to complete in the next week?

– Have a project demonstrating BRAM usage

Andy:

1. What did you personally accomplish this week on the project?

I have implemented the UI that I designed the previous week and it is running on a local host development server. I made the front-end with pure HTML and CSS and used the Django framework (Python). I felt that the site was somewhat bland, so I also looked into some JavaScript libraries to improve the UI, mainly React and Angular. Comparing the two, I found that Angular is a complete framework while React is a JavaScript library, so it seems like Angular is a more complete solution. However, the web app is not the focus of this project, so I felt that I would try to use the option that has better documentation and more support from the community. It seemed to me like Angular had more tutorials and a stronger open source community, so I am leaning towards using Angular.

I also looked into how to access the camera to take pictures of users using HTML. It seems like the getUserMedia API is fairly commonly used to capture media. Other options were to directly use an input tag and ask for a file, in which a prompt will show up to ask the user which app to capture images from.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is currently on schedule. My task for this week was to implement the UI for the app. However, after doing that, I felt that I needed to look more into the photo-taking aspect because that is a critical component of being able to perform facial recognition, which is the core of our project.

3. What deliverables do you hope to complete in the next week?

Next week, I would like to implement my own image scale-down algorithms so that we can use this code when we are running the facial recognition algorithms on the FPGA as well. I would also like to get the web app to the point where it can take images of users and store them locally.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We received feedback from the professor that we need to make sure we understand how to use pre-trained weights in the Viola-Jones algorithm. We have finished the code and begun testing. If we encounter any issues related to accuracy, we will make sure to talk to Professor Savvides next week.

We are also addressing the UART transfer between software and FPGA. We have looked at projects that implement data transfer between software and FPGA, and will adopt some examples that work for our project.

We hope to address these two major hurdles before spring break.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Nothing has changed since our proposal.

3. Provide an updated schedule if changes have occurred.

No change.

Week 4: 3/3-3/9

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I have gathered the data for testing, and plan to see how the facial detection model performs on the data. My goal is to achieve 80% accuracy (correctly capturing a face if there is one, or denying non-face images), which is what we promised in our design review.

It was a rough week with a lot of projects and midterms due, so I haven’t gathered the testing results yet. I plan to gather these before we come back from spring break.

2. Is your progress on schedule or behind?

It is a little behind because ideally I need to get the facial detection model tested by this week. I plan to use spring break to finish testing to catch up with the schedule.

3. What deliverables do you hope to complete in the next week?

– Translate C code into HDL.

– Experiment with HLS that can translate C code into HDL code in case my code is not optimized well.

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– I met with Hans to write down the skeleton for the Viola-Jones implementation.

– I wrote a multiplier, a divider, and a signed comparator as well as testbenches for them.

– It turns out Python has a handy package called pySerial that handles all the UART communication on the laptop side, so all we need to do is spawn a thread that constantly listens on our laptop’s COM port for data from the FPGA. Data is sent as bytes, using b"" instead of "" to wrap the string text so that it’s encoded as bytes. pySerial lets us choose the UART settings (parity, number of stop bits, etc.), so we’ll just choose the ones that are easiest to implement on the FPGA side.

– Total hours: 12 hours
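The listener-thread idea from the pySerial bullet above could look roughly like this. It is a sketch under assumptions: the port name `COM3`, the baud rate, the 64-byte read size, and all function names are placeholders, and pySerial is imported lazily so the rest of the code can be exercised with any object that has a `read` method.

```python
import threading


def open_port(name="COM3", baud=115200):
    """Open a real serial port; needs the pySerial package (imported lazily)."""
    import serial  # pySerial
    return serial.Serial(
        name,
        baudrate=baud,
        parity=serial.PARITY_NONE,
        stopbits=serial.STOPBITS_ONE,
        timeout=0.1,  # so read() returns regularly and the stop flag is checked
    )


def listen(port, on_data, stop_event):
    """Poll the port until stop_event is set, passing received bytes to on_data."""
    while not stop_event.is_set():
        chunk = port.read(64)  # returns b"" when the timeout expires
        if chunk:
            on_data(chunk)


def start_listener(port, on_data):
    """Start listen() on a daemon thread; returns (thread, stop_event)."""
    stop_event = threading.Event()
    thread = threading.Thread(
        target=listen, args=(port, on_data, stop_event), daemon=True
    )
    thread.start()
    return thread, stop_event
```

Sending is then a matter of calling `port.write(b"hello")` on the opened port, using a bytes literal as described above.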

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. I stopped working on BRAM for the time being since that’s only needed for facial classification, and right now we need to focus more on getting detection working on the FPGA.

3. What deliverables do you hope to complete in the next week?

– Have all the detection code written out, and preferably have some bugs ironed out

Andy:

1. What did you personally accomplish this week on the project?

I worked on accessing the laptop camera and taking pictures using HTML / JavaScript. Initially, I struggled to put together something that could access the camera because most of the documentation I found online mentioned the HTML Media Capture API, which prompts the user to upload a file. While this wouldn’t make or break our project, it would be nicer for users to access the webcam and take their picture directly through the webapp rather than having to take a picture and then upload it as an image file. After more searching, I found that the getUserMedia API, used for web real-time communications, does allow direct access to the webcam.

After using the getUserMedia API, I found that I couldn’t access the webcam through Google Chrome, but could access it through any other browser. I initially thought that Chrome didn’t support the API, but I later found out that even though I had granted access to the webcam, my system settings for the mic and webcam were turned off.

I also reorganized our GitHub repo and separated the webapp components that I am working on from the machine learning components that Hans is working on.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is slightly behind schedule. My main goals for this week were to get the camera access integrated to the webapp and figure out how I would store the images that are taken. I was able to get the camera access working, but haven’t worked on the storage aspect yet. To catch up, I will use this slack week that we have (Spring break) to try and see how I can store the images that are taken by the user.

3. What deliverables do you hope to complete in the next week?

Next week is spring break, so it’s a slack week. However, I would like to get the webapp working to the point where users can take a picture of themselves and the image is stored locally, where we can then apply image preprocessing to minimize the amount of data sent to the FPGA.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We need to fully test our facial detection module and UART between software and FPGA before we come back from spring break. We have been working on these challenges, but it has been a rough week in terms of workload. We plan to use spring break to catch up on the work.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Nothing has changed since our proposal.

3. Provide an updated schedule if changes have occurred.

Use spring break to finish testing facial detection module and UART setup.

Week 5: Spring Break + 3/17-3/23

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

I have tested the facial detection in C, and it is able to detect faces from the yalefaces database. Below is an example of how the facial detection sketches out the face region.

More solid testing is definitely needed, because we also need to account for the non-face images. I will obtain some non-face images and pass all images to the detection module. I will gather the overall testing statistics after we finish major code development.

Sheng-Hao and I have put down the initial FPGA code for facial detection. The overall skeleton is complete, but we still need to figure out the details such as the clock cycle and optimization. We will make sure the code compiles, runs, and produces the correct result next week.

2. Is your progress on schedule or behind?

It is on schedule.

3. What deliverables do you hope to complete in the next week?

– Test facial detection FPGA implementation

– Look into facial recognition in detail and have an initial C code implementation

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week was a little hectic so I wasn’t able to get as much done.

– I met with Hans to discuss details like hardware design choices and to make sure we were on the same page for the flow in RTL.

– I played around with BRAM and looked at online documentation for it. It isn’t too hard to use. The hard part is deciding what to choose for all the configuration options they have.

– Total hours: 6 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. I still haven’t completely figured out BRAM and Andy and I need to meet to sync up the webapp with the UART code.

3. What deliverables do you hope to complete in the next week?

– Have all the detection code written out, ready or close to ready to synthesize and simulate for minor logic issues (so no syntax errors, mismatched port connections, un-compilable code, etc.)

Andy:

1. What did you personally accomplish this week on the project?

I worked on figuring out how to save the images taken into local storage. Currently, the flow of the webapp is that the user has camera access after clicking on the button to add their picture to the database or to identify themselves. Once they have camera access, a picture will be taken when the user clicks on the button to take a picture. The picture is then downloaded to the local downloads folder, where we can then perform the grayscaling and downscaling to minimize the amount of data to be sent to the FPGA.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is currently on schedule. I would like to see if there is a better way to directly pass the image to the FPGA without having to download the image to the downloads folder, but the current method of downloading the image is functional.

3. What deliverables do you hope to complete in the next week?

Sheng-Hao and I were not able to get the UART communication between the webapp and FPGA working yet, so that is the next big task we hope to tackle in the upcoming week.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We haven’t fully connected the frontend camera module with the UART module. We need to get that set up as soon as possible because it is a crucial part of our demo.

We have written the code but haven’t tested it out. We will test it out during our next meeting.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Nothing has changed since our proposal.

3. Provide an updated schedule if changes have occurred.

We will meet next Monday to set up UART and deal with any issues that come up.

The rest of the schedule is unchanged.

Week 6: 3/24-3/30

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

Sheng-Hao and I have synthesized our Verilog code in Vivado. We ran into many issues in the process, such as setting up Vivado and register sizes that were too big for Vivado to allow.

We have also tested individual Verilog modules such as downscaling and integral image calculation. We are still testing the whole facial detection pipeline on the FPGA, and we hope to have it ready before the Wednesday demo.

2. Is your progress on schedule or behind?

It is slightly behind, because we spent too much time synthesizing Verilog code in Vivado. I haven’t had a chance to look at the facial recognition implementation details, and I will take a look at that after we have fully tested facial detection on the FPGA.

3. What deliverables do you hope to complete in the next week?

– Be able to demo facial detection on FPGA.

– Look into facial recognition in detail and have an initial C code implementation

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– I met with Hans to work on the RTL and we fully implemented the following modules: top, vj_pipeline, downscaler, int_img_calculator, accum_calculator and sqrt.

– Hans and I also created Python scripts to generate the vj_weights.vh header file and the text for all 2913 instantiations of our accum_calculator module.

– We also wrote testbenches for every module that we fully implemented this week.

– We got all the submodules for our top module to the point where they’re synthesizable in around 10 minutes at most, so we’re ready to synthesize our top module overnight, so that we can check for timing and area the next day.

– At the same time we’ll start simulating to find the minor logic bugs in our code.

– I also met with Andy and got the UART python code synced with the webapp code.

– For convenience, I uploaded an RTL design to the FPGA board’s BPI flash that echoes any data it receives over UART. The board can be programmed by pressing the PROG button on the board, without needing to connect via JTAG to a laptop running Vivado.

– Total hours: 30 hours
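The generator scripts themselves are not included in this report. As a rough sketch of the idea only, a Python script can emit both the header text and the repeated instantiation text. The port names (`clk`, `window`, `results`), the `INDEX` parameter, the `VJ_WEIGHTS` prefix, and the sample weights below are hypothetical placeholders, not the project's actual interface.

```python
# Sketch only: port names, the INDEX parameter, and the weight values are
# hypothetical placeholders, not the contents of the real vj_weights.vh.

def verilog_literal(value, width=32):
    """Format an integer as a signed Verilog decimal literal."""
    sign = "-" if value < 0 else ""
    return f"{sign}{width}'sd{abs(value)}"

def make_weights_header(weights, name="VJ_WEIGHTS", width=32):
    """Return header text with one localparam per weight."""
    lines = ["// Auto-generated file, do not edit by hand."]
    for i, w in enumerate(weights):
        lines.append(
            f"localparam signed [{width - 1}:0] {name}_{i} = "
            f"{verilog_literal(w, width)};"
        )
    return "\n".join(lines) + "\n"

def make_instantiations(count, module="accum_calculator"):
    """Return the text for `count` numbered instantiations of `module`."""
    blocks = []
    for i in range(count):
        blocks.append(
            f"{module} #(.INDEX({i})) accum_{i} (\n"
            f"    .clk(clk),\n"
            f"    .window(window),\n"
            f"    .result(results[{i}])\n"
            f");"
        )
    return "\n\n".join(blocks) + "\n"

if __name__ == "__main__":
    print(make_weights_header([3, -7, 12]))
    text = make_instantiations(2913)
    print(text.count("accum_calculator #("), "instantiations generated")
```

Generating the text this way keeps the 2913 instantiations consistent with each other, which would be hard to guarantee by hand.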

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. I still haven’t completely figured out BRAM and we haven’t progressed the detection RTL to the point where we can test it on the FPGA.

3. What deliverables do you hope to complete in the next week?

– Have demoable face detection for the demo next Wednesday

– Have an RTL skeleton for the facial classification code

– Be all read up on the eigenface algorithm

– Have a working project using BRAM

Andy:

1. What did you personally accomplish this week on the project?

I worked with Hans and Sheng-Hao to get the webapp running locally on their machines so that they could test whether their facial recognition code integrates with the webapp. I also worked with Sheng-Hao to send data between the FPGA and the webapp, and we successfully transferred data.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is currently on schedule. At this point in time, I would like to clean up the UI and get it ready for demoing on Wednesday.

3. What deliverables do you hope to complete in the next week?

I want to get the facial recognition code integrated with the Webapp by Tuesday so that we can demo it as a whole system on Wednesday. I will work with Hans on Monday to see if we can get that sorted out.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We have set up the UART module to communicate between webapp and FPGA. We have tested sending simple data, and it works.

We have tested most of the submodules on the FPGA, and are still testing the entire facial detection module on the FPGA. We hope to finish this by the Wednesday demo.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Nothing has changed since our proposal.

3. Provide an updated schedule if changes have occurred.

We will finish testing facial detection on the FPGA before the Wednesday demo.

Week 7: 3/31-4/6

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Successfully demoed facial detection

We have successfully demoed facial detection on our webapp interface. Users are able to take a picture, and our facial detection can detect all faces in the image. After getting feedback from the professor and TAs last week, I have added Non-Maximum Suppression (NMS) after our facial detection module finishes, which helps us detect multiple faces and find the best bounding box among all face candidates.
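The report does not spell out which NMS variant was added. A common greedy version, sketched here under the assumption that each face candidate carries a bounding box `(x, y, w, h)` and a confidence score, keeps the highest-scoring box and drops any candidate that overlaps an already kept box too much. The score field and the 0.3 overlap threshold are illustrative assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(candidates, iou_threshold=0.3):
    """Greedy NMS over a list of ((x, y, w, h), score) candidates."""
    ordered = sorted(candidates, key=lambda c: c[1], reverse=True)
    kept = []
    for box, score in ordered:
        # Keep a box only if it does not overlap any stronger kept box.
        if all(iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept

if __name__ == "__main__":
    faces = [((10, 10, 20, 20), 0.9),
             ((12, 12, 20, 20), 0.8),    # overlaps the first candidate
             ((100, 100, 20, 20), 0.7)]  # a separate face
    for box, score in non_max_suppression(faces):
        print(box, score)
```

Because non-overlapping candidates survive, this handles several faces in one image while collapsing duplicate detections of the same face into one box.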

– Improve FPGA facial detection and try to synthesize the whole project

This week, Sheng-Hao and I worked a lot on the FPGA implementation of facial detection. Since we wrote the implementation from scratch in Verilog, we have come across a lot of synthesis issues, including big register sizes and too many pipeline stages in our design. We have cut down our pipeline stages, but we still have trouble with big register sizes in our Verilog code, so synthesis takes a long time. We are currently working on moving the big arrays into FPGA BRAM to see if this helps with synthesis.

– Implemented most of facial recognition module in C

I have gathered training data for the Eigenface algorithm by cropping the faces from the Yale Faces database. I ran my facial detection code on all faces in the database and output each face as a 20×20 image. The only remaining piece is computing the eigenvalues and eigenvectors in C.
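The report does not say which eigen-solver was eventually written. One simple option that ports easily to C is power iteration with deflation, sketched here in Python for a small symmetric positive semidefinite matrix such as a covariance matrix. The function names, starting vector, and iteration count are illustrative assumptions.

```python
import math

def mat_vec(m, v):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]

def power_iteration(m, iterations=500):
    """Return (eigenvalue, unit eigenvector) for the dominant eigenpair."""
    n = len(m)
    # Uneven start vector; it must not be orthogonal to the wanted eigenvector.
    v = [float(i + 1) for i in range(n)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iterations):
        w = mat_vec(m, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    mv = mat_vec(m, v)
    eigenvalue = sum(v[i] * mv[i] for i in range(n))  # Rayleigh quotient
    return eigenvalue, v

def top_eigenpairs(m, k):
    """Find the k largest eigenpairs by deflating after each one."""
    m = [row[:] for row in m]
    pairs = []
    for _ in range(k):
        lam, v = power_iteration(m)
        pairs.append((lam, v))
        # Deflation: subtract lam * v * v^T so the next pass finds the next pair.
        for i in range(len(m)):
            for j in range(len(m)):
                m[i][j] -= lam * v[i] * v[j]
    return pairs

if __name__ == "__main__":
    for lam, vec in top_eigenpairs([[2.0, 1.0], [1.0, 2.0]], 2):
        print(round(lam, 6), [round(x, 6) for x in vec])
```

Eigenfaces only needs the few largest eigenpairs, which is why an iterative method like this can be enough in place of a full decomposition.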

2. Is your progress on schedule or behind?

We are a little behind on the FPGA implementation for facial detection, because we are having trouble with synthesis. We will try to get it resolved by Wednesday.

3. What deliverables do you hope to complete in the next week?

– Finish facial recognition implementation in C, and start testing

– Resolve the issue of moving large arrays in our Verilog code to FPGA BRAM. Start looking at HLS if we still cannot get it resolved

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Last week we said that our submodules synthesized quickly, which was only partly true. They were compiled in 10 minutes, not synthesized in 10 minutes. The actual instantiation and optimization parts of synthesis happen after every module is compiled, and take a lot longer.

– The compilation of the top module itself also didn’t go as planned. We had it running overnight, for around 12 hours, and it still didn’t finish. We did some research and math and figured out that our 160 by 120 array of 32 bit logics for storing the image from the laptop uses over 600,000 registers, which is far more than our FPGA’s ~400,000 available registers. This could be part of the reason our top module wasn’t compiling. It would definitely also cause huge issues for the instantiation and optimization parts of synthesis. Additionally, in vj_pipeline, we use 2913 scanning windows (25 by 25 arrays of 32 bit logics), each of which uses 20,000 registers, for a total of over 58,000,000 registers. This would also cause huge issues for the instantiation and optimization parts of synthesis. In conclusion, we realized that we had to optimize away almost all of the scanning windows and store the image from the laptop at most once.

– I wrote uart_rcvr and uart_tcvr modules to add UART capability to our top module.

– Hans and I worked to shorten our pipeline to five stages, using only 5 scanning windows in parallel, which results in only 100,000 registers being used. However, we still have 2913 instantiations of accum_calculator, which may use a lot of LUTs.

– I figured out all our issues with block RAM and ported all the localparam arrays into block RAM. I’m still working out how we might store the image from the laptop in block RAM without wasting many clock cycles reading from it.

– Total hours: 30 hours
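The register counts quoted in the items above can be reproduced with a few lines of arithmetic. The sketch below uses only figures from this report (32 bits per pixel, a 160 by 120 image, 25 by 25 scanning windows, 2913 windows, and roughly 400,000 available registers); the variable names are ours.

```python
BITS_PER_PIXEL = 32        # each pixel is stored as a 32-bit logic
FPGA_REGISTERS = 400_000   # approximate figure quoted in this report

def registers(width, height, bits=BITS_PER_PIXEL):
    """Registers needed to hold a width x height array of `bits`-bit values."""
    return width * height * bits

image_regs = registers(160, 120)   # 614,400
window_regs = registers(25, 25)    # 20,000
all_windows = 2913 * window_regs   # 58,260,000
five_windows = 5 * window_regs     # 100,000

for label, count in [
    ("input image", image_regs),
    ("one scanning window", window_regs),
    ("2913 scanning windows", all_windows),
    ("5 scanning windows", five_windows),
]:
    share = count / FPGA_REGISTERS
    print(f"{label:<24}{count:>12,}  ({share:.0%} of available registers)")
```

The input image alone exceeds the register budget, which is why it has to move to block RAM even after the scanning windows are cut to five.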

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. At this point we’re considering only doing facial detection on the FPGA, and considering using Vivado HLS to generate Verilog from our software implementation instead of writing the Verilog ourselves.

3. What deliverables do you hope to complete in the next week?

– Have progress on a Vivado HLS version of our facial detection code

– Have most of the variables requiring many registers stored in block RAM or optimized away, so that we can try synthesizing again.

Andy:

1. What did you personally accomplish this week on the project?

I improved the UI of the webapp and automated the picture-taking process so that the app can continuously take pictures of the user and identify their face without having to restart. I also integrated the webapp with the ML code that Hans worked on by calling a shell script that executes the facial detection, and we were able to successfully demo it this week.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is currently on schedule.

3. What deliverables do you hope to complete in the next week?

We now have the webapp integrated with facial detection on the software end. I hope to get the integration working with the FPGA this week, so I will be working with Sheng-Hao on that task.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The FPGA synthesis for facial detection is more challenging than we imagined. We are still working on using FPGA BRAM to store the large arrays in our code.

If we still cannot get it to work, we will look at HLS, a tool that synthesizes C code into a design for the FPGA, to make sure we have a working baseline on the FPGA.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

The FPGA implementation is taking longer than we expected, so we will keep working on it until the Wednesday status meeting. If we cannot get our Verilog code to work by Wednesday, we will look at HLS, which can synthesize C code for the FPGA.

3. Provide an updated schedule if changes have occurred.

Sheng-Hao and Hans will continue to work on facial detection on FPGA this week. Other schedules remain the same.

Week 8: 4/7-4/13

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Implemented initial facial recognition with 76% accuracy, assuming the face is in the database

I implemented the Eigenface algorithm from scratch using the Yalefaces database. For each subject, I use 5 images for training and the rest of the images for testing. There are 15 subjects in total, so there are 75 training images. There are 87 test images left.

This initial version assumes the face exists in the database, and it reaches an accuracy of around 76%. The incorrectly recognized faces are mostly due to lighting, which is a well-known weakness of the Eigenface algorithm. The next step is to figure out a threshold for deciding that a face is not in the database.

– Successfully synthesized facial detection in HLS to be able to program onto FPGA

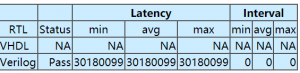

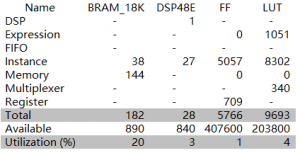

I have experimented HLS that can synthesize C code onto FPGA. The synthesis passed, and the simulation is able to finish the detection phase in about 0.3 seconds (one clock cycle is 10ns, and 30180099 clock cycles in total). We are able to reach this speed without any optimization. I will look into the optimization techniques in HLS this week to speed up the detection on FPGA. Below is the simulation result.

Below is the usage report from Vivado:

– Worked with Sheng-Hao on our own Verilog implementation for facial detection

Sheng-Hao has figured out how to use BRAM in our Verilog implementation, and we have successfully moved some of the large arrays into BRAM. We still have to figure out how to store downscaled images in BRAM.
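As a quick check on the HLS simulation time quoted earlier in this section, the runtime follows directly from the cycle count and the clock period. The helper name below is ours; the two numbers are the ones reported above.

```python
CLOCK_PERIOD_NS = 10     # one clock cycle, as reported by the simulation
CYCLES = 30_180_099      # total clock cycles for the detection phase

def runtime_seconds(cycles, period_ns):
    """Convert a cycle count and a clock period in nanoseconds to seconds."""
    return cycles * period_ns * 1e-9

elapsed = runtime_seconds(CYCLES, CLOCK_PERIOD_NS)
clock_mhz = 1e3 / CLOCK_PERIOD_NS
print(f"{elapsed:.3f} s at {clock_mhz:.0f} MHz")
```

This gives roughly 0.302 seconds, consistent with the "about 0.3 seconds" figure.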

2. Is your progress on schedule or behind?

It is on schedule.

3. What deliverables do you hope to complete in the next week?

– Improve facial recognition to be more robust against lighting

– Have a way to determine that a face is not in the database

– Optimize FPGA facial detection in HLS to reach a speedup compared to software facial detection

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Hans and I refactored int_img_calc to a pipeline with 2 stages. The first integrates each row, and the second integrates each column. We also shortened the logic bit widths where we could to avoid unnecessary register usage.

– Hans and I also refactored vj_pipeline to be in place, taking in a new scanning window only when it’s done with the current one. This makes the whole design take longer, but is a necessary sacrifice for us to actually synthesize.

– I refactored our top module to calculate the integral image only on the scanning window, which lengthens the critical path by the time required for 25 adds but lets us do away with most of the integral images we instantiated (one normal and one squared for each pyramid level’s image).

– I also refactored our top module to downscale in place, so we don’t have to instantiate each pyramid level’s image as registers.

– I also refactored our int_img_calc to use only DSPs for multiplying (some combinational and some synchronous).

– I added an ip_lib file, which contains simulation modules to emulate Vivado IP modules.

– I’m still trying to figure out how to fit the image from the laptop in block RAM in such a way that we can downscale in place, or just not use a downscaler at all. Without this piece of the puzzle, the design takes 12 hours to synthesize and also uses 400% of the available LUTs and 200% of the F7 (7 input) muxes on the FPGA. We’re pretty sure a lot of this is from all the indexing we have to do into the registers storing the image from the laptop.

– Total hours: 20 hours
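The two-stage integral image mentioned in the first item above can be modeled in software as a row pass followed by a column pass. This is a behavioral sketch in Python, not the RTL; the function names are ours, and `rect_sum` shows the standard four-lookup rectangle sum that makes the integral image useful for Viola-Jones features.

```python
def integral_image(img):
    """Two-pass integral image: prefix sums along rows, then along columns."""
    height, width = len(img), len(img[0])
    # Stage 1: integrate each row.
    rows = [[0] * width for _ in range(height)]
    for y in range(height):
        running = 0
        for x in range(width):
            running += img[y][x]
            rows[y][x] = running
    # Stage 2: integrate each column of the stage 1 result.
    out = [r[:] for r in rows]
    for x in range(width):
        for y in range(1, height):
            out[y][x] += out[y - 1][x]
    return out

def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y)."""
    def at(px, py):
        # Lookups outside the image on the top or left edge count as zero.
        return ii[py][px] if px >= 0 and py >= 0 else 0
    x2, y2 = x + w - 1, y + h - 1
    return at(x2, y2) - at(x - 1, y2) - at(x2, y - 1) + at(x - 1, y - 1)

if __name__ == "__main__":
    image = [[1, 2, 3],
             [4, 5, 6],
             [7, 8, 9]]
    ii = integral_image(image)
    print(ii[2][2])                  # sum of every pixel
    print(rect_sum(ii, 1, 1, 2, 2))  # sum of the bottom-right 2x2 block
```

The squared integral image used for variance normalization can be produced by passing the squared pixel values through the same function.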

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule. At this point there’s nothing much I can do other than to keep grinding away and hope I eventually optimize enough to make our handwritten Verilog design synthesizable.

3. What deliverables do you hope to complete in the next week?

– Have the Vivado HLS design ready to debug with ILA.

– Have a way to fit the image from the laptop in block RAM so that the design works in the way I described above.

Andy:

1. What did you personally accomplish this week on the project?

This week I experimented with improving the flow of the webapp. Currently after facial detection, a separate window opens after the image is saved. Instead of opening up the image with the faces detected in a new window, I tried to figure out a way to display the image on the canvas on the webapp instead. I had some issues with this because we converted the image to a pgm file format, which can’t be directly displayed to my knowledge. An alternative could be to convert the image back to a jpeg or png format if I can’t figure out how to display the image with the faces detected.
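The conversion alternative mentioned above can be done without extra libraries. The sketch below is a minimal, assumption-laden example: it handles only 8-bit binary PGM files (magic number `P5`), and the function names are ours. It is written in Python purely for illustration and is not taken from the webapp code.

```python
import struct
import zlib

def read_pgm(data):
    """Parse an 8-bit binary (P5) PGM. Returns (width, height, pixels)."""
    tokens, pos = [], 0
    while len(tokens) < 4:
        while data[pos:pos + 1].isspace():      # skip header whitespace
            pos += 1
        if data[pos:pos + 1] == b"#":           # skip a comment line
            while data[pos:pos + 1] not in (b"\n", b""):
                pos += 1
            continue
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        tokens.append(data[start:pos])
    magic = tokens[0]
    width, height, maxval = int(tokens[1]), int(tokens[2]), int(tokens[3])
    if magic != b"P5" or maxval > 255:
        raise ValueError("only 8-bit binary PGM (P5) is handled in this sketch")
    pos += 1  # a single whitespace byte separates the header from the pixels
    pixels = data[pos:pos + width * height]
    if len(pixels) != width * height:
        raise ValueError("pixel data is truncated")
    return width, height, pixels

def png_chunk(kind, payload):
    """Build one PNG chunk: length, type, payload, CRC over type + payload."""
    body = kind + payload
    crc = zlib.crc32(body) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + body + struct.pack(">I", crc)

def gray_to_png(width, height, pixels):
    """Encode 8-bit grayscale pixels as a PNG byte string."""
    # IHDR: size, bit depth 8, color type 0 (grayscale), no interlacing.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    # Every scanline starts with filter type 0 (no filtering).
    raw = b"".join(b"\x00" + pixels[y * width:(y + 1) * width]
                   for y in range(height))
    return (b"\x89PNG\r\n\x1a\n"
            + png_chunk(b"IHDR", ihdr)
            + png_chunk(b"IDAT", zlib.compress(raw))
            + png_chunk(b"IEND", b""))

if __name__ == "__main__":
    sample = b"P5\n# tiny test image\n3 2\n255\n" + bytes([0, 50, 100, 150, 200, 250])
    w, h, px = read_pgm(sample)
    print(len(gray_to_png(w, h, px)), "bytes of PNG")
```

The resulting bytes can be written to a `.png` file or served directly, which gives the webapp an image format that a canvas can draw.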

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

We haven’t been able to get the FPGA facial detection component working yet, so the integration between the webapp and the FPGA isn’t complete. This task depends on the completion of the FPGA facial detection.

3. What deliverables do you hope to complete in the next week?

The facial recognition is close to completion, so upon that completion, I will be integrating that component with the webapp to complete the software facial detection and recognition component of our project.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We improved our own Verilog implementation for facial detection by using BRAM to store some of the large arrays in our code. We haven’t tested synthesizing our latest code, because we still have to work on moving downscaled images into BRAM as well.

In case our own implementation still doesn’t work after these improvements, we have successfully synthesized our facial detection C code in HLS. The synthesis finished, and the facial detection module is able to finish in ~0.3s. We reach this speed without optimization, and we will look into optimization this week. Either way, we already have a baseline version for the FPGA.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Not really.

3. Provide an updated schedule if changes have occurred.

This week:

(1) continue to improve our own Verilog facial detection implementation.

(2) improve HLS version by optimizing with #pragma clauses

Week 9: 4/14-4/20

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Improved facial recognition accuracy

Last week, I used 5 training images for each subject in the Yalefaces database and reached 76% accuracy. I realized we can use more images in the training set, because each subject has more than 10 images in the database. I tried using 6 to 9 training images per subject and reached the highest test accuracy with 8 training images per subject. I have told Andy to take 8 images when someone wants to add themselves to the system.

Based on current testing with the Yalefaces database, the recognition system reaches 80% accuracy.

– Integrated the recognition system with the webapp

I worked with Andy to integrate the recognition system with the webapp. I have set up a folder to record all added faces, so that the app can read the entire database correctly when it reboots. Every time a new person adds their face to the database, the model retrains to include the newly added faces. We have tested the app, and users should be able to add their faces and recognize themselves later.

– Obtained the bitstream for FPGA facial detection; testing is in progress

I have worked with Sheng-Hao to integrate the HLS output with Sheng-Hao’s UART implementation. We have successfully obtained the bitstream for the FPGA. We are currently testing by sending real image data to the FPGA to see if it correctly outputs the coordinates of any faces it finds.

2. Is your progress on schedule or behind?

It is on schedule.

3. What deliverables do you hope to complete in the next week?

– Test facial detection FPGA bitstream, and integrate with webapp

– Optimize FPGA facial detection in HLS to reach a speedup compared to software facial detection

– I still need to find out a way to detect if a face is not found in the recognition system.

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Hans gave me a working HLS implementation at 50 MHz and I combined it with the uart_rcvr and uart_tcvr modules, along with some ILA modules from the Vivado IP library, to get a debuggable facial detection design on the FPGA.

– I found a variable in uart_rcvr that isn’t zeroed out on reset, but is completely rewritten every time data is received over UART. I zeroed it out on reset and, somehow, that fixed our design: it now synthesizes, implements, and generates a bitstream. However, there are timing issues.

– Total hours: 20 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule, but only for the handwritten Verilog design. At this point there’s nothing much I can do other than to keep grinding away and hope I eventually optimize enough to make our handwritten Verilog design synthesizable. I’m on schedule for the Vivado HLS design.

3. What deliverables do you hope to complete in the next week?

– Have the Vivado HLS design ready to demo on the FPGA.

– Have a way to fit the image from the laptop in block RAM so that the design works in the way I described above.

Andy:

1. What did you personally accomplish this week on the project?

This week I mostly spent time making improvements on the webapp and finishing up the integrations between the app and the facial detection and recognition. There were a lot of relative paths that needed to be updated as we moved around the folder structure of our project a fair amount. I updated the webapp to take 8 pictures of the user to add to our database of images. I also finished integrating the facial recognition and the entire flow works for users to add their face to the database and identify themselves now.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

3. What deliverables do you hope to complete in the next week?

The software components are functional and should be ready for the demo on Wednesday. We should be able to get some tests done as a benchmark to see how accurate our facial recognition is. I would also like to add a final screen displaying the name of the user that was recognized by the facial recognition.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We have successfully output the bitstream for FPGA facial detection using the HLS approach. This addresses one of the major hurdles in our project, and establishes our baseline for our optimization.

Currently all subsystems are functional. Our final goal is to optimize our FPGA facial detection to achieve a speedup over the software facial detection. We have made some initial plans for optimizations:

– Use more registers in our code, because the current flip-flop utilization is really low

– Use optimization clauses in HLS to pipeline loops in our implementation

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Not really.

3. Provide an updated schedule if changes have occurred.

No changes.

Week 10: 4/21-4/28

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Improved FPGA facial detection performance by making the clock run at 200 MHz. This optimization cuts the runtime by about half.

– Tried a number of other optimizations in HLS, including using more registers, indicating dependencies between code sequences, loop unrolling, and loop pipelining. Loop pipelining helps the most, cutting the runtime from 0.08s to 0.03s. Software facial detection takes about 0.05s, so the FPGA now outperforms software facial detection by about 20%.

– Worked on the final presentation.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

It is on schedule.

3. What deliverables do you hope to complete in the next week?

– Practice presentation since I am presenting.

– Dig into HLS more and try to improve the FPGA performance further.

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Removing the ILA from our design fixed our timing issues, and we were able to demo the HLS design at 50 MHz at Wednesday’s demo.

– Hans gave me a working HLS implementation at 200 MHz. It had issues with timing again, but I eventually fixed them by synchronizing uart_tx and uart_tx in the top module.

– Hans and I finished the final presentation.

– Total hours: 20 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am behind schedule for the handwritten Verilog design. At this point there’s nothing much I can do other than to keep grinding away and hope I eventually optimize enough to make our handwritten Verilog design synthesizable. I’m on schedule for the Vivado HLS design.

3. What deliverables do you hope to complete in the next week?

– Have a way to fit the image from the laptop in block RAM so that the design works in the way I described above.

Andy:

1. What did you personally accomplish this week on the project?

This week I updated the flow of the webapp so that the home page offers a choice between adding to the database and facial recognition. Previously, every user had to enter a name, even if they were just checking their identity; now users are prompted for their name only when adding to the database. I also edited the facial recognition page to display the image of the user, along with the name they are recognized as. There was also an issue with users’ names not being updated in the session, so I fixed that problem.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

3. What deliverables do you hope to complete in the next week?

The functionality that we wanted to get done is complete. For the next week, we will be comparing the performance of the FPGA facial detection and the software implementation. During the demo, our TAs and Professor Savvides suggested that we display the runtimes on the screen, which is something we might consider adding to our webapp. Other than that, I will be cleaning up the codebase and doing some tests to check for any bugs that we might have.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We have more than our MVP at this point, with a 200 MHz working design using Vivado HLS (which was our contingency plan).

Our final goal is still speedup so Hans will keep optimizing in HLS and Sheng-Hao will keep working at the handwritten Verilog version.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Not really.

3. Provide an updated schedule if changes have occurred.

No changes.

Week 11: 4/29-5/4

Hans:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

– Finished final presentation

– Worked on final report

– Tried other HLS optimizations, but nothing worked particularly well at this point. Our final speedup on detecting one face in the image is 1.6x, which is what we stated in the final report.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

3. What deliverables do you hope to complete in the next week?

– Finish demo and report

Sheng-Hao:

1. What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week was a little hectic so I wasn’t able to get as much done.

– I tried to keep optimizing the Verilog design, but didn’t make substantial progress. At this point I’m making the decision to just move on to the final report.

– Total hours: 3 hours

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule.

3. What deliverables do you hope to complete in the next week?

– Final Report

Andy:

1. What did you personally accomplish this week on the project?

This week was the final presentation and we went in with our project fully functional. I didn’t add anything new to the project this week. I mainly ran the project manually and looked to see if there were any bugs that could occur from users whose faces could not be detected or in situations with bad lighting. From my testing, I didn’t find any obscure cases that would cause an error.

2. Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

3. What deliverables do you hope to complete in the next week?

Give a good demo on demo day and work on the final report.

Team Status:

1. What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

Nothing.

2. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Not really.

3. Provide an updated schedule if changes have occurred.

No changes.