Tianhan Hu:

This week I continued to work on the hand-button interaction detection part of our project.

After going through some research papers, I found that it is really hard to distinguish a hand held above the table from a hand pressing on the table. I discussed the issue with my teammates, and we decided to fall back to a less intuitive but much more realistic approach: hand-gesture detection. This means that the user now changes hand gesture over the button he/she wants to activate instead of pressing on it.

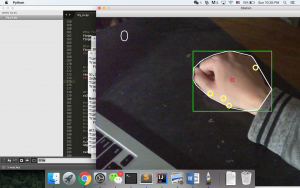

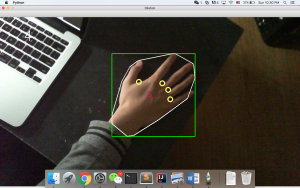

The first approach, which I am implementing right now, is to first extract the hand contour with skin color detection, then calculate the convex hull and convexity defects, and finally detect gestures by calculating the distance from the fingertips to the finger webs.
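A minimal sketch of the last step (counting fingers from convexity defects) might look like the following. It is not our actual implementation. It assumes the earlier steps have already produced, for each defect, a `(start, end, far)` triple of pixel coordinates, for example by converting the output of OpenCV's `cv2.convexityDefects` into points. The function name `count_fingers`, the thresholds `min_depth` and `max_angle_deg`, and the angle check itself are illustrative assumptions rather than tuned values.

```python
import math


def count_fingers(defects, min_depth=40.0, max_angle_deg=90.0):
    """Estimate the number of raised fingers from convexity defects.

    Each defect is a (start, end, far) triple of (x, y) points:
    start and end lie on the convex hull (candidate fingertips),
    far is the deepest contour point between them (candidate finger web).
    Thresholds are hypothetical and would need tuning per camera setup.
    """
    webs = 0
    for start, end, far in defects:
        a = math.dist(start, far)  # fingertip to web
        b = math.dist(end, far)    # neighbouring fingertip to web
        c = math.dist(start, end)  # fingertip to fingertip
        if a == 0 or b == 0 or min(a, b) < min_depth:
            continue  # too shallow to be a gap between two fingers
        # Angle at the web point, from the law of cosines.
        cos_angle = (a * a + b * b - c * c) / (2 * a * b)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
        if angle <= max_angle_deg:
            webs += 1
    # n webs separate n + 1 fingers; with no web we report 0.
    return webs + 1 if webs else 0


# Synthetic example: four deep, narrow gaps, as in an open hand.
gap = ((-30, 100), (30, 100), (0, 0))
open_hand = [gap] * 4
print(count_fingers(open_hand))
```

One limitation of this kind of counting is that a fist and a single raised finger both produce zero webs, so they cannot be told apart without an extra cue. A gap that fails either threshold also lowers the count by one.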

Here are some preliminary results:

As you can see in the last picture, while I have all my fingers out, the program says there are only four fingers. Thus, much still needs to be done to fix this error.

Xinyu Zhao:

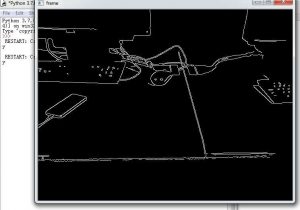

This week I experimented more with Canny edge detection and the video stream module in Python. Compared with the static image version, I think the boundaries captured in the video stream are somewhat more definite.

I also tried a method which extracts the meaningful coordinates from images, but it still needs improvement as it’s not very accurate.

I think I am still a little behind schedule, as a more complete solution is needed, so next week I should manage my time better. Next week I also want to work on improving the coordinate extraction and do more testing. The deliverables are a working algorithm for the above problem and a plan for camera adjustment.
