Tianhan Hu:

This week I spent most of my time teaching myself OpenCV with Python 3, since computer vision will be the core of our project. I installed OpenCV in a virtual environment and worked through the following tutorial: https://www.pyimagesearch.com/2018/07/19/opencv-tutorial-a-guide-to-learn-opencv/

After that, I worked through the methods described on this page of hand gesture detection tutorials: https://www.intorobotics.com/9-opencv-tutorials-hand-gesture-detection-recognition/

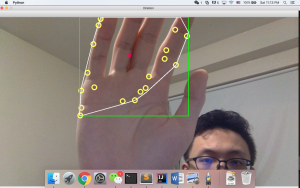

As an end result, I was able to write a small piece of code that is able to detect hand movement based on some sample code included in the tutorial. The sample picture is as below:

I think I am currently on schedule. Next week, I will look into computer vision algorithms to refine this code so that it can differentiate between a hand “pushing” on a surface and a hand just “moving over” it.

Xinyu Zhao:

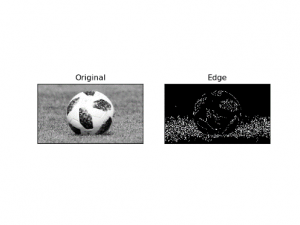

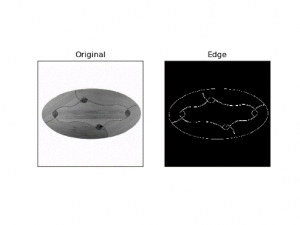

Because the first part of our project is capturing the dimensions of a surface (a table), I learned about edge detection, studied sample code for Canny edge detection, and ran it many times to get a feel for its effect. I also thought about how well it could work for our purpose.

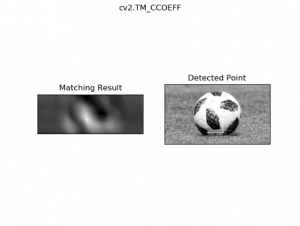

I also studied the code for template matching in OpenCV to understand how it works, since we’ll be doing it in later stages. (The detection “rectangle” can’t be seen if the images are smaller.)

I think our progress is a little behind schedule. We haven’t talked to professors who specialize in this area yet, and I believe we need to do more research into possible solutions for the initial AR part.

For next week, I hope we can get a working table (edge) detection algorithm and also ask professors who specialize in this area about general ideas and implementations.
