Our demo video is located here

~Ajay

Ajay

This week we had our presentation and finished all of our metrics. We achieved an mAP@50 of 99% and an mAP@75 of 91%, well above our requirement of 80%. Our accuracy was 73%, which is acceptable for our use case because of our queuing heuristics. I spent most of this week building the compute mAP function. I used the INRIAPerson dataset and wrote the intersection and union functions myself. We ran the function on the p2.xlarge instance. Beyond that, we are working on our final report and preparing the poster.
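A minimal sketch of IoU and single-class average precision, assuming boxes in (x1, y1, x2, y2) form and PASCAL VOC-style greedy matching with all-point interpolation. This is not necessarily how the project's compute mAP function was written, and the `Detection` tuple layout is an assumption. Because INRIAPerson has a single class, mAP reduces to the AP of the person class; mAP@75 is the same call with `iou_threshold=0.75`.

```python
from typing import Dict, List, Tuple

# Axis-aligned box as (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]
# One detection: (image_id, confidence_score, box). Hypothetical layout.
Detection = Tuple[str, float, Box]


def intersection(a: Box, b: Box) -> float:
    """Area where the two boxes overlap (0.0 if they do not touch)."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def union(a: Box, b: Box) -> float:
    """Area covered by either box: area(a) + area(b) - overlap."""
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return area_a + area_b - intersection(a, b)


def iou(a: Box, b: Box) -> float:
    u = union(a, b)
    return intersection(a, b) / u if u > 0 else 0.0


def average_precision(detections: List[Detection],
                      ground_truth: Dict[str, List[Box]],
                      iou_threshold: float = 0.5) -> float:
    """AP for one class. Detections are matched greedily in descending score
    order; each ground-truth box can be claimed by at most one detection."""
    total_gt = sum(len(boxes) for boxes in ground_truth.values())
    if total_gt == 0:
        return 0.0
    claimed = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for i, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, i
        if best_idx >= 0 and best_iou >= iou_threshold and not claimed[img][best_idx]:
            claimed[img][best_idx] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / total_gt)
    # All-point interpolation: each precision becomes the best precision
    # reached at that recall or any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```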

Vayum

This week we put the final touches on the project to make sure it was fully functional and working as intended. We tweaked our graph.js interface to make it animated and dynamic, and overall we increased our precision values.

Team Report

Overall, our team is essentially done. Our schedule is finalized, and we are working together on the final report.

Ajay

This week I spent most of my time cleaning up the code base and verifying that all the EC2 connections were still working properly. We also ran into an issue with IT, who kept flagging my Raspberry Pi as an insecure device and restricting its network access. After I repeatedly told them that I had secured the device, they eventually realized that the vendor software they use to scan the network had a bug, and our access was restored. I spent the other half of the week building the final presentation and practicing it. I also verified our final metrics and our mAP calculations. Most of these lined up closely with our target numbers, except that the accuracy of our characteristic matching was somewhat low. In general, the product works well, but there are places where we could improve it.

Team Report

Our in-lab demo went well. We are still coordinating practice for our final demo and deciding what it will look like. We will start on the final report and poster next week.

Vayum

This week I created an API to integrate the data from Ajay’s facial recognition algorithm seamlessly into our web application. I used Django web requests and Postman, and wrote a standalone program that listens for POST requests, parses the JSON data, and calculates a moving average over the continuous data stream. This part calculates the average wait time based on the data we get from our cameras and Raspberry Pis. We still need to figure out how to integrate this data with Peter’s part, including the occupancy component, and with the rest of the team’s work. Other than that, I would say we are up to date and on track.
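A minimal sketch of the POST-parsing and moving-average step, using only the Python standard library rather than Django. The payload field name `wait_time`, the helper names, and the window size are hypothetical; the entry does not say whether the moving average is windowed, so this assumes a fixed-size window over the most recent samples.

```python
import json
from collections import deque


class MovingAverage:
    """Moving average over the most recent `window` samples of a stream."""

    def __init__(self, window: int = 10):
        if window <= 0:
            raise ValueError("window must be positive")
        self.samples: deque = deque(maxlen=window)
        self.total = 0.0

    def add(self, value: float) -> float:
        """Add one sample and return the average of the current window."""
        if len(self.samples) == self.samples.maxlen:
            self.total -= self.samples[0]  # the oldest sample is about to be evicted
        self.samples.append(value)
        self.total += value
        return self.total / len(self.samples)


def handle_post_body(body: str, averager: MovingAverage) -> float:
    """Parse one JSON POST body and fold its wait time into the moving average.

    Assumes a hypothetical payload such as {"camera_id": "cam-1", "wait_time": 42.0}.
    """
    payload = json.loads(body)
    return averager.add(float(payload["wait_time"]))
```

Keeping a running total means each update costs O(1) regardless of the window size.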

Ajay:

This week I got most of the functionality working for the entire REID system. I finished the Raspberry Pi code, so we can now take photos, upload them to S3 buckets, and call the appropriate POST endpoints to trigger the storage and detection functions. I also found a 4x speedup in the Raspberry Pi code: the camera was being turned on and off repeatedly, so I forced it to stay on for the duration of the program, which sped up the Pi's overall operation by 4x. I finished writing the DB on the EC2 instance, so we can now store our feature vectors and persist them. The histogram functions are also finished, so we can extract histograms from the bounding box and persist them. I also wrote the matching function, which uses the Bhattacharyya distance. It seems to work well, but we are still doing final testing to verify all the numbers. This coming week I need to do the mAP calculations and the remaining metric verification.
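A minimal sketch of histogram matching with the Bhattacharyya distance. It normalizes both histograms, computes the Bhattacharyya coefficient BC = sum over bins of sqrt(p_i * q_i), and reports sqrt(1 - BC); another common variant uses -ln(BC) instead. The gallery dictionary, `best_match` helper, and the `max_distance` cutoff of 0.3 are hypothetical, not values from the project.

```python
import math
from typing import Dict, List, Optional, Sequence


def normalize(hist: Sequence[float]) -> List[float]:
    """Scale a histogram so its bins sum to 1."""
    total = sum(hist)
    if total <= 0:
        raise ValueError("histogram must have positive mass")
    return [h / total for h in hist]


def bhattacharyya_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """0.0 for identical distributions, 1.0 for non-overlapping ones."""
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    p_n, q_n = normalize(p), normalize(q)
    bc = sum(math.sqrt(a * b) for a, b in zip(p_n, q_n))  # Bhattacharyya coefficient
    return math.sqrt(max(0.0, 1.0 - bc))  # clamp tiny negatives from rounding


def best_match(query: Sequence[float],
               gallery: Dict[str, Sequence[float]],
               max_distance: float = 0.3) -> Optional[str]:
    """Return the stored ID closest to `query`, or None if nothing is close enough."""
    best_id: Optional[str] = None
    best_d = float("inf")
    for person_id, hist in gallery.items():
        d = bhattacharyya_distance(query, hist)
        if d < best_d:
            best_id, best_d = person_id, d
    return best_id if best_d <= max_distance else None
```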

Team Report:

We are still on track for the final demo, but we need to check how the occupancy part of the project is doing, so we will spend next week testing and debugging it to measure its accuracy.

Week 7 Status Report

Vayum:

This week I focused on developing the heat map. This turned out to be more challenging than I expected, both in designing the heat map itself and in converting it from static data to dynamic data that is always changing. Originally, the heat map displayed only static data. We initially simulated people moving from one side of the room to the other by feeding in fake data, but according to the online resources I found, there was no straightforward way to create a dynamic heat map of a table on the actual floor plan. After a lot of research, I had to import several modules, which proved very difficult to incorporate.
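A minimal sketch of one way to make a grid heat map respond to live data: each update first decays every cell, then adds one unit of heat per observed position, so old activity fades while new activity accumulates. The grid size, decay factor, class name, and (row, col) position format are assumptions; mapping cells onto the actual floor plan and rendering them in the front end are left out.

```python
from typing import Iterable, List, Tuple


class DecayingHeatMap:
    """Grid heat map over a floor plan where older observations fade over time."""

    def __init__(self, rows: int, cols: int, decay: float = 0.8):
        if not 0.0 <= decay <= 1.0:
            raise ValueError("decay must be between 0 and 1")
        self.rows, self.cols = rows, cols
        self.decay = decay
        self.grid: List[List[float]] = [[0.0] * cols for _ in range(rows)]

    def update(self, positions: Iterable[Tuple[int, int]]) -> None:
        """Fade every cell, then add one unit of heat per (row, col) observation."""
        for row in self.grid:
            for c in range(self.cols):
                row[c] *= self.decay
        for r, c in positions:
            if 0 <= r < self.rows and 0 <= c < self.cols:  # ignore off-plan points
                self.grid[r][c] += 1.0

    def normalized(self) -> List[List[float]]:
        """Scale cells to 0..1 relative to the hottest cell, e.g. for a color scale."""
        peak = max(max(row) for row in self.grid)
        if peak == 0:
            return [[0.0] * self.cols for _ in range(self.rows)]
        return [[v / peak for v in row] for row in self.grid]
```

The normalized grid is what a chart library would typically receive on each refresh.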

Overall, I am a little behind on this and need to focus on speeding up the process. I don't want to spend too much time stuck on it, and after this I will need to move on to other parts of the project.

Ajay:

Last week I wrote a small characteristic matching function that compares the histograms. It was a brute-force approach, since I wanted to see how well a simple brute-force algorithm would work. It did not work well, so I will implement the Bhattacharyya distance metric this week. We also received our Raspberry Pis this week, so we will port our camera code to the Pi to ensure it works there.

Team report:

Overall, our team is slightly behind, as we only received our Raspberry Pis this week. This delay is accounted for in our slack time, and we will be able to catch up.

Ajay

This week I ported my code to AWS. The biggest issue I faced was one I didn’t expect at all: when I tried to reserve a p2.xlarge instance on AWS, I was immediately blocked. To resolve this, I had to contact AWS support to have the instance limit lifted for my account. After that, we were able to test our system on AWS and immediately saw huge gains from the GPU. On a 6th-generation Intel Core i5 processor, object detection with YOLO took around 25 seconds; on the Tesla K80, it took 0.15 seconds. Our current bottleneck is the way we store photos, since uploading a photo to the S3 bucket takes around 2 seconds. If we find another way to store these photos we may switch to it, but for now I think this is our best approach. I also wrote the histogram function and sketched out the function for calculating the average wait time, which keeps the total wait time so far and averages in each new value. This coming week I want to work on the matching functionality; I had hoped to get to it this week, but the AWS setup took longer than expected.
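The wait-time calculation described above (keep the total so far and average in each new value) amounts to a cumulative mean. A minimal sketch, where the class name `RunningWaitTime` and the use of seconds are hypothetical:

```python
class RunningWaitTime:
    """Cumulative average wait time: keep the running total and count, and fold
    each new measurement into the average."""

    def __init__(self) -> None:
        self.total = 0.0
        self.count = 0

    def add(self, wait_seconds: float) -> float:
        """Record one wait time and return the updated average."""
        self.total += wait_seconds
        self.count += 1
        return self.total / self.count

    @property
    def average(self) -> float:
        """Average so far, or 0.0 before any measurement arrives."""
        return self.total / self.count if self.count else 0.0
```

Every past value carries equal weight here, so the average reacts slowly to sudden changes in the queue.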

Vayum

I finished the home page dashboard and the layout for the additional page after it, and connected the web service. We also sketched out the API for how the web app will interact with everything else, including the YOLO detection system and the hardware sensors. I still have to write the algorithms and populate our database with real data from our Pis and sensors. We also ordered parts, so we are basically ready to put everything together. I would say we are on track.

Team Report

In terms of team status, we are a little behind because the AWS setup took so much time. However, I think we can recover the time, because now that we are set up on AWS, testing the system will be much faster. Other than that, we have sketched out the diagram for connecting our individual parts and designed the network between them. We need to build and test it this week.

Ajay

We missed the last status report, so this update covers everything we’ve done so far. I’ve gotten the function working that takes a photo, recognizes a person, and outputs the bounding box. This was a simple application of the YOLO algorithm. After that, I wrote the histogram function to extract color data from the image. I’m currently running this on a CPU, so my main task this week is getting it running on an AWS instance. This week I also want to write the matching functionality so we can start getting matches between photos.
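A minimal sketch of a color histogram over a bounding-box crop, assuming the image is a list of rows of (R, G, B) tuples and the box is (x1, y1, x2, y2) in pixel coordinates with exclusive end points. The joint 3-D RGB binning and the default of 4 bins per channel are assumptions for illustration, not necessarily what the project's histogram function does.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2); x2 and y2 are exclusive


def color_histogram(image: Sequence[Sequence[Pixel]], box: Box, bins: int = 4) -> List[float]:
    """Normalized joint RGB histogram of the pixels inside `box`.

    Each channel is split into `bins` equal ranges, giving bins**3 cells.
    """
    x1, y1, x2, y2 = box
    step = 256 / bins
    hist = [0.0] * (bins ** 3)
    count = 0
    for row in image[y1:y2]:
        for r, g, b in row[x1:x2]:
            ri, gi, bi = int(r // step), int(g // step), int(b // step)
            hist[(ri * bins + gi) * bins + bi] += 1
            count += 1
    if count == 0:
        return hist  # empty crop: all zeros
    return [h / count for h in hist]
```

Normalizing by the pixel count makes histograms from differently sized bounding boxes comparable.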

Vayum

I’ve made good progress overall on the web application portion of the project. I am currently working on the views and controller parts of the backend to handle dynamically changing data. I’ve set up the database with all the relevant fields, have the front page working with our basic setup, and wrote the server code. This week, the plan is to finish the controller and try to get the additional pages working. I have been stuck on some bugs on the backend side, so hopefully I will resolve them within the next few days. Apart from that, I will soon need to start integrating with Ajay and Peter to ensure that data transfer from the hardware portions of the project goes smoothly.

Team Report

Overall, we are on schedule and working well together. We have placed the orders for our parts, and they should arrive within the next week. There are no major risks at this point, but we are monitoring the speed of the algorithms, since that is the main point we are a little worried about. Overall, there are no schedule changes.

Vayum

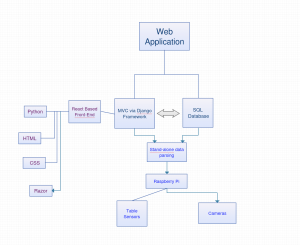

This week I worked on developing the socket needed to transfer data from our cameras and sensors to a central server for all of our data processing. I also specified the web app implementation and began working on the MVC architecture it needs. The implementation is shown in the image above.
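One simple way to move readings from a camera or sensor to the central server is newline-delimited JSON over a TCP socket. The sketch below assumes that framing (the entry does not specify a wire format), and the helper names and field names in the example are hypothetical. `json.dumps` escapes any newline inside strings, so one line always holds exactly one reading.

```python
import json
import socket
from typing import Iterator


def send_reading(sock: socket.socket, reading: dict) -> None:
    """Send one reading as a single line of JSON (newline-delimited framing)."""
    sock.sendall((json.dumps(reading) + "\n").encode("utf-8"))


def receive_readings(sock: socket.socket) -> Iterator[dict]:
    """Yield readings one at a time until the sender closes its end."""
    with sock.makefile("r", encoding="utf-8") as stream:
        for line in stream:
            line = line.strip()
            if line:  # skip blank lines
                yield json.loads(line)
```

For a local test without a network, `socket.socketpair()` returns two connected sockets: send from one, close it, then read from the other until end of stream.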

I also looked further into the predictive features for estimating how busy the restaurant is. After researching how Yelp and Google Popular Times do it, I plan to build a KNN classifier that segments times from busiest to least busy, with a bar graph or something similar to display the relevant information.
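A minimal k-nearest-neighbors sketch for labeling time slots by busyness. The feature choice (day of week with 0 = Monday, hour of day), the labels, and k = 3 are assumptions for illustration; in practice the hour might be encoded cyclically so that 23:00 and 00:00 end up close together.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# One labeled example: (feature vector, label), e.g. ((day_of_week, hour), "busy").
Sample = Tuple[Sequence[float], str]


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> str:
    """Majority label among the k training points closest to `query` (Euclidean)."""
    if not train:
        raise ValueError("need at least one training sample")
    k = min(k, len(train))
    neighbors = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    top = max(votes.values())
    # Break ties in favor of the label held by the closest neighbor.
    return next(label for _, label in neighbors if votes[label] == top)
```

Predicting a label for every hour of the week would give the segments a bar graph could display.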

The next step is to generate test data to check whether my socket works and to decide how best to format my database. Most of the backend implementation and its integration with the front end will begin after the break. So far I am on schedule with everything I am doing, and I think we are progressing well.

Ajay

This week we spent most of our time on the design review and on writing the report and getting it well specced out.

In terms of the re-identification work, I did not accomplish as much as I would have liked. I got YOLO v3 tiny to work, but this was not very important, since a GPU instance will be more than fast enough with the default YOLO detector for our purposes. I did more reading, and I think I have settled on the algorithmic approach for determining the dominant color regions for the feature vector: after convolving the image to blur the colors together, I will use a connected components approach to find the color blobs. I am also looking at extracting an RGB/YUV histogram into the feature vector. Next week I want to place the orders for the Raspberry Pis and cameras and get a rudimentary feature extractor working.
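A minimal sketch of the blur-then-connected-components idea in pure Python: a 3x3 mean filter (one simple choice of convolution kernel) smooths each channel, colors are quantized into a few levels per channel, and a breadth-first search groups 4-connected pixels of the same quantized color into blobs. The kernel, the 4 quantization levels, and 4-connectivity are assumptions, not settled choices from the report.

```python
from collections import deque
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255
Image = Sequence[Sequence[Pixel]]


def box_blur(img: Image) -> List[List[Pixel]]:
    """3x3 mean filter per channel; edge pixels average only their in-bounds neighbors."""
    h, w = len(img), len(img[0])
    out: List[List[Pixel]] = []
    for y in range(h):
        row: List[Pixel] = []
        for x in range(w):
            acc, n = [0, 0, 0], 0
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    for c in range(3):
                        acc[c] += img[yy][xx][c]
                    n += 1
            row.append((acc[0] // n, acc[1] // n, acc[2] // n))
        out.append(row)
    return out


def quantize(p: Pixel, levels: int = 4) -> Tuple[int, int, int]:
    """Map each channel to one of `levels` buckets so similar shades compare equal."""
    step = 256 // levels
    return (min(p[0] // step, levels - 1),
            min(p[1] // step, levels - 1),
            min(p[2] // step, levels - 1))


def color_blobs(img: Image, levels: int = 4) -> List[Tuple[Tuple[int, int, int], int]]:
    """Group 4-connected pixels of equal quantized color; return (color, size), largest first."""
    h, w = len(img), len(img[0])
    q = [[quantize(p, levels) for p in row] for row in img]
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx]:
                continue
            color, size = q[sy][sx], 0
            seen[sy][sx] = True
            queue = deque([(sy, sx)])
            while queue:  # breadth-first flood fill of one component
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and q[ny][nx] == color:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append((color, size))
    return sorted(blobs, key=lambda b: b[1], reverse=True)
```

The first entry of `color_blobs(box_blur(crop))` gives a candidate dominant color and its pixel count.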

Ajay

This week I worked with darknet and the YOLO v3 detector. After running it on my laptop, I got the weights working correctly and was able to detect people within images. The one issue I found was that it took about 8 seconds per detection on a laptop CPU, which means I need a GPU to classify images within our performance constraints. Next week I want to try YOLO v3 tiny, a more lightweight version of the object detector that might be good enough for our use case. I also want to work more with the pixel data and the convolution function to see if I can extract the color data from the image.

Vayum

This week I interfaced with the sensor data to check whether it matched the format we want to store in our DB. I realized I need to write a standalone data-parsing program to convert the JSON fields into fields that are easily accessible in the backend, and that I might need to do the same for the camera data.
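A minimal sketch of the standalone parsing step: nested JSON objects are flattened into underscore-joined keys that map directly onto table columns. The sensor message shape in the example is hypothetical, since the report does not give the actual format, and lists are deliberately left unflattened.

```python
import json
from typing import Any, Dict


def flatten(obj: Dict[str, Any], prefix: str = "", sep: str = "_") -> Dict[str, Any]:
    """Turn {"a": {"b": 1}} into {"a_b": 1} so each leaf maps to one column."""
    flat: Dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value  # lists and scalars are kept as-is
    return flat


def parse_sensor_message(raw: str) -> Dict[str, Any]:
    """Parse one raw JSON message from a sensor into a flat dict of column values."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return flatten(data)
```

The same function would apply unchanged to camera messages, as long as they are JSON objects.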

Next week I am going to work on writing the socket and applying the same parsing to the socket data, assuming I can form a connection properly. We also decided to use SQL for the database, as it was the most common and best-suited option we had.
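A minimal sketch of storing parsed readings in a SQL database, using Python's built-in `sqlite3` module as a stand-in for whichever SQL engine the project uses. The table name, columns, and helper names are hypothetical.

```python
import sqlite3
from typing import Any, Dict, Optional

SCHEMA = """
CREATE TABLE IF NOT EXISTS readings (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    sensor_id TEXT    NOT NULL,
    occupancy INTEGER NOT NULL,
    ts        TEXT    NOT NULL
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def store_reading(conn: sqlite3.Connection, row: Dict[str, Any]) -> None:
    """Insert one parsed reading; placeholders avoid SQL injection."""
    conn.execute(
        "INSERT INTO readings (sensor_id, occupancy, ts) VALUES (?, ?, ?)",
        (row["sensor_id"], row["occupancy"], row["ts"]),
    )
    conn.commit()


def latest_occupancy(conn: sqlite3.Connection, sensor_id: str) -> Optional[int]:
    """Most recent occupancy value for a sensor, or None if it has no readings."""
    cur = conn.execute(
        "SELECT occupancy FROM readings WHERE sensor_id = ? ORDER BY id DESC LIMIT 1",
        (sensor_id,),
    )
    result = cur.fetchone()
    return result[0] if result else None
```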

I would say that I am on schedule, barring any setbacks next week, and overall the project is coming along well.