Team Status

Risks and Contingency

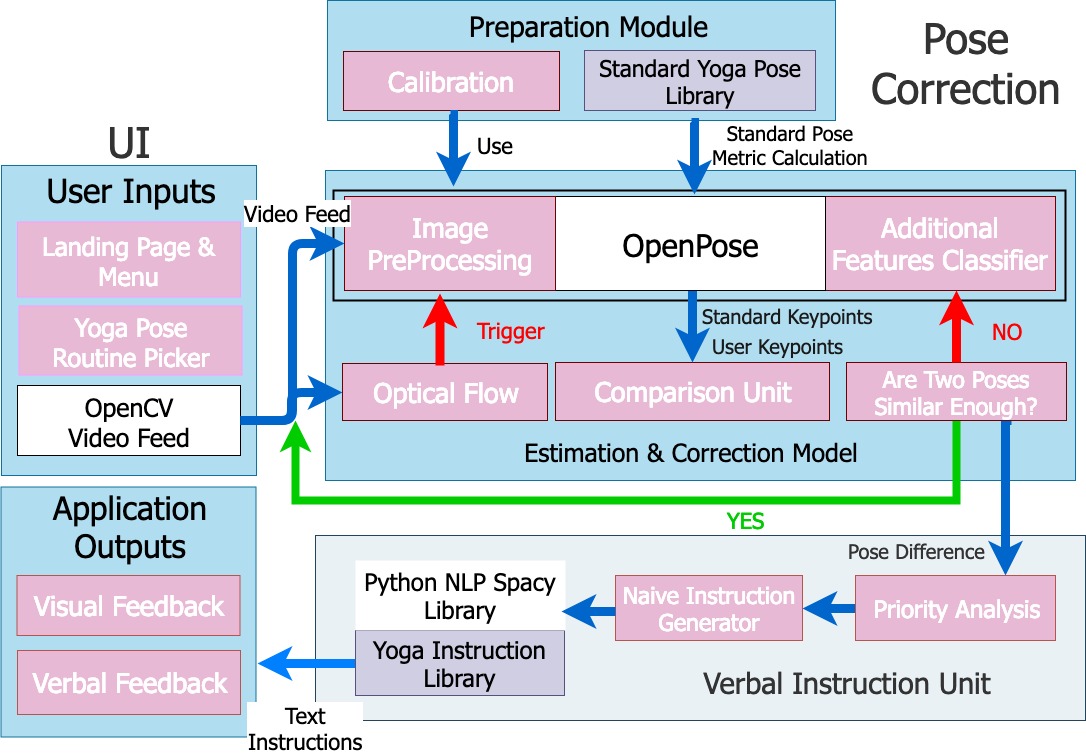

At this point, most of our application’s functionality is complete. The major remaining risk is the unstable environment for the webcam demo. We encountered the following two problems while testing our application:

- Unstable lighting conditions. We tested our application in the ECE coves, where the major light source is natural light. On cloudy days, the webcam can only capture dim and blurry images, and these images don’t work well with OpenPose: it always reports important keypoints as missing when performing pose estimation on them. Since the accuracy of OpenPose relies heavily on the lighting conditions of the input images, we need to find a stable and strong light source for our final demo.

- The webcam cannot focus well on objects or people located more than 1.5m away from it. Because the newly bought webcam cannot focus at that distance, it cannot capture clear images of people standing that far away, which affects the performance of OpenPose. We suspect that this is also caused by the weak lighting we had when testing the webcam. We will test the webcam with a stronger light source and see how it performs.

Schedule Changes and Updates

We are a bit ahead of schedule and have most functionality working correctly. There are no changes to our current schedule.

Tian’s Status

Accomplished Tasks

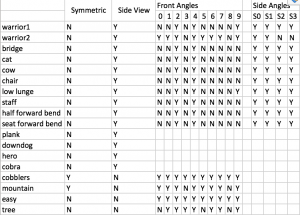

- Finished static image testing on all poses. I collected incorrect and correct samples for each newly added yoga pose, and tested the instruction generator based on these samples.

- Implemented Threshold Manager, a struct that dynamically adjusts the thresholds for each pose as the user attempts it. For example, if the user keeps getting the same instruction “Jump Your Feet Apart” for Warrior 1, this implies that they might not be flexible enough to open their hips as far as the standard pose requires. The Threshold Manager will then loosen the threshold for the angle between the hips so the user can pass the pose after making some effort, instead of getting stuck on it.

- Integrated and tested the entire application with Sandra and Chelsea. We worked together to integrate all the parts: controller, optical flow, UI, pose comparison and instruction generation. We also performed webcam testing on all the poses and adjusted thresholds accordingly.

- Implemented functions that flip a pose horizontally and flip an instruction horizontally (i.e., exchanging all left and right counterparts in the instruction). This way, for poses that come in two facing directions, such as Left-Facing Low Lunge and Right-Facing Low Lunge, we only need to implement one of them.
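
The threshold-loosening idea described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the class layout, the angle name `hip_angle`, and the numbers (loosen after 3 repeated instructions, 5 degrees per step, capped at 20 extra degrees) are all assumptions chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ThresholdManager:
    """Loosens a per-angle threshold when the same instruction keeps repeating.

    All parameter values here are hypothetical defaults for illustration.
    """
    base_thresholds: dict               # angle name -> allowed deviation (degrees)
    repeats_before_loosening: int = 3   # assumed: loosen after 3 repeats
    loosen_step: float = 5.0            # assumed: degrees added per loosening
    max_extra: float = 20.0             # assumed: cap on total loosening
    _repeat_counts: dict = field(
        default_factory=lambda: defaultdict(int), init=False)
    _extra: dict = field(
        default_factory=lambda: defaultdict(float), init=False)

    def threshold(self, angle):
        """Current allowed deviation for this angle, including any loosening."""
        return self.base_thresholds[angle] + self._extra[angle]

    def record_instruction(self, angle):
        """Call each time an instruction about this angle is issued again."""
        self._repeat_counts[angle] += 1
        if self._repeat_counts[angle] >= self.repeats_before_loosening:
            self._repeat_counts[angle] = 0
            self._extra[angle] = min(
                self._extra[angle] + self.loosen_step, self.max_extra)

    def is_within(self, angle, user_deg, standard_deg):
        """True if the user's angle is close enough to the standard pose."""
        return abs(user_deg - standard_deg) <= self.threshold(angle)


manager = ThresholdManager({"hip_angle": 10.0})
print(manager.is_within("hip_angle", 75.0, 90.0))  # 15 degrees off, limit 10
for _ in range(3):
    manager.record_instruction("hip_angle")
print(manager.is_within("hip_angle", 75.0, 90.0))  # limit is now 15
```

Capping the total loosening keeps a pose from becoming trivially passable, while still letting a less flexible user move on after a few attempts.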
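
The horizontal flip can be illustrated with a short sketch. It assumes a pose is stored as a dictionary from keypoint name to `(x, y)` pixel coordinates and that keypoint names use a `left_`/`right_` prefix; both the representation and the function names `flip_pose` and `flip_instruction` are hypothetical and may differ from the real data structures.

```python
import re

_SWAP = {"left": "right", "right": "left"}

# Match "left"/"right" only when not embedded in a longer word, so that
# "upright" is untouched but "left_knee" and "Left-Facing" are handled.
_SIDE = re.compile(r"(?<![A-Za-z])(left|right)(?![A-Za-z])", re.IGNORECASE)


def _swap_side(match):
    """Swap one matched side word, preserving its capitalization."""
    word = match.group(0)
    swapped = _SWAP[word.lower()]
    if word.isupper():
        return swapped.upper()
    if word[0].isupper():
        return swapped.capitalize()
    return swapped


def flip_instruction(text):
    """Exchange every 'left' and 'right' in an instruction string."""
    return _SIDE.sub(_swap_side, text)


def flip_pose(keypoints, image_width):
    """Mirror a pose about the vertical axis and swap left/right keypoints."""
    return {
        flip_instruction(name): (image_width - x, y)
        for name, (x, y) in keypoints.items()
    }


pose = {"left_knee": (100.0, 200.0), "nose": (320.0, 50.0)}
print(flip_pose(pose, 640))
print(flip_instruction("Bend your left knee and keep your torso upright"))
```

Mirroring the x coordinate alone is not enough: the keypoint labels also have to be swapped, otherwise the flipped pose would have its left knee on the anatomical right side.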

Deliverables I hope to complete next week

- Implement and visualize overall scoring for the poses so that the user can use the real-time scoring as a reference

- Improve the instruction generator by adding more detections. For example, the generator needs to detect whether the user is touching the floor with the correct body parts.

- Start preparing for the final presentation and the poster

Chelsea’s Weekly Status

Accomplished Tasks

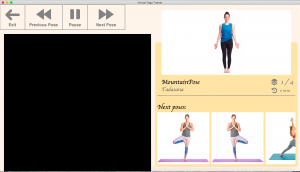

Since we added a lot more poses, I added more data structures and methods to the routine class for easy communication among the UI, the routines, and the verbal instruction generation classes.

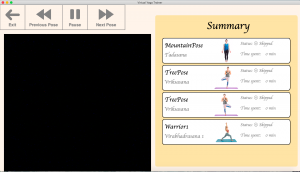

I also made major modifications to the summary page because our routine sizes grew a lot from our first demo and poses could no longer fit in the original layout.

During our meetings to fully integrate everything, I fixed a lot of minor UI bugs and specified styles for all the components. I also added the visual feedback that overlays on top of the video feed, which consists of a single fixed-size circle that shows the user where an angle in their pose does not match the standard pose. However, it’s not working very well, and we suspect that the cause is inaccuracy on the OpenPose side. We are conducting further tests with the visual feedback this weekend before the demo. As a backup or a plus, I will be adding a progress bar that shows the user how close they are to completing a pose, based on the number of angles that are within the specified threshold.
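
One way the progress value could be computed is sketched below: the fraction of compared angles that fall within their thresholds. The function name `pose_progress`, the dictionary-based inputs, and the choice to count an undetected angle as not matched are assumptions made for illustration.

```python
def pose_progress(user_angles, standard_angles, thresholds):
    """Return the fraction (0.0 to 1.0) of angles within their threshold.

    user_angles:     angle name -> measured angle in degrees (may omit
                     angles whose keypoints were not detected)
    standard_angles: angle name -> angle in the standard pose
    thresholds:      angle name -> allowed deviation in degrees
    """
    total = len(standard_angles)
    if total == 0:
        return 0.0
    matched = sum(
        1
        for name, standard in standard_angles.items()
        # An angle that could not be measured counts as not matched.
        if name in user_angles
        and abs(user_angles[name] - standard) <= thresholds[name]
    )
    return matched / total


standard = {"left_knee": 90.0, "right_knee": 180.0, "hips": 120.0, "left_elbow": 180.0}
limits = {name: 15.0 for name in standard}
user = {"left_knee": 100.0, "right_knee": 175.0, "hips": 80.0, "left_elbow": 170.0}
print(pose_progress(user, standard, limits))  # three of four angles match
```

The returned fraction can be fed directly to a progress bar widget that expects a value between 0 and 1.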

Tasks for next week

Next week I hope to fix any remaining UI bugs and introduce features to improve user experience. Then I hope to start making the presentation with my teammates as well as drafting the report.

Sandra’s Weekly Status

Accomplished Tasks

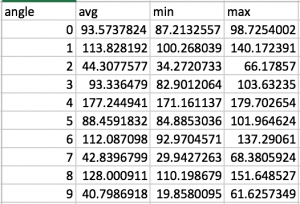

To cut down on unnecessary instructions, I implemented logic that reduces repeated instructions. In addition, the user now has to hold the pose for 5 seconds. I also implemented runtime logging, which keeps track of all of our metrics; a log file is generated each time the application runs.
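
The 5-second hold requirement can be sketched as a small timer that is updated once per frame. This is only an illustration under assumptions: the class name `HoldTimer` is hypothetical, and the sketch assumes the hold restarts from zero whenever the pose is broken, which may not match the actual behavior.

```python
class HoldTimer:
    """Tracks whether a correct pose has been held long enough."""

    def __init__(self, required_seconds=5.0):
        self.required_seconds = required_seconds
        self._hold_start = None  # time at which the current hold began

    def update(self, pose_correct, now):
        """Feed one frame's result; return True once the hold is complete.

        pose_correct: whether the current frame matches the standard pose
        now:          current time in seconds (e.g. from time.monotonic())
        """
        if not pose_correct:
            # Assumed behavior: breaking the pose resets the hold.
            self._hold_start = None
            return False
        if self._hold_start is None:
            self._hold_start = now
        return now - self._hold_start >= self.required_seconds


timer = HoldTimer()
print(timer.update(True, now=0.0))   # hold starts
print(timer.update(True, now=3.0))   # still holding
print(timer.update(False, now=4.0))  # pose broken, hold resets
print(timer.update(True, now=5.0))   # new hold starts
print(timer.update(True, now=10.0))  # held for 5 seconds
```

Passing the timestamp in as an argument, rather than reading the clock inside the class, makes the logic easy to test with recorded frames.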

During our meeting this week, I worked with Chelsea and Tian to do lots of testing of the application. We tested some of the new poses and how they work with the new logic that we each implemented.

Tasks for next week

I need to make sure that the program allows the user to exit a routine and begin a new one. In addition, I will continue collecting logging info and turning it into tables and other graphics. I will also begin working on the final report, presentation, and poster.