Team Update

One risk we have identified is poor lighting, which produces photos that are too dark and of low quality. Poor lighting also reduces the accuracy of OpenCV face detection. For our demo, we have tried to find a brighter location for the robot; if we continue to run into problems, we may attach a light ring to the robot so that every photo is bright enough.
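One inexpensive way to catch too-dark photos before running face detection is to compute the mean brightness of a grayscale frame and flag frames that fall below a threshold. The sketch below assumes 8-bit grayscale pixels (0 to 255) supplied as rows of integers, which a NumPy image array from OpenCV also satisfies when iterated. `DARK_THRESHOLD` is a hypothetical value that would need tuning against real photos from the robot.

```python
from typing import Iterable, Sequence

# Hypothetical cutoff on a 0-255 scale; must be tuned against real photos.
DARK_THRESHOLD = 60.0


def mean_brightness(gray_rows: Iterable[Sequence[int]]) -> float:
    """Return the mean pixel value of an 8-bit grayscale image given as rows."""
    total = 0
    count = 0
    for row in gray_rows:
        # int() avoids uint8 overflow if the rows come from a NumPy array.
        total += sum(int(p) for p in row)
        count += len(row)
    if count == 0:
        raise ValueError("image has no pixels")
    return total / count


def is_too_dark(gray_rows: Iterable[Sequence[int]],
                threshold: float = DARK_THRESHOLD) -> bool:
    """Flag a frame whose average brightness falls below the threshold."""
    return mean_brightness(gray_rows) < threshold


if __name__ == "__main__":
    dim_frame = [[20, 30, 25], [35, 40, 30]]          # mean 30
    bright_frame = [[120, 200, 180], [150, 170, 160]]  # mean about 163
    print(is_too_dark(dim_frame))     # True
    print(is_too_dark(bright_frame))  # False
```

A frame flagged this way could simply be retaken, and a high rate of flagged frames would be a concrete signal that the light ring is needed.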

Another significant risk continues to be mounting our IR and thermal sensors and getting accurate data from them. We have begun testing the sensors we recently received, and it appears that by averaging or voting across multiple sensors we can get accurate thermal data from a reasonable distance of a few feet. However, we have not yet mounted the sensors onto the tripod structure, so some risk remains.
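The averaging and voting approaches mentioned above might look like the following sketch. It assumes each thermal sensor reports one temperature in °C for the region it sees, that a reading above a hypothetical `HUMAN_TEMP_C` threshold counts as that sensor's vote for a human, and that a strict majority of votes is required. The threshold and the example readings are placeholders, not values from our testing.

```python
from statistics import fmean
from typing import Sequence

# Hypothetical threshold for a "human present" reading from one thermal sensor.
HUMAN_TEMP_C = 30.0


def averaged_temperature(readings_c: Sequence[float]) -> float:
    """Average the simultaneous readings from several thermal sensors."""
    return fmean(readings_c)


def majority_vote(readings_c: Sequence[float],
                  threshold_c: float = HUMAN_TEMP_C) -> bool:
    """Return True if a strict majority of sensors read above the threshold."""
    votes = sum(1 for r in readings_c if r > threshold_c)
    return votes * 2 > len(readings_c)


if __name__ == "__main__":
    readings = [31.2, 29.5, 32.0]  # made-up example: one reading per sensor
    print(round(averaged_temperature(readings), 2))  # 30.9
    print(majority_vote(readings))                   # True (2 of 3 votes)
```

Voting tolerates one faulty or misaimed sensor, while averaging smooths noise but can be pulled off by a single outlier, so the two may be worth comparing once the sensors are mounted.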

There have been no significant changes to our system design over the past week; we have primarily been implementing and testing the current design.

We made only minimal changes to our Gantt chart, which is shown here.

Adriel

This week I continued testing our IR and thermal sensors with Cornelia. I modified the tripod mount so that it attaches to the Roomba correctly while still leaving access to the USB-to-serial DIN cable. I also designed the files for the surface on which the RPi, Arduinos, and sensors will be placed, and laser-cut them; occasionally the laser did not cut all the way through the balsa wood, even with multiple passes, so I finished those cuts with a box cutter. Finally, I worked with Mimi and Cornelia to solder wires onto the sensors.

My progress is currently behind schedule because finishing the cuts with the box cutter took slightly longer than expected. Soldering the sensor wires also took much longer than expected: we had planned to use pre-made male-to-female wires, but they would not all fit into the small box where the sensor pins are located. By this point, I should have made some progress on the motorized stick. To catch up, I will start designing the motorized stick early this week and take concrete steps toward completing its construction.

By next week, I hope to have the motorized stick built and the LCD display integrated into our project, and to have everything tested and ready for our mid-semester demo.

Mimi

This week I completed the code that automatically uploads captured photos to a Dropbox folder. I also did some basic timing tests and found that writing an image from its in-memory array to a JPEG file takes approximately 0.1 seconds, and uploading it to Dropbox takes around 2 to 3 seconds. I also tested taking images with the Raspberry Pi camera and using OpenCV to outline faces. This testing suggests that lighting may be an issue for our project: in poor lighting, OpenCV face detection is less accurate and the images are of poor quality. Lastly, Cornelia and I integrated our code so that images can be taken when we sense a human while the Roomba is moving.
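The timing measurements above (roughly 0.1 seconds to write the JPEG and 2 to 3 seconds to upload it) can be repeated consistently with a small helper that times each stage separately over several runs. In this sketch, `fake_write_jpeg` and `fake_upload` are hypothetical stand-ins that only sleep; on the robot they would be replaced by the real JPEG-write and Dropbox-upload calls.

```python
import time
from statistics import fmean
from typing import Callable, List


def time_stage(stage: Callable[[], object], repeats: int = 5) -> List[float]:
    """Run one pipeline stage several times and return each duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        stage()
        durations.append(time.perf_counter() - start)
    return durations


def fake_write_jpeg() -> None:
    # Hypothetical stand-in for writing the in-memory image array to a .jpg file.
    time.sleep(0.01)


def fake_upload() -> None:
    # Hypothetical stand-in for uploading the .jpg file to Dropbox.
    time.sleep(0.02)


if __name__ == "__main__":
    for name, stage in [("write", fake_write_jpeg), ("upload", fake_upload)]:
        runs = time_stage(stage, repeats=3)
        print(f"{name}: mean {fmean(runs):.3f} s, max {max(runs):.3f} s")
```

Reporting the maximum alongside the mean is useful here because network upload times tend to vary from run to run, and the slowest upload is what the robot would actually wait on.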

My progress is currently on schedule.

In the next week, we need to prepare for our mid-semester demo on Wednesday. I will continue working with Cornelia to work out any kinks in the code and to integrate the sensor data. We will also write code that adjusts the Roomba's position based on camera data to get better photos. Lastly, I will keep measuring image transfer speed and try to improve it.
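Adjusting the Roomba's position based on camera data could start from the horizontal offset of a detected face from the center of the frame. This sketch assumes the face arrives as an `(x, y, w, h)` bounding box, the rectangle format returned by OpenCV's `CascadeClassifier.detectMultiScale`, that the camera faces forward and the image is not mirrored, and it uses a hypothetical `DEAD_BAND` so the robot does not keep correcting for small offsets. Turning the returned direction into an actual drive command is left out, since that depends on our Roomba control code.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), as returned by detectMultiScale

# Hypothetical dead band: offsets within 10% of the half-width count as centered.
DEAD_BAND = 0.10


def face_offset(box: Box, frame_width: int) -> float:
    """Return the face center's horizontal offset in [-1, 1]; negative is left of center."""
    x, _y, w, _h = box
    face_center = x + w / 2
    half_width = frame_width / 2
    return (face_center - half_width) / half_width


def steer_direction(box: Box, frame_width: int, dead_band: float = DEAD_BAND) -> str:
    """Decide whether to rotate left, rotate right, or hold to center the face."""
    offset = face_offset(box, frame_width)
    if offset < -dead_band:
        return "left"
    if offset > dead_band:
        return "right"
    return "hold"


if __name__ == "__main__":
    width = 640
    print(steer_direction((100, 120, 80, 80), width))  # center x=140, prints left
    print(steer_direction((290, 120, 60, 60), width))  # center x=320, prints hold
    print(steer_direction((500, 120, 80, 80), width))  # center x=540, prints right
```

The dead band trades framing precision for stability: a wider band means fewer small corrections while the Roomba is moving, at the cost of faces sitting slightly off center.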

Cornelia

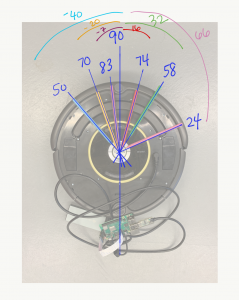

This past week, I worked with Adriel to test the IR and thermal camera sensors in isolation from the system using an Arduino. I then worked on connecting the Arduino to the RPi to send signals from the sensors to the RPi. Then I ultimately integrated the received signals into the Roomba code. The code is able to detect humans, but the logic for stopping the Roomba 3 ft away still needs to be tested and debugged. Additionally, after detection, we need to look for a face and trigger image capture if detected. I also made changes to the Roomba code such that when it detects things with its built-in sensors, it turns 60º away from the triggered sensor, not the center, front-most point. This required some measurements and calculations shown in the attached image here:

According to our Gantt chart, I am on schedule. We have connected and tested at least one of each type of sensor (IR and thermal camera), so connecting the rest will just be a matter of duplication. We have received all of our IR sensors, thermal camera sensors, and Arduinos. We have also integrated the camera code with the Roomba movement code and are able to take photos when a face is detected while the Roomba is moving.
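The 60° turn-away rule described above can be expressed as a small angle calculation. This sketch assumes each built-in sensor sits at a known angle from the robot's forward heading (positive to the left), that the Roomba rotates in place about its center, and that the goal is a new heading 60° from the triggered sensor's direction, on the side away from it. The angles in `SENSOR_ANGLES_DEG` are hypothetical placeholders, not the measurements from our image.

```python
# Hypothetical sensor placements in degrees from the forward heading (positive = left).
SENSOR_ANGLES_DEG = {
    "left": 65.0,
    "front_left": 35.0,
    "center_left": 10.0,
    "center_right": -10.0,
    "front_right": -35.0,
    "right": -65.0,
}

TURN_AWAY_DEG = 60.0


def turn_angle(sensor_angle_deg: float, turn_away_deg: float = TURN_AWAY_DEG) -> float:
    """Return the in-place rotation (positive = counterclockwise, i.e. left) that
    leaves the new heading turn_away_deg away from the triggered sensor's direction."""
    if sensor_angle_deg >= 0:
        # Obstacle on the left (or dead ahead): aim turn_away_deg to its right.
        return sensor_angle_deg - turn_away_deg
    # Obstacle on the right: aim turn_away_deg to its left.
    return sensor_angle_deg + turn_away_deg


if __name__ == "__main__":
    for name, angle in SENSOR_ANGLES_DEG.items():
        print(f"{name}: rotate {turn_angle(angle):+.0f} deg")
```

Under this reading, a hit near the center produces a rotation close to the old fixed 60° turn, while a hit on a side sensor needs only a small correction because the obstacle is already off to the side.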

This next week, before the mid-semester demo, I will test our robot with the team and make sure the IR and thermal camera sensors can detect humans and that the Roomba stops at least 3 ft away. After the demo, I will work with Mimi to move the Roomba according to the camera frames received and the positions of faces within them. I will also work on the second reading assignment, due next Sunday night.
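The stop condition described above (detect a human, then stop at least 3 ft away) reduces to a simple check once a human-detection result and an IR distance are available. This sketch assumes the IR reading has already been converted to centimeters and adds a hypothetical `STOP_MARGIN_CM` so that the Roomba's stopping distance does not carry it inside 3 ft; the margin and the function name `should_stop` are placeholders.

```python
FEET_TO_CM = 30.48
STOP_DISTANCE_CM = 3 * FEET_TO_CM  # 3 ft = 91.44 cm
STOP_MARGIN_CM = 15.0  # Hypothetical allowance for the Roomba's stopping distance.


def should_stop(human_detected: bool, ir_distance_cm: float,
                margin_cm: float = STOP_MARGIN_CM) -> bool:
    """Stop once a human is detected within 3 ft plus a safety margin."""
    return human_detected and ir_distance_cm <= STOP_DISTANCE_CM + margin_cm


if __name__ == "__main__":
    print(should_stop(True, 200.0))  # False: human still far away
    print(should_stop(True, 100.0))  # True: within 91.44 + 15 cm
    print(should_stop(False, 50.0))  # False: close object, but no human detected
```

The margin would need to be measured on the real robot, since the distance the Roomba covers after the stop command depends on its speed.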