Our team has drawn on classes such as 18220, as well as the more advanced mechatronics and device science classes my teammates have taken, to develop the embossing and communication design. The software design is inspired by the web hacking learned in 18330, wrappers and database/cache organization learned in 18213, and testing/verification skills learned in 18240/18341. While the actual algorithms aren’t modeled on an existing algorithm, the analytical skills and the practice of coming up with our own stem from all the classes we have taken at Carnegie Mellon, but for me especially 15122 and 18213.

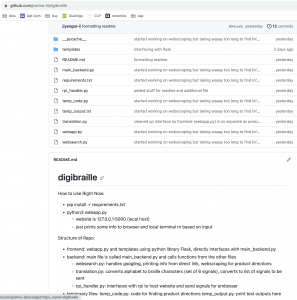

At the beginning of this week, I created a GitHub repository (https://github.com/joshna-ii/digibraille) to help keep track of my progress and allow my teammates to work with my code, especially when Zeynep develops the frontend further. Later in the week I spent quite a bit of time on the README, making it as easy to understand and use as possible even in these early stages in order to set a precedent.

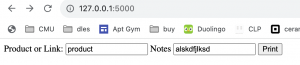

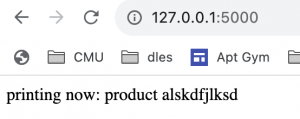

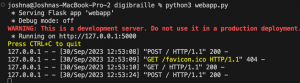

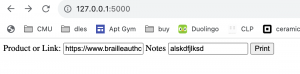

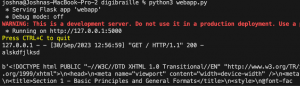

The main frontend file is called webapp.py. I spent the first half of the week using the Flask library to create a website, which is currently hosted locally (127.0.0.1:5000) when you run the Python program. This is an example output and handler:

In general, I have been able to handle the different types of non-edge-case inputs and a couple of edge-case inputs (no input, poorly formed web links) from the website POST stage through to the string that needs to be translated.
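As a rough illustration of the kind of input handling described above, here is a minimal sketch that sorts a submitted string into "empty", "link", or "product". The function name `classify_input` and the heuristics are assumptions for illustration, not the actual code in webapp.py.

```python
from urllib.parse import urlparse


def classify_input(raw):
    """Return (kind, value) where kind is 'empty', 'link', or 'product'.

    Hypothetical helper: the rules below are a simple heuristic, not the
    project's real validation logic.
    """
    text = (raw or "").strip()
    if not text:
        return ("empty", "")
    # Add a scheme when the user typed something like "www.example.com/page".
    candidate = text if "://" in text else "https://" + text
    parsed = urlparse(candidate)
    host = parsed.netloc
    looks_like_link = (
        " " not in text
        and parsed.scheme in ("http", "https")
        and "." in host
        and not host.startswith(".")
        and not host.endswith(".")
    )
    if looks_like_link:
        return ("link", candidate)
    # Anything else, including a poorly formed link, is treated as a product name.
    return ("product", text)
```

A heuristic like this will misclassify some inputs (a product name with a dot and no spaces would look like a link), so the real handler likely needs extra checks.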

Based on the HTML user input, the webapp.py frontend handling file calls the run_backend function in main_backend.py. This file brings together the different parts of the backend: rpi_handler.py, translation.py, websearch.py, and soon a database file.

websearch.py does the actual web scraping and queries, depending on whether main_backend.py received a link or a product name. I have a function called google which returns the first link of a Google search for the given keywords.

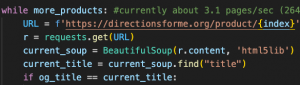

I have also created a function that handles directly entered links, as well as a function that finds matching keywords across all the pages of websites such as directionsforme and backoftheboxrecipes using the requests library and the Beautiful Soup library.
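The keyword-matching idea can be sketched roughly as follows. To keep the example runnable without third-party packages, it uses the standard library's `html.parser` in place of Beautiful Soup and takes already-downloaded HTML instead of fetching pages with requests. The names `TextExtractor`, `keyword_score`, and `best_match` are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def keyword_score(html, keywords):
    """Count how many of the keywords appear in the page's visible text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return sum(1 for word in keywords if word.lower() in text)


def best_match(pages, keywords):
    """pages maps URL -> HTML; return the best-scoring URL, or None if no hits."""
    scored = [(keyword_score(html, keywords), url) for url, html in pages.items()]
    scored = [item for item in scored if item[0] > 0]
    return max(scored)[1] if scored else None
```

Substring matching is deliberately crude here; it counts "pan" as a hit inside "pancake", so a real matcher would probably compare whole words.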

The bulk of the execution code for the third function is currently in my temp_code.py file (the outlined functions are still clearly connected everywhere) because I have been running unit tests, edge cases, and one-off tests. While I have been able to improve efficiency tenfold by only accessing certain attributes of the webpages, it is still far too slow. My plan is to spend the beginning of next week transferring data from these websites into an internal database, by modifying the function I created this week, in order to make this process faster. If the internal database does not return sufficient results, main_backend.py will use the google function mentioned above and scrape directions from the page it returns.
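The planned "internal database first, web search second" flow might look something like the sketch below. It uses SQLite from the standard library purely as an assumption, since the database has not been chosen or built yet. The table layout and the names `open_cache`, `lookup_directions`, and `web_fallback` are hypothetical; `web_fallback` stands in for the google-and-scrape path.

```python
import sqlite3


def open_cache(path=":memory:"):
    """Create (or open) the local table; table and column names are hypothetical."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS directions ("
        "product TEXT PRIMARY KEY, directions_text TEXT NOT NULL)"
    )
    return conn


def lookup_directions(conn, product, web_fallback):
    """Check the local table first; call web_fallback(product) only on a miss."""
    key = product.strip().lower()
    row = conn.execute(
        "SELECT directions_text FROM directions WHERE product = ?", (key,)
    ).fetchone()
    if row is not None:
        return row[0]
    # Miss: fall back to the slower web path and remember a non-empty result.
    text = web_fallback(key)
    if text:
        with conn:
            conn.execute(
                "INSERT OR REPLACE INTO directions (product, directions_text) "
                "VALUES (?, ?)",
                (key, text),
            )
    return text
```

Passing the fallback in as a function keeps the slow network path out of the lookup logic, which also makes the cache easy to unit test with a stub.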

I also spent a couple of hours yesterday learning how to work with the Raspberry Pi after receiving it.

I am having trouble communicating with it, as my laptop does not seem to recognize the device when I ping localhost. I am getting an Ethernet cable today to try connecting that way, and if that doesn’t work, I will go to campus and try to connect it to one of the computers there. I am spending today working on rpi_handler.py in order to host the basic website through the Raspberry Pi and to get the basic framework down for how the Python files will, after translation, send signals for the location and movement of the solenoids and motors to the Raspberry Pi through the GPIO pins.

I have also spent time this week working on the design presentation for next week including creating block diagrams, scheduling, designing algorithms, figuring out interactions between components, and calculating quantitative expectations.

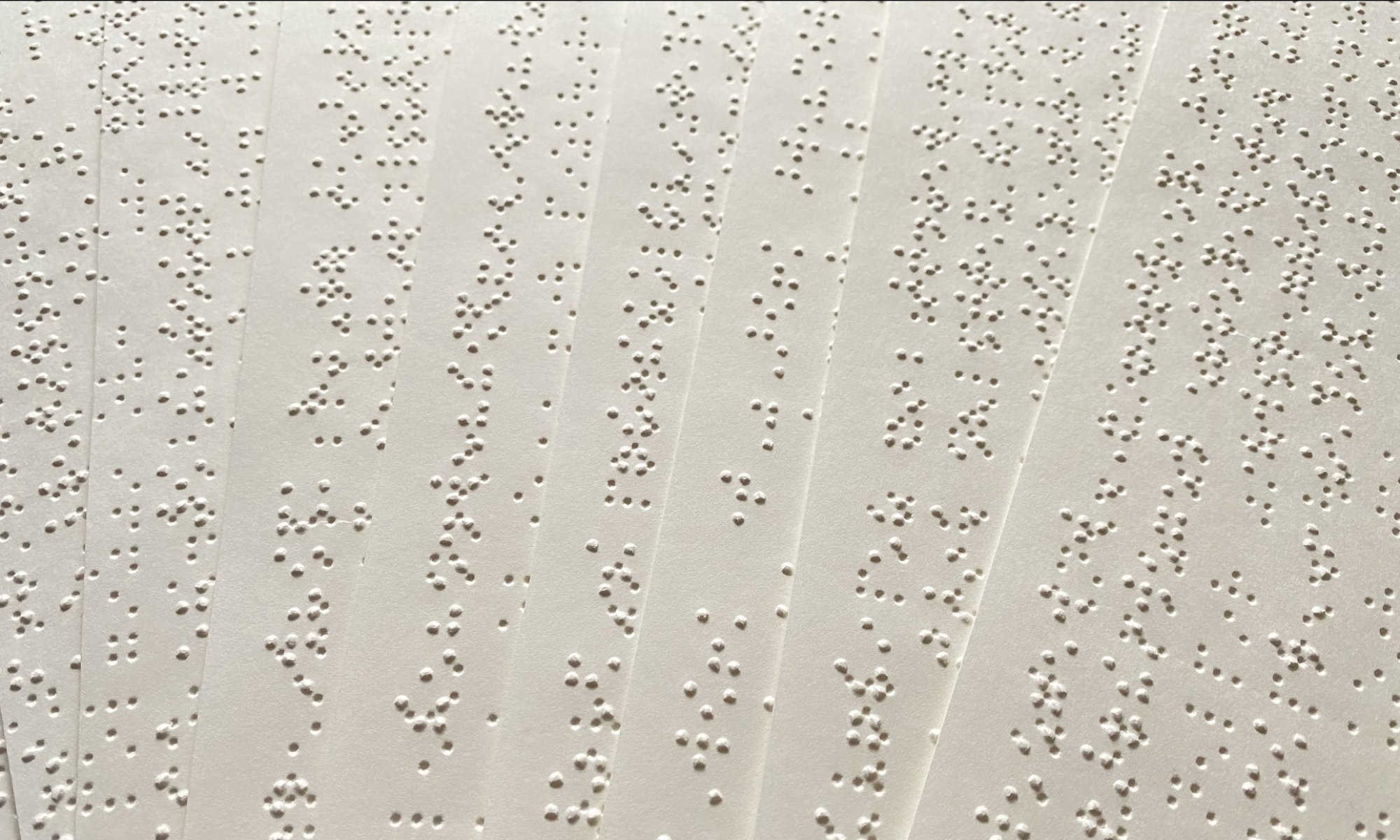

While I did not achieve the reach goal I set last week of getting 50% of the web scraping done, because this method turned out to be inefficient, I am still on track with our expected schedule for the web scraping, website, and integration files, so I do not have to reorganize the schedule. My goals for this week are to get the basic communication between the Raspberry Pi and the Python scripts working, get 50% of the web scraping done using a database (including creating that database), and start the braille translations while making a more detailed schedule based on the different rules braille follows. I plan to translate the current string output into a list of sets of 6 signals using some of the more general braille rules, after learning braille better myself, since there isn’t a 1:1 mapping between the English alphabet and braille characters.
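As a starting point for the "list of sets of 6 signals" idea, here is a minimal sketch covering only uncontracted lowercase letters and spaces. It deliberately ignores capital and number indicators, punctuation, and contractions, which are exactly where the 1:1 mapping breaks down. The names `LETTER_DOTS` and `to_cells` are hypothetical and not taken from translation.py.

```python
# Dot numbers (1-6) raised for the letters a-j in standard braille.
_A_TO_J = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4), "j": (2, 4, 5),
}


def _build_letter_dots():
    dots = dict(_A_TO_J)
    # k-t repeat a-j with dot 3 added.
    for base, letter in zip("abcdefghij", "klmnopqrst"):
        dots[letter] = _A_TO_J[base] + (3,)
    # u, v, x, y, z repeat a-e with dots 3 and 6 added.
    for base, letter in zip("abcde", "uvxyz"):
        dots[letter] = _A_TO_J[base] + (3, 6)
    # w does not follow the pattern: it is j plus dot 6.
    dots["w"] = _A_TO_J["j"] + (6,)
    # A space is a cell with no raised dots.
    dots[" "] = ()
    return dots


LETTER_DOTS = _build_letter_dots()


def to_cells(text):
    """Map each supported character to six 0/1 signals, ordered dot 1 to dot 6."""
    cells = []
    for ch in text.lower():  # lowercasing drops the capital indicator on purpose
        if ch not in LETTER_DOTS:
            raise ValueError(f"unsupported character: {ch!r}")
        raised = LETTER_DOTS[ch]
        cells.append([1 if dot in raised else 0 for dot in range(1, 7)])
    return cells
```

Each inner list could later drive six solenoids, one per dot, though the actual signal format for the hardware is still to be decided.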