This week, as a group, we cleaned up the design presentation based on the feedback we received on our proposal presentation.

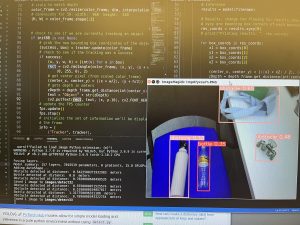

On the CV side, I continued working on a script that combines OpenCV tracking with depth data from the LiDAR camera. Specifically, I modified last week's CSRT tracking script so that, instead of reading the RealSense as a plain webcam, it reads the RealSense frame stream through pyrealsense2, which is needed to get both depth and color data. From there, I scaled the stream dimensions so that points in the color stream map onto the depth stream, calculated the center point of the selected bounding box, and displayed the distance at that point, in meters, on the bounding box while it tracked the selected object. The depth readings worked well on the bottles at distances under 1 meter. Next, I plan to find the camera's minimum and maximum usable range by testing the accuracy of its distance readings at different distances. This is a useful stepping stone toward combining depth readings with the bounding boxes we will get from Mae's YOLOv5 model.
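A minimal sketch of this kind of tracking-plus-depth loop is below; it is an illustration, not the actual script. It assumes a 1280x720 color stream and a 640x480 depth stream (adjust to modes your camera supports), reads frames with pyrealsense2, tracks a user-selected box with OpenCV's CSRT tracker, and maps the box center from color to depth coordinates by proportional scaling. That scaling ignores the physical offset between the color and depth sensors; `rs.align` is the SDK's way to reproject depth onto the color image if the approximation proves too coarse. The helper names `bbox_center`, `scale_point`, and `make_csrt_tracker` are hypothetical.

```python
"""Sketch: CSRT tracking on the RealSense color stream with a depth readout at the box center."""
# Assumptions (not from the original script): the stream resolutions, the window name,
# and proportional color->depth scaling, which ignores the offset between the two sensors.

COLOR_SIZE = (1280, 720)  # assumed color resolution (width, height)
DEPTH_SIZE = (640, 480)   # assumed depth resolution (width, height)


def bbox_center(bbox):
    """Integer center (x, y) of an OpenCV-style (x, y, w, h) box."""
    x, y, w, h = bbox
    return int(x + w / 2), int(y + h / 2)


def scale_point(pt, src_size, dst_size):
    """Map a pixel from one stream's resolution to another's by proportional scaling."""
    x, y = pt
    src_w, src_h = src_size
    dst_w, dst_h = dst_size
    sx = int(x * dst_w / src_w)
    sy = int(y * dst_h / src_h)
    # Clamp so the result is always a valid pixel index in the destination frame.
    return max(0, min(sx, dst_w - 1)), max(0, min(sy, dst_h - 1))


def make_csrt_tracker():
    import cv2
    # CSRT ships with opencv-contrib-python; some 4.x builds only expose it under cv2.legacy.
    if hasattr(cv2, "TrackerCSRT_create"):
        return cv2.TrackerCSRT_create()
    return cv2.legacy.TrackerCSRT_create()


def main():
    # Imported here so the helpers above can be used without the camera SDK installed.
    import cv2
    import numpy as np
    import pyrealsense2 as rs

    pipeline = rs.pipeline()
    config = rs.config()
    config.enable_stream(rs.stream.color, *COLOR_SIZE, rs.format.bgr8, 30)
    config.enable_stream(rs.stream.depth, *DEPTH_SIZE, rs.format.z16, 30)
    pipeline.start(config)

    tracker = None
    try:
        while True:
            frames = pipeline.wait_for_frames()
            color_frame = frames.get_color_frame()
            depth_frame = frames.get_depth_frame()
            if not color_frame or not depth_frame:
                continue
            # Copy so we can draw on the image without touching the SDK's frame buffer.
            image = np.asanyarray(color_frame.get_data()).copy()

            if tracker is None:
                # Let the user draw the initial box on the first frame.
                bbox = cv2.selectROI("tracking", image, False)
                if bbox[2] == 0 or bbox[3] == 0:
                    break  # selection cancelled
                tracker = make_csrt_tracker()
                tracker.init(image, bbox)
                continue

            ok, bbox = tracker.update(image)
            if ok:
                x, y, w, h = (int(v) for v in bbox)
                cx, cy = scale_point(bbox_center((x, y, w, h)), COLOR_SIZE, DEPTH_SIZE)
                meters = depth_frame.get_distance(cx, cy)  # 0.0 means no valid depth there
                cv2.rectangle(image, (x, y), (x + w, y + h), (0, 255, 0), 2)
                cv2.putText(image, f"{meters:.2f} m", (x, max(y - 8, 0)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 255, 0), 2)
            cv2.imshow("tracking", image)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        pipeline.stop()
        cv2.destroyAllWindows()
```

Call `main()` with the camera connected, draw a box around the bottle, and press `q` to quit. A reading of 0.00 m means the depth stream had no valid value at that pixel.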

Mae and I also worked this week on loading her model onto the Jetson and testing whether inference worked, using a simple script that captures a frame on the Jetson every 10 seconds and runs it through her trained model. Inference worked fairly well, with some limitations: the model was most effective at detecting water bottles against a solid, contrasting background, and less effective when a bottle was held in front of a window or blended slightly into the Hamerschlag lab floor. We will need to test inference at different spots in the building to find the area with the highest contrast.
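For reference, a sampling script of this shape might look like the sketch below. It is not the script we ran: it assumes the custom YOLOv5 weights are loaded through PyTorch Hub (`ultralytics/yolov5`, `"custom"`), that `best.pt` is a placeholder weights path, and that the camera is reachable as an ordinary video device at index 0. `SampleTimer` is a hypothetical helper that enforces the one-sample-per-10-seconds rate.

```python
"""Sketch: grab a frame every 10 s and run it through a custom YOLOv5 model."""
import time


class SampleTimer:
    """ready() returns True at most once per `interval_s` seconds."""

    def __init__(self, interval_s):
        self.interval_s = interval_s
        self.last = None

    def ready(self, now):
        if self.last is None or now - self.last >= self.interval_s:
            self.last = now
            return True
        return False


def main(weights="best.pt", camera_index=0, interval_s=10.0):
    # Imported here so SampleTimer can be used without torch/OpenCV installed.
    import cv2
    import torch

    # Load custom-trained YOLOv5 weights via PyTorch Hub ("best.pt" is a placeholder path).
    model = torch.hub.load("ultralytics/yolov5", "custom", path=weights)
    cap = cv2.VideoCapture(camera_index)
    timer = SampleTimer(interval_s)
    try:
        while True:
            # Keep reading every frame so the capture buffer does not hand us a stale one.
            ok, frame = cap.read()
            if not ok:
                break
            if timer.ready(time.monotonic()):
                results = model(frame[..., ::-1])  # OpenCV gives BGR; YOLOv5 expects RGB
                print(results.pandas().xyxy[0])  # one row per detection: box, confidence, class
    finally:
        cap.release()
```

Reading continuously and only running inference when the timer fires keeps the sampled frame current; sleeping for 10 seconds between `cap.read()` calls could instead return a frame that sat in the driver's buffer.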

Once inference was working, I extended the script to add the depth stream, calculate the center pixel of each bounding box from the YOLOv5 network, and output a distance for each detected object, labeled by whether it was an obstacle or a bottle. For now, these calculations run every 10 seconds, since we kept the one-sample-per-10-seconds framework from before. Getting the LiDAR working with Mae's YOLOv5 model was the main achievement this week, since it lets us begin fine-tuning both the model and the parameters of the LiDAR camera.
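The per-detection distance step can be sketched as follows, under stated assumptions. Detections are taken in YOLOv5's `results.xyxy[0]` row layout `(x1, y1, x2, y2, confidence, class)`, and the class-index mapping `{0: "bottle", 1: "obstacle"}` is hypothetical, since the real indices depend on how the model was trained. `detection_distances` takes any depth-lookup callable, so it can be fed a RealSense depth frame's `get_distance`. In the `main()` wiring, `rs.align` reprojects depth onto the color image so box pixels index the depth frame directly; the 640x480 resolutions there are also assumptions.

```python
"""Sketch: distance to the center of each YOLOv5 bounding box, labeled by class."""
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical class-index mapping; the real one depends on the trained model.
CLASS_NAMES: Dict[int, str] = {0: "bottle", 1: "obstacle"}


def detection_distances(
    detections: Sequence[Sequence[float]],
    depth_at: Callable[[int, int], float],
    names: Dict[int, str] = CLASS_NAMES,
) -> List[Tuple[str, float]]:
    """For each (x1, y1, x2, y2, conf, cls) row, read depth at the box center."""
    out = []
    for x1, y1, x2, y2, _conf, cls in detections:
        cx = int((x1 + x2) / 2)
        cy = int((y1 + y2) / 2)
        label = names.get(int(cls), f"class {int(cls)}")
        out.append((label, depth_at(cx, cy)))
    return out


def main(weights="best.pt"):
    # Imported here so detection_distances can be used without the hardware stack.
    import numpy as np
    import pyrealsense2 as rs
    import torch

    model = torch.hub.load("ultralytics/yolov5", "custom", path=weights)  # placeholder path
    pipeline = rs.pipeline()
    config = rs.config()
    config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)  # assumed modes
    config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)
    pipeline.start(config)
    align = rs.align(rs.stream.color)  # reproject depth into the color camera's view
    try:
        frames = align.process(pipeline.wait_for_frames())
        color = np.asanyarray(frames.get_color_frame().get_data())
        depth = frames.get_depth_frame()
        results = model(color[..., ::-1])  # BGR -> RGB
        for label, meters in detection_distances(results.xyxy[0].tolist(), depth.get_distance):
            print(f"{label}: {meters:.2f} m")
    finally:
        pipeline.stop()
```

Taking depth from a single center pixel is the simplest choice; the median depth over a small window around the center is a common way to make the reading less sensitive to holes in the depth map.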

I feel I caught up somewhat this week, though I had hoped to also incorporate the YOLOv5 model into the OpenCV tracking algorithm and merge all three pieces I am working on (tracking, depth, and detection) into a single system. I will be working on this throughout next week. In particular, I will pay attention to the stream resolution and sample rate, since large resolutions cause severe delays when processing for depth. I'll need to find the highest sampling rate that still leaves time for the full processing to finish, or find a way to overlap the samples so that I can "look ahead" to the next frame and offset the sampling for a more frequent information retrieval rate.
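One common pattern for combining a slow detector with a fast tracker, offered only as a possible starting point, is detect-then-track: run YOLOv5 every N frames and let the CSRT tracker carry the box in between. The sketch below shows just the scheduling logic. `detect`, `make_tracker`, and `detect_every=30` are hypothetical placeholders; any object with OpenCV-style `init(frame, box)` and `update(frame) -> (ok, box)` methods works as a tracker.

```python
"""Sketch: run a slow detector every N frames and a fast tracker in between."""
from typing import Callable, Iterable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h)


def run_detect_then_track(
    frames: Iterable[object],
    detect: Callable[[object], Optional[Box]],
    make_tracker: Callable[[], object],
    detect_every: int = 30,
) -> List[Optional[Box]]:
    """Return one box (or None) per frame."""
    boxes: List[Optional[Box]] = []
    tracker = None
    for i, frame in enumerate(frames):
        if i % detect_every == 0 or tracker is None:
            # Detection frame: refresh the box from the detector and restart tracking.
            box = detect(frame)
            tracker = None
            if box is not None:
                tracker = make_tracker()
                tracker.init(frame, box)
        else:
            ok, box = tracker.update(frame)
            if not ok:
                box = None
                tracker = None  # lost the object: detect again on the next frame
        boxes.append(box)
    return boxes
```

With real components, `make_tracker` could be OpenCV's CSRT constructor and `detect` a wrapper that picks the highest-confidence bottle from `results.xyxy[0]` and converts its corners to `(x, y, w, h)`. `detect_every` then trades how fresh the detections are against how much time each frame spends in inference.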
