This week I focused on refactoring the Computer Vision Localizer, taking it from a ‘demo’ state to a clean implementation that follows our interface document. This meant restructuring the entire codebase, removing many hard-coded variables, and exposing the right functions to the rest of the application.

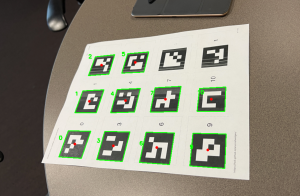

I also created 8 distinct images for the robots to display and calibrated them for the algorithm via a lookup table (LUT), which compensates for the camera and the NeoPixels not being perfectly color accurate.
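
To give a rough idea of how a calibration LUT like this can be used, here is a minimal sketch. It assumes each displayed image can be reduced to one representative color, and that the LUT stores the color the camera actually measures for each image rather than the color we asked the NeoPixels to show. The table values, the name `CALIBRATED_LUT`, and the function `classify_color` are all made up for illustration (only four of the eight entries are shown), so this is not the real calibration data or code.

```python
# Hypothetical calibration table: image ID -> RGB as measured by the camera.
# These numbers are invented for illustration, not real calibration results.
CALIBRATED_LUT = {
    0: (231, 42, 35),   # nominally red
    1: (48, 210, 60),   # nominally green
    2: (40, 61, 225),   # nominally blue
    3: (228, 215, 52),  # nominally yellow
}


def classify_color(observed, lut=CALIBRATED_LUT):
    """Return the image ID whose calibrated color is closest to `observed`."""

    def squared_distance(a, b):
        # Squared Euclidean distance in RGB space.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(lut, key=lambda image_id: squared_distance(lut[image_id], observed))


# A slightly-off red reading still maps to image 0.
robot_id = classify_color((220, 50, 40))
```

Matching against measured colors instead of nominal ones means the camera and LED errors are baked into the table, so the classifier itself can stay a simple nearest-neighbor lookup.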

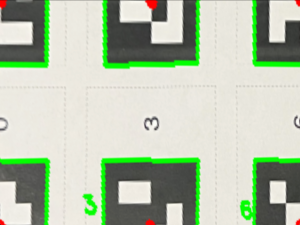

I also worked on making the computer vision code work with the fiducials. The fiducials are QR-code markers that a human places at the edges of the sandbox (table). Using the detected fiducial locations, I wrote an algorithm that works out which fiducials are present and in what order, applies the appropriate homography to the sandbox, and scales the result so that each pixel is 1mm wide. This allows us to place the camera anywhere, at any angle, and still get a consistent transformation of the field for localization, path planning, and the error controller! It also allows us to detect the pallets.
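
For anyone curious about the math, here is a minimal sketch of the homography step in plain Python. It assumes the four corner fiducials have already been detected and ordered top-left, top-right, bottom-right, bottom-left; the pixel coordinates and the 1200 x 600 mm sandbox size are invented for illustration, and the function names are hypothetical. In practice a library routine such as OpenCV's `cv2.getPerspectiveTransform` followed by `cv2.warpPerspective` would do the same job on the full image.

```python
def solve_linear(a, b):
    """Solve a @ x = b using Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_corners(src, dst):
    """Return the 3x3 homography mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per point pair, with the last entry fixed to 1.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a camera pixel to sandbox coordinates (perspective divide included)."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


# Hypothetical fiducial centers in camera pixels: TL, TR, BR, BL.
camera_corners = [(212.0, 148.0), (1034.0, 171.0), (1110.0, 655.0), (131.0, 620.0)]

# Hypothetical sandbox size; 1 output unit = 1 mm, so 1 pixel = 1 mm after warping.
WIDTH_MM, HEIGHT_MM = 1200.0, 600.0
sandbox_corners = [(0.0, 0.0), (WIDTH_MM, 0.0), (WIDTH_MM, HEIGHT_MM), (0.0, HEIGHT_MM)]

H = homography_from_corners(camera_corners, sandbox_corners)
```

Calling `apply_homography(H, pixel)` on any camera pixel then gives its position on the table in millimeters, which is why the camera placement stops mattering once the four corners are found.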

Attached below is an example. Pic 1 is a sample view from the camera (the fiducials will be cut out in reality), and Pic 2 is the transformed and scaled image, using fiducials [1,7,0,6] as the corner points.